Introduction

AI in the music industry is changing how songs are created, produced, and experienced. By analyzing melodies, rhythms, and audience behavior, artificial intelligence can automate or assist many stages of the creative process. Artists no longer rely only on manual production; many now use intelligent tools to mix, master, and promote their music.

Because of these innovations, technology and creativity now work together. Artists can compose faster, producers can reach global audiences, and record labels can understand listener behavior through data. Tools like Amper Music and AIVA assist with songwriting, while AI music analytics platforms reveal listening habits and preferences.

Why AI Matters in Music

AI adds precision, speed, and creativity to music production. It handles repetitive studio tasks so that artists can focus on artistry.

Key Benefits

Reduces time spent on editing and mastering.

Enhances audio quality with predictive algorithms.

Generates rhythms and melodies faster.

Provides listener insights for marketing.

For record labels, AI predicts emerging trends. Machine learning models can analyze streaming patterns to identify rising genres or beats. This allows producers to invest wisely and target specific audiences.

However, balance matters. While AI can detect rhythm and tone, it cannot replace the emotional depth of human experience. The best results come from combining human creativity with machine precision.

Ultimately, AI in the music industry is important because it brings efficiency and imagination together, letting artists innovate without losing authenticity.

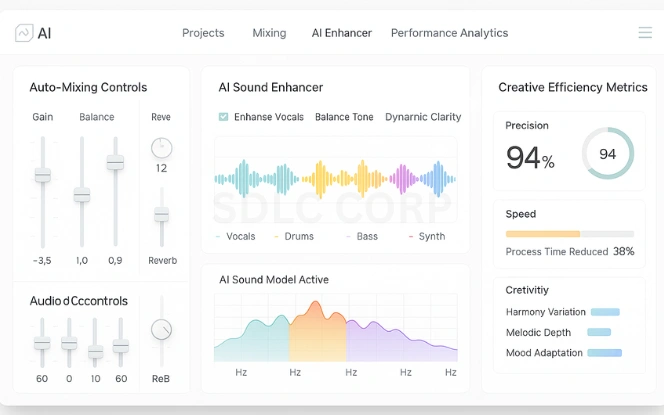

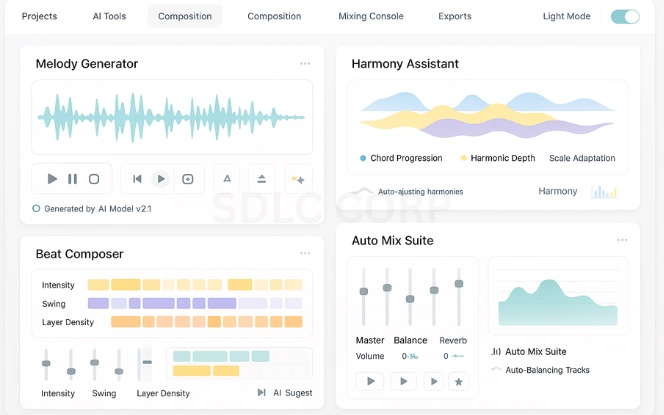

AI Music Production Tools

Modern AI music production tools simplify studio processes and make high-quality production accessible to everyone. These tools help professionals and beginners experiment freely without technical barriers.

Core Functions

Generative Models: Create melodies and harmonies from patterns learned in existing music.

Neural Networks: Learn from an artist’s style to generate new ideas.

Digital Signal Processing (DSP): Automatically adjusts EQ, reverb, and compression.

Auto Accompaniment: Adds rhythm, percussion, or harmony to existing tracks.
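To make the DSP item above more concrete, here is a minimal sketch of two operations an automated mastering step might apply: dynamic range compression followed by peak normalization. The function names, the threshold, the ratio, and the target peak are all illustrative assumptions, and the compressor is a simplified static one with no attack or release smoothing. An AI-assisted tool would typically choose such parameters from the audio itself; here they are fixed by hand.

```python
def compress(samples, threshold=0.5, ratio=4.0):
    """Static hard-knee compressor (hypothetical helper).

    Any part of a sample's magnitude above `threshold` is divided by `ratio`.
    Samples are assumed to be floats in the range -1.0 to 1.0.
    """
    out = []
    for s in samples:
        magnitude = abs(s)
        if magnitude > threshold:
            magnitude = threshold + (magnitude - threshold) / ratio
        out.append(magnitude if s >= 0 else -magnitude)
    return out


def normalize_peak(samples, target_peak=0.9):
    """Scale the signal so that its loudest sample reaches `target_peak`."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)  # silence stays silence
    gain = target_peak / peak
    return [s * gain for s in samples]


# Toy signal: quiet, loud, and very loud samples.
raw = [0.2, 0.9, -1.0]
mastered = normalize_peak(compress(raw))
```

Compression narrows the gap between quiet and loud passages, and normalization then raises the overall level without clipping.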

AI tools like AIVA or Soundful allow musicians to turn a short melody into a complete arrangement in minutes, which makes production more intuitive. SDLC’s The Role of Sound Design and Music in Slot Game Engagement makes a related point about the emotional impact of audio design on user experience.

These tools democratize creativity by reducing the need for costly studios. They enhance productivity and innovation, proving that AI in the music industry is not about replacing musicians but helping them work smarter.

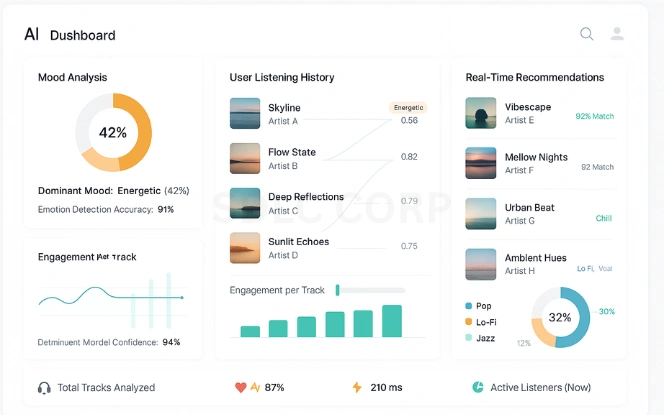

AI Music Analytics

AI music analytics turns large volumes of data into actionable insights. It helps artists and record labels understand their audience and make data-driven decisions about production and marketing.

What It Tracks

Stream counts, skips, and replays.

Listener demographics and locations.

Playlist additions and removals.

Social media engagement.

Machine learning models such as regression and clustering identify trends and preferences. For instance, they can predict which sounds keep listeners engaged or which release times perform best.
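As a rough illustration of the regression idea, the sketch below fits a least-squares line to weekly stream counts per genre and flags the genres whose counts are trending upward. The genre names and numbers are made-up sample data, and `trend_slope` and `rising_genres` are hypothetical helper names, not part of any analytics product.

```python
def trend_slope(counts):
    """Least-squares slope of `counts` against the week index 0, 1, 2, ..."""
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two data points")
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(counts))
    denominator = sum((x - mean_x) ** 2 for x in range(n))
    return numerator / denominator


def rising_genres(weekly_streams):
    """Return genres with a positive slope, steepest growth first."""
    slopes = {genre: trend_slope(c) for genre, c in weekly_streams.items()}
    rising = [genre for genre, slope in slopes.items() if slope > 0]
    return sorted(rising, key=lambda genre: slopes[genre], reverse=True)


# Hypothetical weekly stream counts (in thousands) for two genres.
weekly_streams = {
    "lo-fi": [100, 120, 150, 190],
    "edm": [300, 290, 280, 275],
}
print(rising_genres(weekly_streams))
```

A production system would work with far more signals (skips, replays, playlist additions), but the principle of fitting a model to past behavior and reading off the direction is the same.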

The SDLC Corp blog Video Game Music: Why It’s More Important Than You Think provides a great example of how analytics-driven insights shape emotional connection and engagement through sound.

When decisions rest on accurate data, marketing becomes more targeted. AI music analytics reduces the risk behind release and promotion choices and helps artists connect more deeply with their fans.

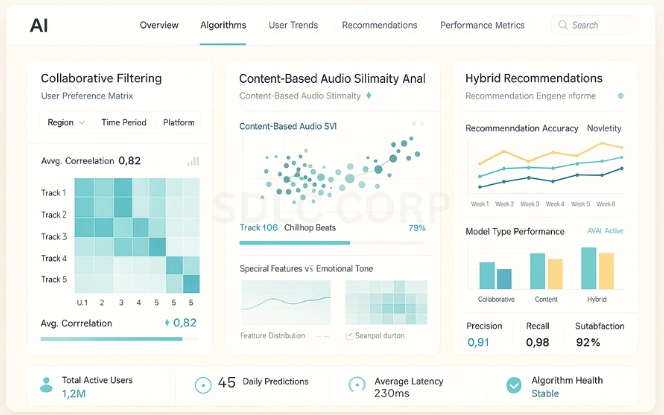

Machine Learning for Music Streaming

Machine learning for music streaming is what drives the personalized playlists on platforms like Spotify and Apple Music.

Techniques Used

Collaborative Filtering: Suggests songs liked by users with similar tastes.

Content-Based Filtering: Recommends tracks with similar characteristics such as tempo or tone.

Hybrid Models: Combine both methods for better accuracy.

Deep Embeddings: Map users and tracks into a shared vector space learned from listening patterns, so that nearby points indicate similar taste.

Each listener interaction, whether skipping or replaying, helps the system learn and refine its recommendations. Over time, it delivers playlists that feel personalized and intuitive.
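The collaborative filtering technique listed above can be sketched in a few lines. This is a simplified user-based variant that assumes play counts are the only signal; the user names, track IDs, and the `recommend` helper are hypothetical, and real streaming services use much larger and more elaborate models.

```python
from math import sqrt


def cosine(a, b):
    """Cosine similarity between two {track: play_count} dictionaries."""
    shared = set(a) & set(b)
    dot = sum(a[t] * b[t] for t in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def recommend(user, plays, k=3):
    """Suggest up to k unheard tracks, weighted by how similar other users are."""
    scores = {}
    for other, history in plays.items():
        if other == user:
            continue
        similarity = cosine(plays[user], history)
        if similarity <= 0:
            continue  # ignore users with no overlap in taste
        for track, count in history.items():
            if track not in plays[user]:
                scores[track] = scores.get(track, 0.0) + similarity * count
    # Highest score first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda t: (-scores[t], t))[:k]


# Hypothetical play counts per user.
plays = {
    "ana": {"t1": 5, "t2": 3},
    "ben": {"t1": 4, "t2": 2, "t3": 6},
    "cy": {"t4": 7},
}
print(recommend("ana", plays))
```

Here "ana" and "ben" share two tracks, so the track only "ben" has played is suggested to "ana", while "cy" has no overlap and contributes nothing.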

SDLC’s Sound Design and Audio Integration in Unreal Engine Games discusses a related idea: adaptive audio that enhances immersion, much as AI personalization does in music streaming.

When applied responsibly, machine learning for music streaming improves user satisfaction and helps artists reach the right audiences.

AI-Driven Music Recommendation

AI-driven music recommendation systems analyze user behavior to predict which songs people will enjoy next.

How It Works

Collects listening history and feedback.

Scores potential tracks based on similarity.

Updates suggestions in real time.
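The three steps above can be sketched as a small content-based recommender. It assumes each track is described by a few numeric features scaled to the range 0 to 1 (for example tempo and energy) and keeps a running "taste" vector per listener. The class name, the learning rate, and the feature values are illustrative assumptions only.

```python
from math import sqrt


class ListenerProfile:
    """Hypothetical running taste vector, updated after every interaction."""

    def __init__(self, n_features, learning_rate=0.2):
        self.taste = [0.0] * n_features
        self.learning_rate = learning_rate

    def record(self, features, liked):
        """Step 1: collect feedback. Move toward liked tracks, away from skips."""
        sign = 1.0 if liked else -1.0
        self.taste = [
            t + sign * self.learning_rate * (f - t)
            for t, f in zip(self.taste, features)
        ]

    def score(self, features):
        """Step 2: similarity as negative Euclidean distance to the taste vector."""
        return -sqrt(sum((t - f) ** 2 for t, f in zip(self.taste, features)))

    def rank(self, catalog):
        """Step 3: re-rank the catalog using the latest taste vector."""
        return sorted(catalog, key=lambda name: self.score(catalog[name]), reverse=True)


# Hypothetical catalog with [tempo, calmness] features.
catalog = {"calm": [0.0, 1.0], "upbeat": [1.0, 0.1]}
profile = ListenerProfile(n_features=2)
profile.record([1.0, 0.0], liked=True)  # the listener replays a fast track
print(profile.rank(catalog))
```

Because the profile changes with every call to `record`, the next call to `rank` already reflects the newest feedback, which is the sense in which suggestions update in real time.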

Key Metrics

Engagement Rate: How often users interact with songs.

Retention: How long they stay active on the platform.

Diversity: The balance between familiar and new tracks.
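One possible way to compute these three metrics is shown below. The exact definitions vary between platforms, so the formulas here are assumptions chosen for illustration: engagement as interactions per play, retention as the share of last period's active users who are still active, and diversity as the share of recommended tracks the listener has not heard before.

```python
def engagement_rate(interactions, plays):
    """Interactions (likes, saves, replays) per play; 0.0 when there are no plays."""
    return interactions / plays if plays else 0.0


def retention(previous_active, current_active):
    """Share of previously active users who are still active now."""
    previous = set(previous_active)
    if not previous:
        return 0.0
    return len(previous & set(current_active)) / len(previous)


def new_track_share(recommended, already_heard):
    """Share of recommended tracks that are new to the listener."""
    if not recommended:
        return 0.0
    heard = set(already_heard)
    return sum(1 for track in recommended if track not in heard) / len(recommended)


# Hypothetical numbers for one reporting period.
print(engagement_rate(interactions=30, plays=120))
print(retention({"a", "b", "c", "d"}, {"a", "b", "x"}))
print(new_track_share(["t1", "t2", "t3", "t4"], {"t1"}))
```

Tracking the new-track share alongside engagement helps show whether a recommender is only repeating favorites or is also surfacing unfamiliar music.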

AI models personalize playlists that evolve with listener behavior. The same adaptive thinking behind SDLC’s The Impact of Audio Design on the Cozy Gaming Experience applies here: matching mood and tone enhances emotional engagement.

As a result, AI-driven music recommendation blends data and creativity to keep music discovery exciting and dynamic.

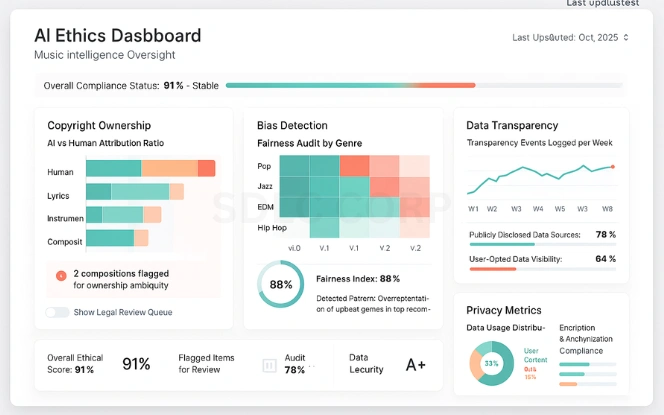

Ethical Issues in AI Music

As technology grows, ethical issues in AI music are becoming increasingly important. Innovation must be balanced with fairness, ownership, and transparency.

Main Concerns

Copyright Ownership: Determining who owns AI-generated compositions.

Transparency: Listeners should know when AI contributes.

Bias: Algorithms must promote diversity, not favoritism.

Data Privacy: Listener information must stay protected.

Job Impact: Automation may shift roles for sound engineers and composers.

The SDLC article The Role of Sound Design in Crafting Immersive Gaming Worlds also highlights the balance between creative innovation and ethical responsibility, a principle that equally applies to AI in music.

Responsible design ensures AI enhances rather than exploits creativity. Ethical frameworks around data and authorship help build trust among creators and audiences.

Integration and Workflow

Integrating AI across the music workflow makes production faster and more efficient.

Typical Workflow

Record raw audio.

Apply AI music production tools for editing and mastering.

Use AI music analytics to identify audience preferences.

Employ machine learning for music streaming to promote releases.

Analyze results and refine future projects.

Much like SDLC’s Essential Game Development Tools You Need in Your Arsenal, which describes streamlining creative processes with technology, AI tools in music shorten production cycles while improving sound quality.

By merging creativity with analytics, AI in the music industry creates smarter workflows that deliver professional results faster.

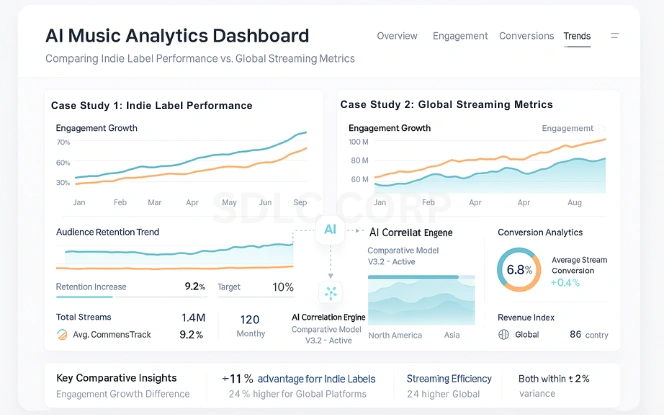

Case Studies

Indie Label Example

A small independent label used AI music analytics to identify rising lo-fi trends. Within six months, they increased their audience reach by 30 percent through targeted remixes.

Streaming Platform Example

A global streaming service implemented machine learning for music streaming and AI-driven music recommendation systems. After the service improved algorithm fairness, listener engagement grew by 15 percent.

These examples show how AI in the music industry drives measurable results by combining data and creativity responsibly. SDLC’s Video Game Music: Why It’s More Important Than You Think demonstrates how thoughtful use of sound and data leads to stronger audience connection.

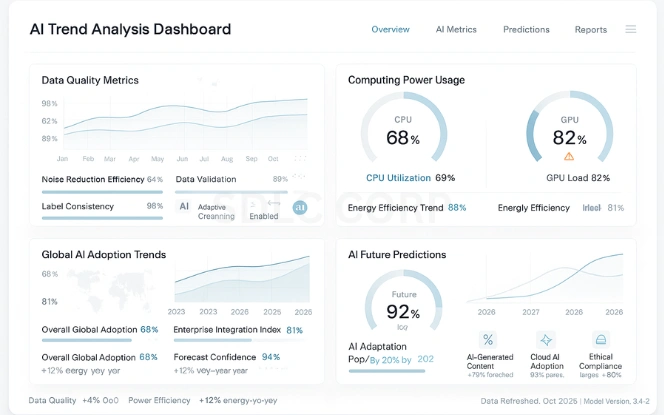

Challenges and Future Trends

Despite progress, challenges remain for widespread AI adoption in music.

Key Challenges

Data Quality: Poor metadata reduces model accuracy.

Computing Power: AI training demands significant resources.

Adoption Resistance: Some artists fear losing creative control.

Emerging Trends

Real-Time Adaptive Music: Sound that reacts to listener emotions.

AI Co-Composers: Tools that assist rather than replace creators.

Explainable AI: Transparent systems that show why recommendations are made.

Looking ahead, AI in the music industry will evolve through collaboration between technology and creativity. The article Blockchain in Film and Music Industries for Decentralizing Content explores a related theme: how digital transparency will shape future innovation in entertainment.

Conclusion

Artificial intelligence is transforming the music industry by enhancing creativity, efficiency, and audience engagement. It streamlines production, improves sound quality, and delivers data-driven insights that help artists and producers make smarter decisions. Through predictive analytics and ethical frameworks, AI empowers creators to innovate while maintaining authenticity.

Integrating AI into music production and streaming isn’t just advantageous; it’s becoming essential for building sustainable, personalized, and impactful listening experiences.

Contact us at SDLC Corp to explore how AI-driven solutions can elevate your creative process. Hire AI Development Services with SDLC Corp and transform your music innovation strategy today.

FAQs

How Is AI in the Music Industry Transforming Music Creation?

AI in the music industry allows musicians to compose, mix, and master faster using tools like AIVA and Amper Music. By combining creativity and data, AI helps artists explore new soundscapes while staying true to their style.

What Are the Best AI Music Production Tools Used by Professionals?

Popular AI music production tools include AIVA, Soundful, and Amper Music. These platforms help artists generate melodies, harmonies, and arrangements efficiently, improving workflow without losing the human touch.

How Does Machine Learning for Music Streaming Improve Listener Experience?

Machine learning for music streaming powers AI-driven music recommendation systems that tailor playlists to each user. This technology learns from mood, behavior, and time of day, providing highly personalized listening experiences.

What Are the Main Ethical Issues in AI Music Creation?

Ethical issues in AI music involve ownership, transparency, and data privacy. Responsible development ensures algorithms remain fair, transparent, and respectful of creative rights.

How Do AI-Driven Music Recommendation Systems Work in Streaming Platforms?

AI-driven music recommendation systems use behavioral data and pattern recognition to suggest new songs and artists. This creates diverse, engaging playlists tailored to every listener’s preferences.

What Does the Future Hold for AI in the Music Industry?

The future of AI in the music industry looks promising, with AI music analytics and machine learning for music streaming driving real-time personalization. As technology advances, ethics and creativity will continue to guide innovation.