Introduction

Pre-trained AI models such as GPT, BERT, and Stable Diffusion have reshaped many industries, but out of the box they are general-purpose. They cover a broad range of topics yet often fall short on domain-specific accuracy.

This is where fine-tuning comes in. Fine-tuning lets developers customize pre-trained models for their own data, tasks, and objectives. Whether you want a chatbot that speaks your brand’s language or a model that detects fraud patterns in financial data, fine-tuning can help.

In this guide, we’ll explore what fine-tuning is, how it works, best practices, and future trends so you can harness it effectively for your projects.

What is Fine-Tuning?

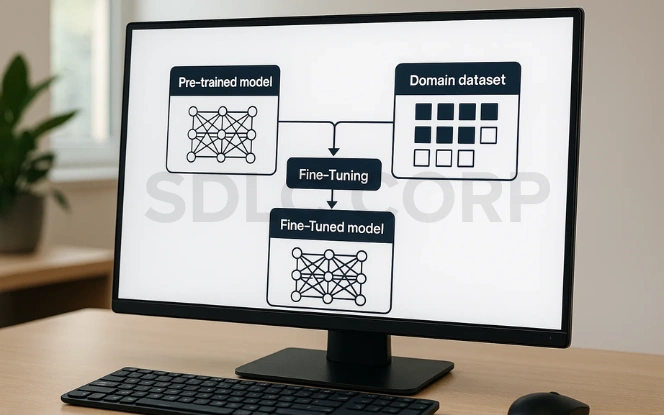

Fine-tuning is the process of adapting a pre-trained AI model (one already trained on massive, general-purpose datasets) to perform better on a specific task or domain by training it further on a smaller, specialized dataset. Compared with training from scratch, this typically yields higher accuracy on the target task, domain-specific behavior, and faster deployment. Fine-tuning is widely used in Natural Language Processing (NLP), computer vision, and multimodal AI systems.

How Fine-Tuning Works

- Pre-trained model:

  - Starts with a model trained on vast data (e.g., GPT-4 trained on web-scale content).

  - Has general knowledge but may lack domain-specific understanding.

- Domain dataset:

  - Smaller, curated, and task-specific (e.g., medical records, legal documents, customer service chats).

  - Helps the model specialize in niche areas.

- Fine-tuning process:

  - Retrains the model partially or fully on the new dataset.

  - Adjusts weights and biases to refine predictions or responses.

  - Balances between retaining general knowledge and achieving task-specific performance.
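To make the three stages above concrete, here is a deliberately tiny sketch in plain Python. It is not how large models are trained in practice: the "model" is a single linear function, and the datasets, learning rates, and epoch counts are all made-up assumptions chosen for illustration. What it does show is the core idea: start from weights learned on broad data, then continue training on a small domain dataset with a gentler learning rate.

```python
import random

def train(weights, data, lr, epochs):
    """Run plain gradient descent on mean squared error for y = w*x + b."""
    w, b = weights
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y
            grad_w += 2 * err * x / len(data)
            grad_b += 2 * err / len(data)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(weights, data):
    """Mean squared error of the linear model on a dataset."""
    w, b = weights
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(0)

# Hypothetical "general" data: large and broad, following y = 2x + 1.
general = [(x, 2.0 * x + 1.0) for x in (rng.uniform(-1, 1) for _ in range(500))]

# Hypothetical "domain" data: small, with a slightly different relationship.
domain = [(x, 2.5 * x + 0.5) for x in (rng.uniform(-1, 1) for _ in range(20))]

# Stage 1: "pre-training" from scratch on the general data.
pretrained = train((0.0, 0.0), general, lr=0.1, epochs=200)

# Stage 2: fine-tuning starts from the pre-trained weights, not from zero,
# and uses a smaller learning rate and fewer epochs.
fine_tuned = train(pretrained, domain, lr=0.05, epochs=50)

print("domain error before fine-tuning:", mse(pretrained, domain))
print("domain error after fine-tuning: ", mse(fine_tuned, domain))
print("general error after fine-tuning:", mse(fine_tuned, general))
```

The last line illustrates the balancing act from the list above: as the model fits the domain data, its error on the general data rises. Fewer epochs or a lower learning rate keep it closer to the pre-trained weights.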

Types of Fine-Tuning

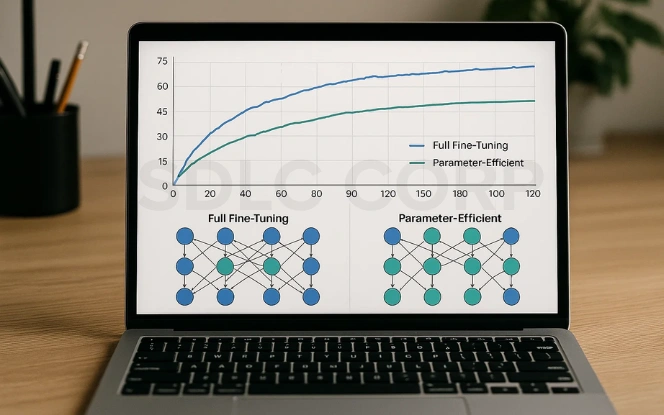

Full Fine-Tuning:

- All model parameters are updated.

- Pros: Maximum performance improvement for specific tasks.

- Cons: Requires high computational resources and large labeled datasets.

Parameter-Efficient Fine-Tuning:

- Only a subset of parameters is updated.

- Techniques: LoRA (Low-Rank Adaptation), Adapters, Prefix Tuning.

- Pros: Faster, cheaper, and resource-friendly.

- Cons: Slightly less task-specific customization compared to full tuning.
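A quick calculation shows why parameter-efficient methods are cheaper. LoRA freezes a weight matrix of shape `d_out x d_in` and trains two small matrices of shapes `d_out x r` and `r x d_in` instead. The sketch below compares the trainable parameter counts; the 4096 x 4096 layer size and rank 8 are illustrative assumptions, not values from any particular model.

```python
def full_params(d_out, d_in):
    """Trainable parameters when the whole weight matrix is updated."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Trainable parameters for LoRA: B is d_out x rank, A is rank x d_in."""
    return rank * (d_out + d_in)

# Illustrative (assumed) layer size and rank.
d_out, d_in, rank = 4096, 4096, 8

full = full_params(d_out, d_in)
lora = lora_params(d_out, d_in, rank)

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"LoRA (rank {rank}):   {lora:,} trainable parameters")
print(f"LoRA trains {lora / full:.2%} of the layer's parameters")
```

For this layer, LoRA trains 65,536 parameters instead of 16,777,216, which is where most of the memory and compute savings come from.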

Additional Key Points

- Fine-tuning bridges the gap between general AI models and industry-ready solutions.

- It is essential for brand-specific tone, regulatory compliance, and specialized knowledge integration.

- Can be applied to text, images, audio, or multimodal AI models.

- Ongoing fine-tuning is often required to keep models updated with changing trends or new data.

Why Fine-Tuning Matters

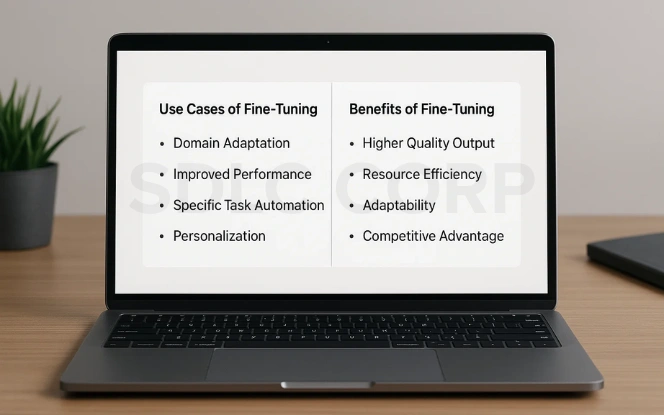

Fine-tuning is more than a performance upgrade; it turns general-purpose AI models into highly specialized solutions tailored for unique business needs. Instead of starting from scratch, fine-tuning leverages the power of pre-trained models and aligns them with your organization’s goals, tone, and ethical standards. It allows businesses to achieve precision, cost-effectiveness, and faster deployment while maintaining flexibility to adapt to evolving requirements.

Benefits of Fine-Tuning

- Higher accuracy: Models become task-specific, improving their performance in niche domains.

- Cost-efficiency: Avoids the huge expenses of building and training models from the ground up.

- Time-saving: Speeds up development and deployment compared to full-scale model training.

- Better alignment: Ensures AI models follow brand tone, compliance rules, and industry regulations.

- Reduced data requirement: Needs less training data compared to training a model from scratch.

- Scalability: Easily adapts models for multiple tasks within the same organization.

- Continuous improvement: Supports iterative updates to keep models relevant as business needs evolve.

Use Cases of Fine-Tuning

Healthcare:

- Disease prediction from medical records.

- AI assistants for patient care.

- Clinical document summarization.

Finance:

- Detecting and preventing fraudulent transactions.

- Risk analysis and predictive modeling.

- Automating financial document analysis.

E-commerce:

- Personalized product recommendations.

- AI-powered virtual shopping assistants.

- Sentiment analysis for customer feedback.

NLP (Natural Language Processing):

- Chatbots tuned to specific industries (e.g., legal, education).

- Automatic summarization of lengthy documents.

- Translation models trained for regional dialects or technical language.

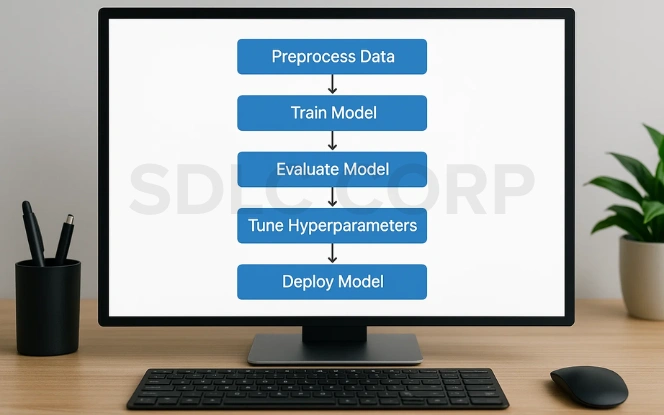

Step-by-Step Fine-Tuning Process

Fine-tuning may sound complex, but it can be broken down into structured steps. This process helps you adapt pre-trained AI models efficiently to meet your specific goals.

1. Choose the Right Pre-Trained Model

The starting point is selecting a foundation model that fits your use case.

- Language models: GPT, BERT, LLaMA (for NLP tasks like chatbots, summarization, classification).

- Vision models: CLIP, ResNet (for image recognition, computer vision tasks).

- Multimodal models: LLaVA, Flamingo (for tasks involving both text and images).

2. Prepare Your Data

Your data is the fuel for successful fine-tuning.

- Collect domain-specific datasets: For example, medical records for healthcare AI or legal documents for law-focused chatbots.

- Clean & preprocess: Remove duplicates, standardize formatting, and normalize data.

- Annotate if necessary: Add proper labeling for supervised tasks (e.g., tagging for classification problems).
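As a rough sketch of the cleaning step, the snippet below normalizes whitespace, drops empty and duplicate records, and holds out a validation split. The support-ticket examples, the label names, and the 20% validation fraction are all hypothetical; real pipelines usually need domain-specific cleaning on top of this.

```python
import random
import re

def normalize(text):
    """Collapse runs of whitespace (including newlines) and strip the ends."""
    return re.sub(r"\s+", " ", text).strip()

def prepare(records, val_fraction=0.2, seed=0):
    """Normalize text, drop empties and case-insensitive duplicates,
    then split into train and validation sets."""
    seen = set()
    cleaned = []
    for text, label in records:
        text = normalize(text)
        key = (text.lower(), label)
        if not text or key in seen:
            continue
        seen.add(key)
        cleaned.append({"text": text, "label": label})
    # Shuffle with a fixed seed so the split is reproducible.
    random.Random(seed).shuffle(cleaned)
    n_val = max(1, int(len(cleaned) * val_fraction))
    return cleaned[n_val:], cleaned[:n_val]

# Hypothetical raw customer-support records: (text, label).
raw = [
    ("Refund   not received ", "billing"),
    ("refund not received", "billing"),      # duplicate after normalization
    ("App crashes on login", "bug"),
    ("", "bug"),                             # empty record
    ("How do I change my plan?", "billing"),
    ("App crashes\non login", "bug"),        # duplicate after normalization
    ("Cannot reset password", "account"),
]

train_set, val_set = prepare(raw)
print(len(train_set), "training examples,", len(val_set), "validation examples")
```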

3. Set Up Your Environment

Establish a robust training environment.

- Frameworks: Use PyTorch, TensorFlow, or Hugging Face Transformers for flexibility and scalability.

- Hardware: Leverage GPUs/TPUs for faster processing.

- Cloud platforms: Utilize AWS SageMaker, Google Vertex AI, or Azure ML for large-scale experiments without heavy on-premise investments.

4. Configure Hyperparameters

Hyperparameters control how your model learns.

- Learning rate: Adjust how quickly the model updates its weights.

- Batch size: Choose the number of samples processed before the model updates.

- Epochs: Set the number of full passes through the training data.
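These three settings interact: together with the dataset size, batch size and epochs determine how many weight updates the model receives. The sketch below captures them in a small config object. The class name and the default values are assumptions for illustration only, not recommendations for any specific model.

```python
import math
from dataclasses import dataclass

@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container for the three hyperparameters above."""
    learning_rate: float = 2e-5   # size of each weight update
    batch_size: int = 16          # samples processed per update
    epochs: int = 3               # full passes over the training data

    def steps_per_epoch(self, num_examples):
        # The last batch may be smaller, so round up.
        return math.ceil(num_examples / self.batch_size)

    def total_steps(self, num_examples):
        return self.steps_per_epoch(num_examples) * self.epochs

config = TrainConfig()
total = config.total_steps(num_examples=5000)
print(f"{total} optimizer steps for 5,000 examples")
```

With 5,000 examples, a batch size of 16 gives 313 steps per epoch and 939 steps over 3 epochs. Doubling the batch size roughly halves the number of updates, which is one reason learning rate and batch size are usually tuned together.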

5. Train & Validate

This is where the model adapts to your data.

- Training: Retrain the pre-trained model on your custom dataset.

- Validation: Evaluate on a separate validation set to detect overfitting and to guide further hyperparameter adjustments.

- Evaluation: Track metrics like accuracy, F1-score, or loss reduction to measure success.
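Two of the ideas above are easy to sketch without any ML library: computing an F1-score from predictions, and using validation loss to decide when to stop training (early stopping). The function names, the `patience` value, and the sample numbers below are illustrative assumptions; in practice you would likely use your framework's built-in metrics and callbacks.

```python
def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the best epoch seen before training would stop.

    Training stops once validation loss has failed to improve for
    `patience` consecutive epochs."""
    best_epoch, best_loss = 0, float("inf")
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss
        elif epoch - best_epoch >= patience:
            break
    return best_epoch

# Made-up labels and predictions for a binary task.
y_true = [1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 1]
print("F1:", f1_score(y_true, y_pred))

# Made-up validation losses: improving at first, then rising (overfitting).
val_losses = [0.90, 0.70, 0.62, 0.65, 0.69, 0.60]
print("keep checkpoint from epoch index:", early_stopping_epoch(val_losses))
```

Note that early stopping never sees the final 0.60 in this example, because training halts after two epochs without improvement. That trade-off is controlled by `patience`.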

6. Deploy & Monitor

Bring your fine-tuned model into the real world.

- Deployment: Integrate it into applications, APIs, or services.

- Monitoring: Continuously observe performance to detect model drift or reduced accuracy.

- Retraining: Periodically update the model with new data to keep it relevant.
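One simple way to monitor for drift, assuming you can eventually label a sample of production predictions as correct or incorrect, is to compare rolling accuracy against the accuracy measured at deployment time. The class below is a minimal sketch; its name, window size, and tolerance are hypothetical choices, and production systems often track input distributions as well.

```python
from collections import deque

class DriftMonitor:
    """Flags a model for retraining when rolling accuracy drops too far
    below the baseline measured at deployment."""

    def __init__(self, baseline_accuracy, window=100, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # keeps only the latest results

    def record(self, correct):
        self.outcomes.append(1 if correct else 0)

    def rolling_accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Wait until the window is full before judging.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance

monitor = DriftMonitor(baseline_accuracy=0.90, window=10, tolerance=0.05)

# Healthy period: 9 of the last 10 predictions are correct.
for correct in [True] * 9 + [False]:
    monitor.record(correct)
healthy_flag = monitor.needs_retraining()

# Drift: three more wrong predictions push older correct ones out of the window.
for _ in range(3):
    monitor.record(False)
drift_flag = monitor.needs_retraining()

print("healthy period flagged:", healthy_flag)
print("after drift flagged:   ", drift_flag)
```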

Challenges in Fine-Tuning

| Challenge | Impact (1-10) | Difficulty to Mitigate (1-10) |
|---|---|---|
| Data Scarcity | 8 | 7 |
| Overfitting | 7 | 6 |
| Resource Costs | 9 | 9 |
| Bias | 8 | 8 |

Popular Tools & Frameworks for Fine-Tuning AI Models

Fine-tuning AI models becomes faster, more cost-efficient, and highly scalable when the right tools are used. From open-source libraries to enterprise-ready platforms, these solutions help researchers, developers, and businesses adapt pre-trained models for domain-specific needs with minimal effort.

1. Hugging Face Transformers

- Industry-leading open-source library for NLP, vision, and multimodal fine-tuning.

- Offers pre-trained models (BERT, GPT, T5, CLIP) ready for customization.

- Integrates with the Hugging Face PEFT library for LoRA, adapters, and other parameter-efficient methods.

- Best for: Quick experimentation and production-ready workflows.

2. PyTorch Lightning

- Simplifies complex training workflows with modular, clean code.

- Eases scaling from research to production environments.

- Ideal for fine-tuning large deep learning models efficiently.

- Best for: Teams needing structured, production-oriented setups.

3. Cloud Platforms (AWS SageMaker, Azure ML, Google Vertex AI)

- Provide fully managed environments for training and deployment.

- Support distributed training for handling large models at scale.

- Offer enterprise-grade monitoring, security, and compliance.

- Best for: Large-scale, high-security enterprise projects.

4. LoRA & PEFT Libraries

- Implement Parameter-Efficient Fine-Tuning (PEFT) methods such as Low-Rank Adaptation (LoRA).

- Significantly reduce compute and memory costs.

- Hugging Face PEFT library makes integration easy and production-ready.

- Best for: Cost-conscious projects with limited hardware resources.

5. Additional Tools

- Weights & Biases (W&B): Track experiments and model performance.

- Fast.ai: High-level API for simpler and faster fine-tuning.

- DeepSpeed: Optimizes large-scale training and reduces resource needs.

Best Practices for Fine-Tuning AI Models

| Best Practice | Purpose | Key Takeaway |
|---|---|---|
| Start small | Experiment with lightweight models first | Helps reduce cost & complexity during initial trials |
| Use parameter-efficient techniques | Apply LoRA, adapters, and quantization | Lowers computational needs & speeds up fine-tuning |
| Monitor regularly | Track performance & detect model drift | Ensures model stays accurate & reliable over time |
| Ethics first | Review outputs for bias & compliance | Promotes fairness, trustworthiness & regulatory adherence |

Future of Fine-Tuning

Fine-tuning is evolving rapidly, making AI customization more accessible, automated, and efficient. Here’s what the future holds:

1. No-Code Fine-Tuning

- Platforms are emerging that let users fine-tune models without writing code.

- Drag-and-drop interfaces and guided workflows are democratizing AI development.

- Opens up AI customization to non-developers, small businesses, and domain experts.

- Speeds up deployment for industries without large tech teams.

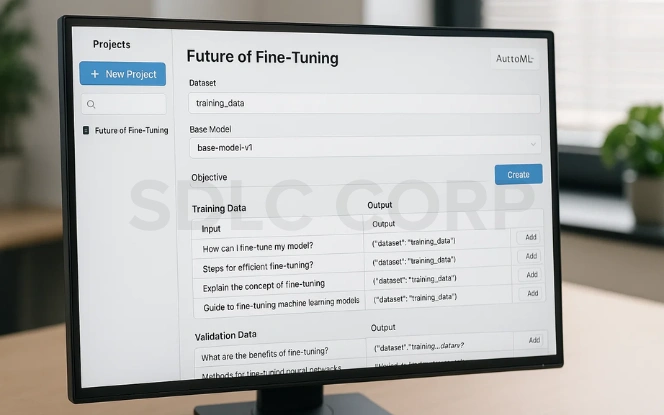

2. AutoML-Driven Fine-Tuning

- Automated Machine Learning (AutoML) tools will handle data preprocessing, hyperparameter tuning, and retraining with minimal manual effort.

- Reduces the need for specialized ML engineers for every project.

- Makes continuous learning pipelines possible, keeping models up to date as data changes.
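At its simplest, automated hyperparameter tuning is a search loop: try a set of configurations, score each on validation data, and keep the best. The sketch below shows an exhaustive grid search. The scoring function is a toy stand-in (an assumption, not a real training run) so the example stays runnable; real AutoML tools use more sophisticated search strategies and actually fine-tune a model for each trial.

```python
import itertools

def grid_search(evaluate, grid):
    """Try every combination in `grid` and return the best-scoring one."""
    best_score, best_params = float("-inf"), None
    keys = list(grid)
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = evaluate(params)
        if score > best_score:
            best_score, best_params = score, params
    return best_params, best_score

def toy_validation_score(params):
    # Hypothetical stand-in for "fine-tune with these settings, then
    # score on the validation set". It simply peaks at lr=3e-5, batch=16.
    lr_penalty = abs(params["learning_rate"] - 3e-5) * 1e4
    batch_penalty = abs(params["batch_size"] - 16) / 100
    return -lr_penalty - batch_penalty

# Illustrative (assumed) search space.
grid = {
    "learning_rate": [1e-5, 3e-5, 5e-5],
    "batch_size": [8, 16, 32],
}

best_params, best_score = grid_search(toy_validation_score, grid)
print("best configuration:", best_params)
```

Grid search costs one full training run per combination, so the number of trials grows quickly as you add hyperparameters. That cost is a large part of what automated tools try to reduce.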

3. Edge-Friendly Fine-Tuning

- Development of lightweight fine-tuning techniques for mobile, IoT, and edge devices.

- Ensures models can adapt quickly without depending on cloud infrastructure.

- Enables real-time, low-latency AI in applications like smart wearables, autonomous vehicles, and on-device assistants.

4. Scaling Fine-Tuned Models in Production

As fine-tuned models are deployed across multiple applications, maintaining consistency becomes more complex. Enterprise environments often require standardized workflows and version control. Practices used by an enterprise AI development company can help inform strategies for managing fine-tuned models at scale while maintaining reliability and traceability.

Evaluation, Governance, and Risk Management

Fine-tuned models must be evaluated for bias, overfitting, and unintended behavior. Governance frameworks help ensure models remain aligned with intended use and regulatory expectations. An AI consulting company may assist organizations in defining evaluation criteria, audit processes, and risk mitigation strategies related to fine-tuning.

Conclusion

Fine-tuning is the bridge between general AI and customized, high-performance solutions. It transforms pre-trained models into tools that understand your industry, data, and goals. Whether you’re a startup optimizing a chatbot for better customer engagement or an enterprise building predictive analytics to drive smarter decisions, fine-tuning is your shortcut to powerful, domain-specific AI.

Explore how SDLC Corp can help you fine-tune models for your unique use cases and take your AI strategy to the next level.

FAQs

1. What is fine-tuning in AI?

Fine-tuning is the process of adapting a pre-trained AI model to perform better on a specific task or domain by training it on smaller, domain-specific datasets. This helps achieve higher accuracy and relevance without building models from scratch.

2. How much data do I need for fine-tuning?

The amount of data depends on the model size and complexity of the task. Parameter-efficient fine-tuning (like LoRA) can work well with a few thousand labeled examples, while full-model fine-tuning often requires larger datasets.

3. What are the benefits of fine-tuning over training from scratch?

Fine-tuning is faster, cheaper, and more efficient than building a model from scratch. It leverages pre-trained knowledge, reducing computation needs and accelerating deployment while improving task-specific performance.

4. Can fine-tuning cause overfitting?

Yes, especially when using small datasets. To reduce the risk, monitor performance on a validation set, apply regularization techniques, and consider parameter-efficient methods like adapters or LoRA, which update far fewer parameters and can make overfitting less likely.

5. What industries benefit the most from fine-tuning?

Fine-tuning is widely used in healthcare (diagnosis prediction), finance (fraud detection), e-commerce (recommendation systems), and NLP applications like chatbots, summarizers, and translators tailored for specific domains.