Introduction

In recent years, Graph Neural Networks (GNNs) have emerged as one of the most powerful tools in graph-based machine learning. Unlike traditional neural networks that process data in grids (like images) or sequences (like text), GNNs are designed to handle data represented as graphs: structures made up of nodes (entities) and edges (relationships).

This capability makes GNNs well suited to applications in social network analysis, recommendation engines, fraud detection, and drug discovery, and an increasingly common component of modern AI development services. Simply put, they bring deep learning to graphs.

What Are Graph Neural Networks?

Graph Neural Networks (GNNs) are a class of deep learning models designed for non-Euclidean data, meaning data that doesn’t fit into regular grids (like images) or ordered sequences (like text). Instead of treating data points in isolation, GNNs work with nodes (data entities) and edges (their relationships).

This makes them ideal for problems where connections between data points matter as much as the points themselves. For example, a user’s value in a social network depends not only on their profile but also on their connections.

Key applications of GNNs include:

- Social Networks – Friend recommendations, community detection, fake account identification.

- Recommendation Systems – Ranking items using user-item interaction graphs.

- Drug Discovery – Analyzing molecular structures for property prediction.

- Fraud Detection – Spotting anomalies in transaction networks.

By aggregating information from connected nodes, GNNs create richer, context-aware representations, which often makes them a better fit than traditional neural networks for these tasks.

In short, GNNs are a cornerstone of graph-based machine learning, enabling smarter and more effective modeling of real-world, interconnected data.

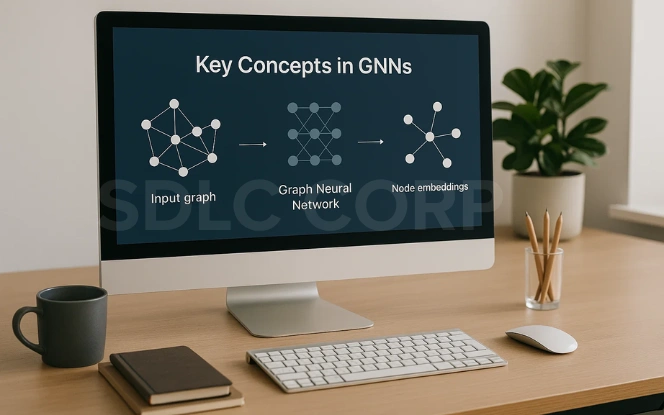

Key Concepts in GNNs

Understanding Graph Neural Networks (GNNs) requires familiarity with a few fundamental ideas. GNNs are designed to work on graph-structured data, where entities (nodes) are connected through relationships (edges). They use a process called message passing, where each node gathers and updates its information based on its neighbors, allowing the network to capture complex patterns.

To understand how graph deep learning works, it’s important to know a few core concepts:

- Graph Structure – A graph consists of nodes (data points) and edges (connections), representing relationships between entities.

- Message Passing – In GNNs, each node aggregates information from its neighbors, refining its representation layer by layer.

- Graph Embeddings – Through graph representation learning, nodes and edges are converted into vector spaces, enabling the model to make predictions effectively.
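As a rough illustration of the concepts above, a graph can be stored as an edge list plus one feature vector per node. This is only a minimal sketch: the four-node graph, the feature values, and the names `edges`, `features`, `build_adjacency`, and `mean_neighbor_features` are made up for illustration and do not come from any GNN library.

```python
from collections import defaultdict

# Hypothetical toy graph: 4 nodes (0..3), undirected edges given as pairs.
edges = [(0, 1), (0, 2), (1, 2), (2, 3)]

# One feature vector per node (made-up values standing in for, e.g., profile data).
features = {
    0: [1.0, 0.0],
    1: [0.0, 1.0],
    2: [1.0, 1.0],
    3: [0.5, 0.5],
}


def build_adjacency(edge_list):
    """Turn an undirected edge list into a node -> sorted neighbor list mapping."""
    neighbors = defaultdict(set)
    for u, v in edge_list:
        neighbors[u].add(v)
        neighbors[v].add(u)
    return {node: sorted(nbrs) for node, nbrs in neighbors.items()}


adjacency = build_adjacency(edges)


def mean_neighbor_features(node):
    """One round of message passing for one node: average its neighbors' features."""
    nbrs = adjacency[node]
    dim = len(features[node])
    return [sum(features[n][d] for n in nbrs) / len(nbrs) for d in range(dim)]


print(adjacency[2])               # [0, 1, 3]
print(mean_neighbor_features(0))  # [0.5, 1.0]
```

Here the averaged vector plays the role of a very simple, untrained embedding: it describes node 0 through its neighbors rather than through its own features alone.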

Popular GNN architectures include Graph Convolutional Networks (GCNs), which aggregate neighbor information using fixed weights derived from node degrees, and Graph Attention Networks (GATs), which use attention mechanisms to learn how much weight each connection should receive.
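The difference between the two families can be sketched by comparing the weights each one places on a node's neighbors. The NumPy code below is an illustrative assumption, not either architecture's reference implementation: `gcn_weights` applies the symmetric normalization commonly associated with GCN layers, while `attention_weights` applies a softmax over made-up scores. In a real GAT those scores are computed from learned parameters and node features.

```python
import numpy as np

# Hypothetical toy graph: node 0 is connected to nodes 1, 2 and 3.
A = np.array([
    [0, 1, 1, 1],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
    [1, 0, 0, 0],
], dtype=float)


def gcn_weights(adj):
    """GCN-style weights: D^-1/2 (A + I) D^-1/2, fixed by the graph structure."""
    a_hat = adj + np.eye(adj.shape[0])  # add self-loops
    deg = a_hat.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(deg)
    return a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]


def attention_weights(adj, scores):
    """Attention-style weights: softmax of scores over each node's neighborhood."""
    mask = (adj + np.eye(adj.shape[0])) > 0  # neighbors plus the node itself
    masked = np.where(mask, scores, -np.inf)
    masked = masked - masked.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(masked)
    return exp / exp.sum(axis=1, keepdims=True)


# Made-up scores: node 0 "attends" more strongly to node 3 than to the others.
scores = np.zeros((4, 4))
scores[0, 3] = 2.0

gcn = gcn_weights(A)
att = attention_weights(A, scores)
print(np.round(gcn[0], 3))
print(np.round(att[0], 3))
```

With these inputs, the GCN-style weights for node 0 depend only on node degrees, whereas the attention-style weights sum to 1 per node and place most of node 0's weight on node 3.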

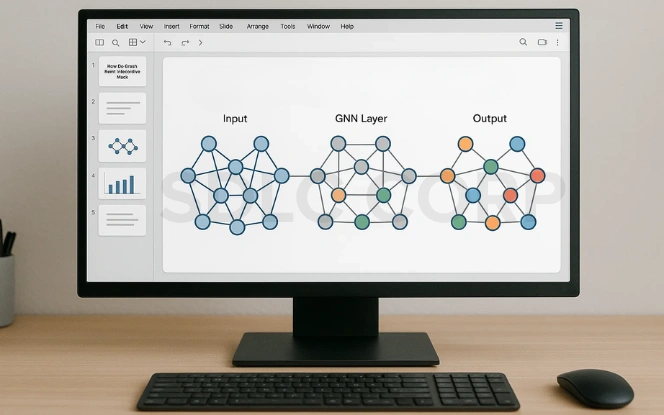

How Do Graph Neural Networks Work?

The working principle of Graph Neural Networks (GNNs) combines deep learning with graph structures, enabling models to learn from relationships between data points. Unlike traditional networks, GNNs iteratively refine node representations by exchanging information with their neighbors.

Key steps include:

- Initialization – Each node starts with an initial feature vector (e.g., user profile data in a social network or atom features in a molecule).

- Message Passing – Nodes share information with their connected neighbors, allowing context to flow through the graph.

- Aggregation & Update – The gathered messages are combined (using operations like summation, averaging, or attention) and used to update the node’s representation.

- Multiple Layers – This process is repeated across layers. After k layers, a node’s representation reflects information from nodes up to k hops away, so stacking layers lets nodes capture both local (immediate neighbors) and wider-graph structure.

- Readout – Finally, the network produces outputs such as classification (e.g., community detection), prediction (e.g., molecular properties), or ranking (e.g., recommendation scores).

Through this iterative process, GNNs perform deep learning on graphs, enabling them to model complex relational data effectively.
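The five steps above can be sketched end to end in a few lines of NumPy. This is a minimal, untrained sketch under stated assumptions: the path graph, the random features and weights, mean aggregation, and the names `message_passing_layer` and `forward` are all illustrative choices, not a specific published architecture or library API.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy graph: a path 0 - 1 - 2 - 3, as an adjacency matrix.
A = np.array([
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
], dtype=float)

# 1. Initialization: one feature vector per node (random stand-ins for real data).
H0 = rng.normal(size=(4, 3))


def message_passing_layer(adj, h, w_self, w_neigh):
    """One layer: mean-aggregate neighbor features, then update with a ReLU."""
    deg = adj.sum(axis=1, keepdims=True)
    messages = adj @ h                             # 2. sum of neighbors' vectors
    aggregated = messages / np.maximum(deg, 1.0)   # 3a. mean aggregation
    updated = h @ w_self + aggregated @ w_neigh    # 3b. combine with own state
    return np.maximum(updated, 0.0)                # ReLU non-linearity


def forward(adj, h, layer_weights):
    """4. Multiple layers: apply message passing once per weight pair."""
    for w_self, w_neigh in layer_weights:
        h = message_passing_layer(adj, h, w_self, w_neigh)
    return h


# Two layers with random (untrained) weights: 3 -> 8 -> 8 dimensions.
dims = [3, 8, 8]
layer_weights = [
    (rng.normal(scale=0.5, size=(d_in, d_out)),
     rng.normal(scale=0.5, size=(d_in, d_out)))
    for d_in, d_out in zip(dims[:-1], dims[1:])
]
H = forward(A, H0, layer_weights)

# 5. Readout: mean-pool node embeddings into one graph-level vector,
#    then map it to two class scores with an untrained linear layer.
graph_embedding = H.mean(axis=0)
w_out = rng.normal(scale=0.5, size=(8, 2))
logits = graph_embedding @ w_out

print(H.shape)       # (4, 8)
print(logits.shape)  # (2,)

# Receptive field check: node 3 is 3 hops from node 0, so with only
# 2 layers, changing node 3's input does not change node 0's output.
H0_changed = H0.copy()
H0_changed[3] += 10.0
H_changed = forward(A, H0_changed, layer_weights)
print(np.allclose(H[0], H_changed[0]))  # True
```

The last check illustrates why depth matters: with two layers, each node only sees its two-hop neighborhood, so reaching more distant nodes requires more layers or a different aggregation scheme.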

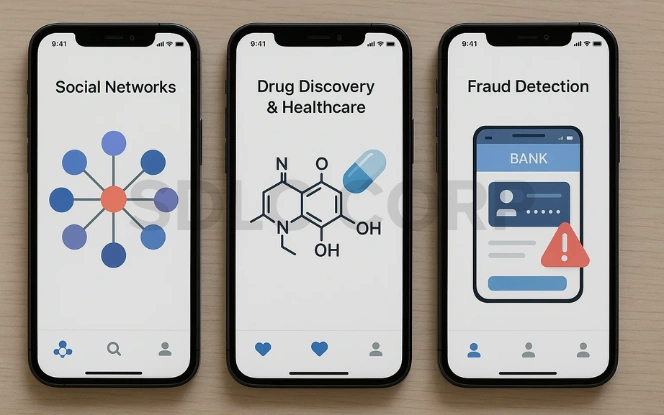

Real-World Applications of GNNs

The applications of Graph Neural Networks (GNNs) are growing rapidly across multiple industries, thanks to their ability to learn from complex relationships in data.

Key use cases include:

- Social Networks – Detecting communities, identifying fake accounts, and improving friend or content recommendations.

- Drug Discovery & Healthcare – Predicting molecular properties, modeling protein interactions, and accelerating new drug development.

- Recommendation Systems – Analyzing user-item interaction graphs to deliver highly personalized content and product suggestions.

- Fraud Detection – Uncovering hidden patterns in transaction networks to detect fraudulent activities in banking and e-commerce.

These examples show how graph-based machine learning is reshaping industries by providing deeper insights into relational and connected data.

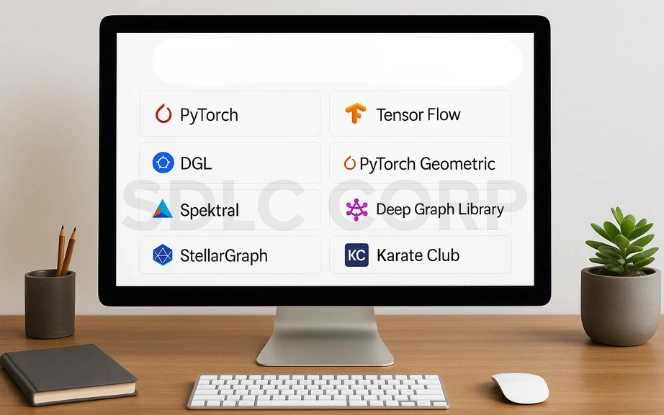

Popular Tools & Frameworks for Building GNNs

If you’re ready to dive into Graph Neural Networks (GNNs), several powerful frameworks make the process easier and more efficient:

- PyTorch Geometric (PyG) – One of the most widely used libraries for GNNs, PyG offers ready-to-use layers, datasets, and models, making it ideal for rapid experimentation and research.

- Deep Graph Library (DGL) – Designed with scalability and flexibility in mind, DGL can handle large graph datasets efficiently and supports multiple deep learning backends, making it a great choice for production-level applications.

- TensorFlow GNN – A framework for developers in the TensorFlow ecosystem, it integrates seamlessly with other TensorFlow tools, enabling smooth workflows for building and training GNN models.

These frameworks simplify graph deep learning, helping researchers and developers focus on designing effective models and solving real-world problems instead of dealing with low-level implementation complexities.

Challenges & Future of Graph Neural Networks

While Graph Neural Networks (GNNs) are driving innovation across industries, they are not without limitations. Understanding these challenges is crucial for researchers and practitioners working to advance the field.

Current Challenges:

- Scalability – Processing large-scale graphs with millions or billions of nodes (like social media networks or e-commerce platforms) requires significant computational resources, making real-time applications challenging.

- Interpretability – GNNs are often considered black-box models. Understanding why a model makes a specific prediction remains difficult, which is problematic for critical areas like healthcare and finance, where explainability is essential.

- Generalization – GNNs sometimes struggle to adapt to unseen or evolving graph structures, limiting their performance when applied to dynamic or heterogeneous graphs.

- Data Quality & Noise – Real-world graphs often contain incomplete or noisy data, which can reduce model effectiveness.

- Training Complexity – Proper tuning of GNN architectures, layers, and hyperparameters can be complex and resource-intensive, requiring expertise and experimentation.

Future Directions:

- Improved Architectures – Research is focused on developing lightweight and scalable GNN models capable of handling massive graphs efficiently.

- Explainable GNNs – Efforts are underway to make GNNs more interpretable, providing transparency into decision-making processes.

- Integration with Large Language Models (LLMs) – Combining GNNs with LLMs could unlock advanced reasoning over graph-structured knowledge, leading to smarter AI systems.

- Real-Time Graph Learning – Progress in streaming GNNs will enable real-time analysis for applications like fraud detection or cybersecurity.

- Cross-Domain Applications – Future GNNs are expected to expand into new areas like IoT networks, smart cities, and personalized medicine.

Conclusion

Graph Neural Networks are redefining how we approach graph-based machine learning. They enable machines to learn from structured relationships, making them ideal for complex real-world problems. As GNNs continue to evolve, we can expect more breakthroughs in deep learning on graphs, transforming fields from healthcare to cybersecurity.

If you’re interested in exploring these technologies, partnering with an experienced AI development company can help you leverage tools such as PyTorch Geometric or DGL to build cutting-edge solutions. The future of graph deep learning awaits with SDLC CORP.

FAQs

1. What makes Graph Neural Networks different from traditional neural networks?

Traditional neural networks work well on grid-like or sequential data (images, text, etc.), whereas GNNs are designed for graph-structured data, where the relationships between data points (edges) are just as important as the data points themselves (nodes).

2. Are GNNs only used for social networks?

No. While they’re popular for social network analysis, GNNs are also used in drug discovery, fraud detection, recommendation systems, cybersecurity, and knowledge graph reasoning.

3. Do GNNs require large datasets to work effectively?

Not always. GNNs can perform well even on smaller graphs if they are well-structured and contain meaningful relationships. However, larger datasets often enable better generalization.

4. Which is the best framework to start learning GNNs?

For beginners, PyTorch Geometric (PyG) is highly recommended due to its easy-to-use API and rich documentation. Advanced users working with large graphs may prefer DGL for its scalability.

5. Are Graph Neural Networks explainable?

Interpretability is a challenge for GNNs. However, researchers are developing explainable GNN methods to help visualize how node features and connections influence predictions.

6. What industries will benefit most from GNNs in the future?

Healthcare (drug design), finance (fraud detection), e-commerce (recommendations), cybersecurity, and smart cities are some key sectors likely to see major benefits from graph-based machine learning.