Introduction

Artificial Intelligence has advanced rapidly with Large Language Models (LLMs), now powering smart search and autonomous agents. In 2025, LLMs are multimodal, memory-enabled, and domain-specific. Insights into double descent in large language models have further improved their performance and reliability.

With rapid growth in AI adoption, many organizations now rely on experienced AI development companies to build scalable, intelligent solutions tailored to their domain. These companies help integrate LLMs across sectors like healthcare, e-commerce, education, and more.

Evolution of LLMs: A Timeline

The evolution of LLMs has been nothing short of revolutionary. Here’s how LLMs have evolved over the years

- 2018 – BERT: Introduced by Google, BERT (Bidirectional Encoder Representations from Transformers) revolutionized Natural Language Processing by enabling models to understand context in both directions.

- 2019 – GPT-2: OpenAI’s GPT-2 demonstrated the power of large-scale unsupervised language generation, capable of producing coherent long-form text.

- 2020 – T5 & Electra: Google’s T5 (Text-To-Text Transfer Transformer) unified NLP tasks under one framework. Electra improved training efficiency with a new pretraining method.

- 2021 – Codex: Codex, from OpenAI, brought code generation to the mainstream, enabling tools like GitHub Copilot.

- 2022 – GPT-3.5, ChatGPT: Enhanced conversational models led to the explosive popularity of AI chatbots and assistants.

- 2023 – Claude, LLaMA, Bard: Emphasis shifted to safety, cost-effectiveness, and open-source accessibility.

- 2024 – GPT-4, Gemini, Claude 3: The focus expanded to multimodality (supporting images, text, audio) and extended context lengths.

- 2025 – GPT-4o, LLaMA 3, Mistral: Real-time, multimodal, open-weight models optimized for edge devices and multi-agent tasks.

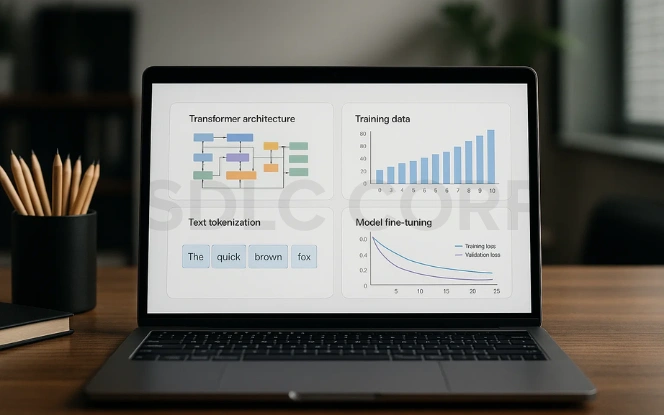

Core Technologies Behind LLMs

To fully understand the power of LLMs, it’s essential to break down their core components:

- Transformers: The foundational architecture enabling parallel attention mechanisms. This allows LLMs to learn context and dependencies effectively.

- Tokenization: Splits input text into manageable units (tokens) that LLMs can process efficiently. Techniques like byte-pair encoding (BPE) optimize this step.

- Fine-Tuning & RLHF (Reinforcement Learning with Human Feedback): Helps tailor general-purpose models to domain-specific tasks and align outputs with human values.

- RAG (Retrieval-Augmented Generation): Enhances generation accuracy by retrieving relevant external documents at runtime, providing up-to-date context.

- Quantization: Converts large models into compressed versions (e.g., INT8, 4-bit) to run on resource-constrained devices.

- Multimodal Fusion: Enables LLMs to process text, image, and audio inputs simultaneously, increasing their versatility and real-world usability.

- LoRA & QLoRA: Lightweight fine-tuning techniques that allow rapid domain-specific adjustments to base models with minimal cost.

- Prompt Engineering: The art of crafting inputs to get desired responses from LLMs. It’s crucial for maximizing model output quality.

Use Cases by Industry

LLMs have found applications across a wide range of industries:

Healthcare

- AI-powered patient triage chatbots

- Medical documentation summarization

- Symptom-to-diagnosis automation

Education

- Real-time essay feedback and grading

- AI tutors for multilingual learning

- Custom learning pathways for each student

Retail & E-Commerce

- Personalized shopping assistants and product recommendations

- AI-driven customer service chatbots

- Natural language voice-based product search

Legal

- Smart contract analysis and clause summarization

- Legal research assistance with citation generation

- Compliance automation and case law lookup

Finance

- NLP for fraud detection and anomaly identification

- Real-time financial news and sentiment analysis

- Personalized investment advisory bots

Entertainment & Media

- AI scriptwriting and storytelling assistants

- Subtitle generation and dubbing

- Audience sentiment monitoring

- Content moderation and filtering

Manufacturing & Supply Chain

- Predictive maintenance using textual sensor data

- Inventory management automation

- Voice-activated machinery diagnostics

- Vendor communication chatbots

LLM Benchmarks and Performance Comparisons

| Model | Parameters | Context Length | Modality | Strength |

|---|---|---|---|---|

| GPT-4o | ~1T | 128K | Text, Vision, Audio | Real-time multimodal AI |

| Claude 3 Opus | N/A | 1M+ | Text, Code | Long context, safe responses |

| Gemini 1.5 | N/A | 1M+ | Text, Vision | Native search integration |

| LLaMA 3 | 70B | 128K | Text | Tunable open-source |

| Mistral | 12B | 32K | Text | Lightweight, efficient |

Deployment Trends: Where LLMs Live and Work

The way LLMs are deployed and accessed is evolving rapidly, moving beyond mere cloud APIs to encompass a diverse ecosystem of solutions tailored for specific needs:

1. Cloud-Based APIs (Most Common)

- Offered by OpenAI, Anthropic, Google, Microsoft

- No need to manage infrastructure

- Pay-per-token or subscription pricing

- Scales automatically with user demand

2. On-Premise Deployments

- Used by enterprises with strict data security (e.g., healthcare, finance)

- Full control over hardware and data

- Higher setup and maintenance costs

- Requires in-house MLOps expertise

3. Containerized & Kubernetes-Based Setups

- Use Docker + K8s to deploy models like LLaMA, Mistral

- Popular in hybrid cloud environments

- Scalable and infrastructure-agnostic

- Tools: Hugging Face TGI, vLLM, Ray Serve

4. Edge and On-Device Inference

- For phones, browsers, IoT, wearables

- Models quantized/distilled (e.g., MobileBERT, Phi-2)

- Low latency and no internet needed

- Ideal for privacy-sensitive applications

5. Browser-Based Inference

- Models run with WebAssembly, WebGPU

- No back-end server required

- Lightweight models (tinyGPT, GGML) supported

- Great for demos and offline utilities

6. Function-as-a-Service (FaaS)

- Run models on demand via serverless platforms

- Platforms: Modal, Baseten, AWS Lambda with AI containers

- Cost-effective for bursty traffic

- Quick to deploy, easy to scale

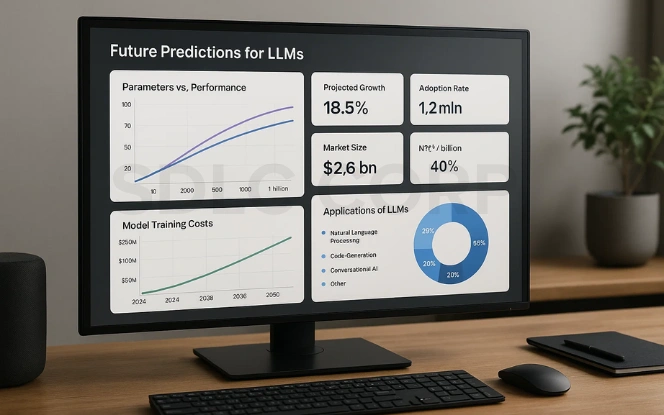

Future Predictions for LLMs

The future of LLMs is heading toward greater autonomy, personalization, and decentralization:

- Self-Updating LLMs: Continuous learning and memory-aware models that evolve through user interaction and feedback.

- Decentralized AI Systems: Federated and blockchain-based training to preserve privacy and eliminate centralized control.

- Robotics Integration: LLMs embedded in physical robots to provide reasoning, planning, and autonomous execution in real-world environments.

- Hyper-Personalized Agents: Tailored digital assistants for individuals and businesses, trained on proprietary data and user behavior.

- Multi-Agent Systems: Autonomous LLM-based teams coordinating tasks like hiring, reporting, or design without human supervision.

- LLMs as Operating Systems: Future interfaces might run on AI-first operating systems where apps are dynamically generated by LLMs.

- LLM-Powered Scientific Research: Assisting researchers in hypothesis generation, paper writing, experiment design, and literature review.

Optimizing LLM Inference for Production

Deploying Large Language Models (LLMs) in production introduces unique challenges especially around latency, cost, scalability, and user experience. This guide presents a comprehensive blueprint for optimizing LLM inference in real-world applications.

Core Challenges in Production

- Latency Sensitivity: Real-time apps (e.g. chatbots, code assistants) demand low-latency responses.

- Throughput Demands: High concurrent usage requires efficient batching and load distribution.

- Cost Efficiency: LLMs are expensive to run, especially large models (GPT-3/4 class).

- Reliability: Production workloads require 99.9% uptime and graceful failure handling.

Key Optimization Strategies

1. Model Selection

Use smaller or distilled models when possible.

Fine-tune small models on domain-specific data for better performance.

2. Quantization

Reduces model size (FP32 → INT8/FP16).

Faster matrix ops + smaller memory footprint.

Tools: bitsandbytes, ONNX, TensorRT.

3. Key-Value (KV) Caching

Store past attention values to avoid recomputation during autoregressive decoding.

Critical for chat, code completion, and streaming.

Major speedup: Especially for long sequences or long conversations.

4. Speculative Decoding

Use a small “draft” model to generate multiple tokens in parallel.

The final model verifies or corrects the draft.

Implemented in: GPT-4 Turbo, vLLM.

Speeds up generation 2–3×.

5. Batching and Prefilling

Batch requests from multiple users to maximize GPU utilization.

Pre-fill model prompts asynchronously.

Tooling: Hugging Face TGI, vLLM, Triton.

Cost Optimization Tactics

| Strategy | Benefit | Tools |

|---|---|---|

| Use Distilled Models | Lower compute cost | Hugging Face DistilGPT2 |

| Quantize Weights | 2–4× smaller models | ONNX, TensorRT, bitsandbytes |

| Autoscaling | Pay-per-usage | Kubernetes, Ray Serve |

| Prompt Caching | Avoid redundant compute | Redis, vector stores (e.g., FAISS) |

| Serverless Model APIs | Cost-effective for low QPS | Modal, Baseten, AWS SageMaker |

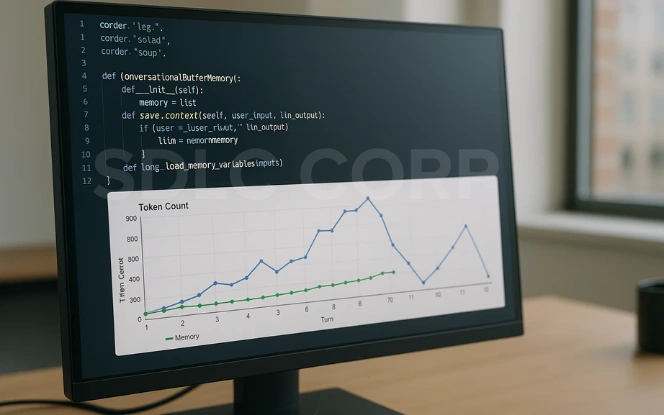

How LLMs Use Memory to Maintain Context

Large Language Models maintain “context” by remembering relevant input information across tokens or turns. This ability is key to enabling coherent and relevant responses in tasks such as conversation, summarization, and long-form content generation.

How Memory Works in LLMs

LLMs don’t have traditional memory like a computer. Instead, they simulate memory through contextual embeddings and, optionally, external memory systems.

1. Self-Attention Mechanism

- Core to transformer models.

- Allows the model to weigh and attend to different parts of the input when generating output.

- All input tokens are processed together, and each output token considers all previous tokens via attention.

2. Token-by-Token Generation

- LLMs are autoregressive: they generate text one token at a time.

- Each token prediction is based on all previous tokens in the window.

- No actual “memory” is stored beyond this unless explicitly engineered.

Memory Beyond the Context Window

Once input exceeds the context window, models forget earlier content. To address this, researchers and engineers use advanced strategies:

A. Retrieval-Augmented Generation (RAG)

- Relevant information is retrieved from an external source (e.g., vector database) and added to the prompt.

- Simulates long-term memory.

- The user asks, “What did I say last week?”

- The system retrieves notes and injects them into the current prompt.

B. Memory Replay

- The system stores key past interactions and re-injects them into future prompts.

- Used in agent frameworks like LangChain and AutoGPT.

C. Tool/Code-Augmented Memory

- Use tools like scratchpads, working memory slots, or structured notes to maintain relevant details.

D. External Memory Architectures

- Researchers are exploring architectures like:

- Longformer, Memorizing Transformers, Mamba, RETRO, etc.

- Some use recurrence or attention with memory banks to handle long sequences efficiently.

Internal vs. External Memory

| Type | Description | Limitations |

|---|---|---|

| Internal (Attention) | Memory via attention over tokens in context | Limited by context window |

| External (RAG, DBs) | Retrieve stored knowledge/documents dynamically | Needs infrastructure & engineering |

| Persistent Memory | Stored history injected manually | Fragile, requires summarization |

Ethics, Risks & Regulation

As LLMs scale, so do their ethical implications and legal responsibilities:

- Bias & Fairness: Training data biases can manifest in outputs. Responsible AI requires transparent datasets and mitigation strategies.

- Hallucinations: LLMs can generate confident but false information. Solutions include RAG, fact-checking layers, and feedback mechanisms.

- Intellectual Property Issues: Unclear copyright ownership over generated content or training sources remains a major legal gray area.

- AI Safety & Alignment: Ensuring LLMs behave in ways aligned with human intentions, particularly in high-stakes domains like healthcare or law.

- Global AI Regulations: Increasing governmental scrutiny and compliance requirements under policies like the EU AI Act, India’s DPDP Act, and the US NIST AI Risk Management Framework.

- Transparency: Open-weight models and audit trails will become industry standards for trustworthy AI systems.

- Consent & Data Rights: Regulations will require user consent and opt-out mechanisms for AI data collection.

LLMs vs SLMs (Large vs Small Language Models)

| Feature | LLMs (Large Language Models) | SLMs (Small Language Models) |

|---|---|---|

| Model Size (Parameters) | Hundreds of millions to hundreds of billions | Typically < 100 million (can be even < 10M) |

| Context Window | Large (4K–1M+ tokens) | Smaller (256–8K tokens) |

| Inference Cost | High (GPU/TPU required) | Low (can run on CPU, edge devices) |

| Accuracy/Capability | High (complex tasks, reasoning, code, multimodal) | Moderate (basic tasks, focused applications) |

| Deployment Scope | Cloud or powerful on-prem systems | Edge devices, mobile, browsers, microservices |

| Training Requirements | Extensive compute and large datasets | Lightweight datasets, fewer resources |

| Fine-tuning Complexity | Expensive, needs large-scale infrastructure | Easier, feasible on consumer-grade machines |

| Speed / Latency | Slower, especially for long prompts | Fast, sub-second responses possible |

What Are SLMs?

SLMs (Small Language Models) are compact transformer-based models designed for lightweight tasks, local deployment, and cost-efficiency.

Examples:

- Phi-2 (2.7B), Gemma 2B, DistilBERT, TinyLlama, Mistral-7B (considered “small” relative to >50B LLMs)

- Ultra-small: tinyGPT, ALBERT, MobileBERT, and models under 50M parameters

Strengths:

- Low-latency, energy-efficient

- Ideal for real-time edge AI,

- Fine-tuning possible on CPU

Weaknesses:

- Limited reasoning and abstraction capabilities

- Cannot manage long documents or complex tasks

- Prone to hallucination or oversimplification on nuanced queries

Summary: Key Takeaways

| Question | Answer |

|---|---|

| Do you need high reasoning ability? | LLM |

| Do you want to run AI on-device? | SLM |

| Is cost a concern for your workload? | SLM (cheaper for scaled deployments) |

| Are you building a complex assistant? | LLM |

| Is your model for mobile/edge apps? | SLM |

Conclusion

LLMs are transforming how people write, search, and interact with machines. In 2025, real-time, multimodal AI assistants span devices and industries, powered by large language models as optimizers for smarter, more efficient systems.

To build AI into your business, partner with our AI development company. Let us help you innovate faster, safer, and smarter.

FAQs

Q1. What’s the difference between an LLM and traditional NLP?

A: LLMs use deep learning and transformer-based architectures to understand context, semantics, and generate natural-sounding responses, unlike rule-based NLP, which relies on predefined logic.

Q2. Can LLMs replace human jobs?

A: LLMs automate repetitive and data-heavy tasks but also create new roles in AI oversight, prompt engineering, ethics, and customization.

Q3. Are there any free or open-source LLMs available?

A: Yes. Popular free/open-source LLMs include:

- LLaMA 3

- Mistral

- Falcon

- Phi-3-mini

These can be fine-tuned and deployed locally.

Q4. Can LLMs run offline or on small devices?

A: Yes. With quantization and lightweight models (like TinyLLaMA, GGUF), LLMs can now run on laptops, mobile phones, and even edge IoT devices.

Q5. What is RAG in LLMs?

A: RAG (Retrieval-Augmented Generation) enhances model responses by fetching real-time data or documents and combining them with generative output. It improves accuracy and reduces hallucinations.

Q6. What are quantized models?

A: These are compressed versions of LLMs (e.g., 8-bit, 4-bit models) that reduce size and computation needs ideal for deployment on edge or low-resource environments.

Q7. How much does it cost to fine-tune an LLM?

A: Fine-tuning a small-to-medium model can cost between $50–$500 using LoRA, QLoRA, or open-source tools. Costs rise significantly for large proprietary models.

Q8. Are LLMs multilingual?

A: Yes. Modern models like GPT-4o, Claude 3, and Gemini 1.5 support over 100+ languages, including low-resource and regional dialects.

Q9. What’s the best LLM in 2025?

A: Depends on the use case:

- GPT-4o – Best for real-time, multimodal tasks

- Claude 3 Opus – Best for long-context, safety

- Gemini 1.5 – Best for search-integrated applications

- LLaMA 3 – Best open-source LLM for customization