Introduction

Responsible AI is more than a concept. It is a practical approach to building artificial intelligence systems that are safe, fair, and transparent.

As AI becomes part of healthcare, finance, education, and everyday tools, the way it is developed has real consequences for people and society. Responsible AI aims to ensure that systems work reliably, respect human values, and minimize harm.

Responsible AI brings together AI governance, AI ethics, and practical methods for ethical AI development. By applying AI risk management and following trustworthy AI frameworks, organizations can design technology that earns trust while staying compliant with regulations. Responsible AI development is not about slowing progress; it is about ensuring that innovation is sustainable, transparent, and aligned with human needs.

What Is Responsible AI Development?

Responsible AI development means creating systems that follow principles of fairness, safety, and accountability. AI differs from traditional software because it learns its behavior from data rather than following only explicitly programmed rules. This introduces risks such as bias inherited from training data and unpredictable behavior on unfamiliar inputs.

Key aspects of responsible AI include:

- Fair and inclusive datasets.

- Transparent algorithms that explain decisions.

- Privacy safeguards.

- Security against attacks.

- Compliance with laws and regulations.

Together, these practices help organizations innovate while building trust with users.

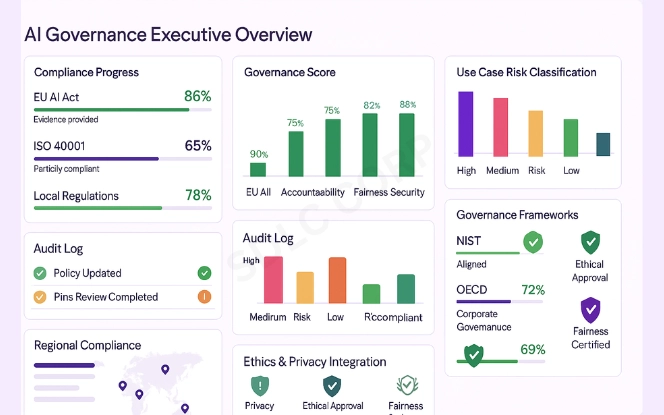

The Role of AI Governance

AI governance sets the rules for how AI is built and used. It defines responsibilities, oversight, and compliance mechanisms. Governance protects against misuse and ensures alignment with social and legal expectations.

Elements of governance include:

- Policies: Clear rules on acceptable AI practices.

- Oversight: Committees or independent boards for review.

- Compliance: Adherence to global and local laws.

- Transparency: Clear documentation of decisions.

Enterprise-Level Governance and Control: Large organizations often deploy AI across departments and regions, which makes governance more complex. Enterprise frameworks help manage access controls, data usage, and accountability at that scale. Practices followed by an enterprise AI development company can inform scalable, responsible AI governance models.

Good governance is the backbone of responsible AI. Without it, risks multiply; with it, organizations can deploy AI with confidence.

AI Ethics as the Foundation

AI ethics provides moral guidelines for AI development. Ethical challenges arise from biased training data, privacy risks, and lack of accountability.

Core ethical principles:

- Fairness: Prevent discrimination.

- Transparency: Make decisions understandable.

- Accountability: Assign responsibility for outcomes.

- Human focus: Support human judgment rather than replace it.

Ethical AI creates trust by ensuring fairness and respect for human values.

Ethical AI Development in Practice

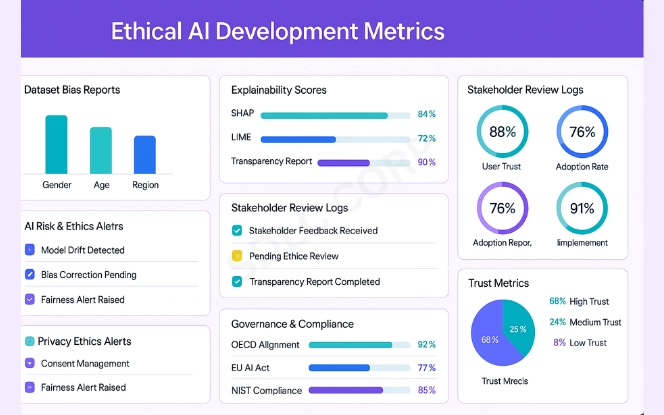

Ethical AI development applies ethics at each stage of the AI lifecycle.

Steps include:

- Bias detection: Identify and reduce hidden bias in data.

- Explainability: Use interpretable models and tools.

- Monitoring: Track AI performance after launch.

- Diverse input: Involve multiple stakeholders.

By embedding ethics into practice, organizations reduce risk and increase adoption.
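The bias-detection step can be made concrete with a simple group-fairness check. The sketch below is a minimal illustration, assuming binary predictions (1 = positive outcome) and a single sensitive attribute. It computes each group's selection rate (the share of positive predictions) and the ratio of the lowest rate to the highest. The 0.8 threshold follows the widely cited "four-fifths rule" heuristic; the function names and sample data are hypothetical, and a real audit would combine several metrics with domain review.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(predictions, groups):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group receives positive predictions, so the rates are equal
    return min(rates.values()) / highest


def flag_bias(predictions, groups, threshold=0.8):
    """Flag the model for human review when the ratio falls below the threshold.

    The 0.8 default mirrors the "four-fifths rule" heuristic. It is a screening
    signal, not proof of fairness or unfairness.
    """
    return disparate_impact_ratio(predictions, groups) < threshold


if __name__ == "__main__":
    # Hypothetical predictions (1 = approved) and group labels for illustration.
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    grps = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(preds, grps))
    print(disparate_impact_ratio(preds, grps))
    print(flag_bias(preds, grps))
```

In this sample, group A is approved 75% of the time and group B 25% of the time, giving a ratio of about 0.33, so the check flags the model for review.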

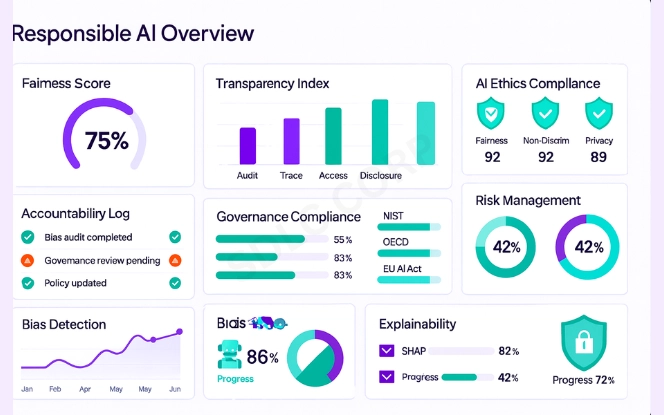

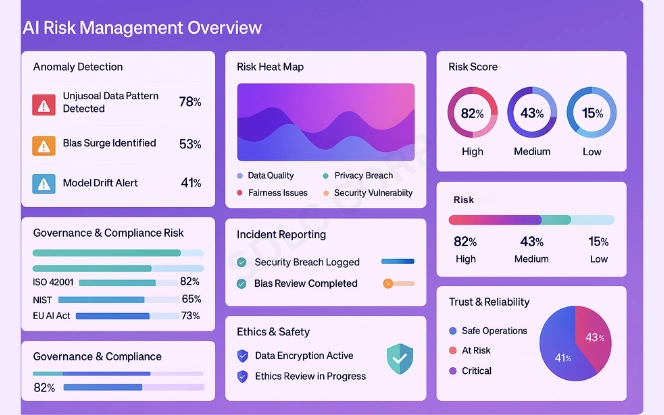

AI Risk Management: Mitigating the Challenges

AI risk management identifies and controls threats that are specific to, or amplified by, AI systems. Risks include biased outcomes, data breaches, and unpredictable outputs.

Risk management involves:

- Protecting sensitive data.

- Running bias audits.

- Testing extreme scenarios.

- Reporting incidents promptly.

Effective risk management reduces harm while enabling safe innovation.
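Testing extreme scenarios can be partly automated. The sketch below assumes a hypothetical scoring model whose valid output is a finite number in [0, 1]; it feeds the model a list of typical and edge-case inputs and records every case that either raises an exception or returns an invalid value. Both `toy_risk_model` and `stress_test` are illustrative names, not part of any specific framework.

```python
import math


def toy_risk_model(income, debt):
    """Hypothetical model: returns a risk score that should lie in [0, 1]."""
    ratio = debt / max(income, 1.0)  # guard against zero or negative income
    return 1.0 / (1.0 + math.exp(-(ratio - 1.0)))  # logistic squashing


def stress_test(model, cases, low=0.0, high=1.0):
    """Run the model on each case and collect the inputs that fail.

    A case fails if the model raises an exception or returns a value that is
    not finite or lies outside [low, high].
    """
    failures = []
    for case in cases:
        try:
            score = model(*case)
        except Exception as exc:  # record crashes instead of stopping the run
            failures.append((case, f"error: {exc!r}"))
            continue
        if not math.isfinite(score) or not (low <= score <= high):
            failures.append((case, f"invalid output: {score}"))
    return failures


cases = [
    (50_000.0, 10_000.0),  # typical applicant
    (0.0, 0.0),            # zero income and zero debt
    (1e12, 0.0),           # extremely high income
    (1.0, 1e12),           # extremely high debt
    (1.0, -1e12),          # invalid, very large negative debt
    (float("nan"), 10.0),  # missing value encoded as NaN
]

if __name__ == "__main__":
    for case, reason in stress_test(toy_risk_model, cases):
        print(case, reason)
```

Here the harness reports two failures: the large negative debt overflows `math.exp`, and the NaN income propagates into a NaN score. Each such failure is a candidate for a fix and, where it could affect users, for prompt incident reporting.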

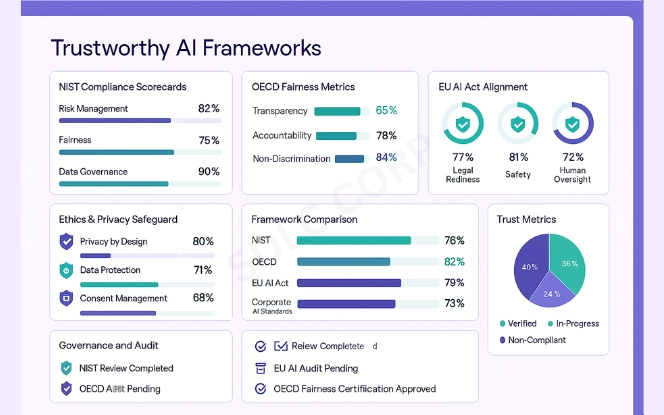

Trustworthy AI Frameworks

Trustworthy AI frameworks provide structured methods for building responsible systems.

Notable frameworks include:

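- NIST AI Risk Management Framework: Structured risk identification and control, organized around four functions: Govern, Map, Measure, and Manage.

- OECD AI Principles: Promote fairness, transparency, and accountability.

- EU AI Act: A risk-based regulation that sets legal safeguards for rights and safety, with stricter obligations for higher-risk AI systems.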

Adopting these frameworks supports regulatory compliance and builds user confidence.

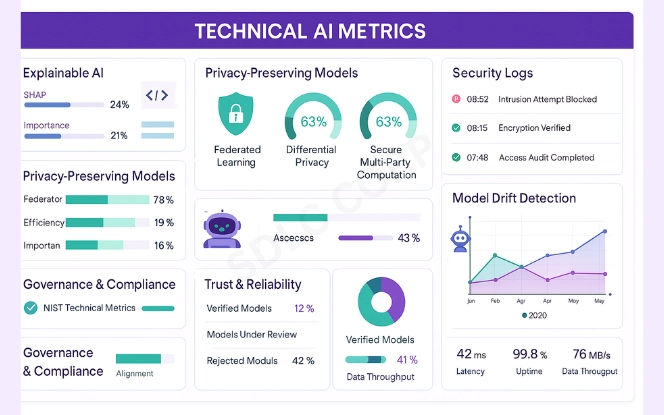

Technical Considerations in Responsible AI

Technical practices turn principles into working systems:

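- Explainable AI tools (LIME, SHAP) to clarify why a model made a given prediction.

- Bias detection metrics to measure and reduce unfair outcomes.

- Model monitoring to maintain accuracy over time.

- Privacy-preserving methods such as federated learning, which trains models without centralizing raw data.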

- Security measures against adversarial attacks.

These tools make responsible AI both practical and effective.
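Model monitoring often starts by checking whether the data a model sees in production still resembles the data it was validated on. The sketch below uses the Population Stability Index (PSI), one common drift measure, chosen here for illustration rather than taken from the source. It assumes fixed bin edges derived from a baseline sample; the 0.1 and 0.25 cut-offs are a widely used rule of thumb, not a standard, and all function names are hypothetical.

```python
import bisect
import math


def bin_proportions(values, edges):
    """Share of values in each bin [edges[i], edges[i+1]); out-of-range values are clipped."""
    n_bins = len(edges) - 1
    counts = [0] * n_bins
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), n_bins - 1)  # clip values outside the edges into the end bins
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(expected, actual, edges, eps=1e-6):
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    total = 0.0
    for e, a in zip(bin_proportions(expected, edges), bin_proportions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) and division by zero for empty bins
        total += (a - e) * math.log(a / e)
    return total


def drift_status(score):
    """Map a PSI value to a commonly used (but not universal) rule of thumb."""
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]   # hypothetical validation scores
current = [0.8, 0.85, 0.9, 0.95, 0.6, 0.7, 0.9, 0.9]  # hypothetical production scores

if __name__ == "__main__":
    score = psi(baseline, current, edges)
    print(round(score, 3), drift_status(score))
```

Comparing the baseline with itself gives a PSI of 0 ("stable"), while the production sample, concentrated in the upper bins, scores far above 0.25. Note that the `eps` floor for empty bins strongly influences the magnitude, so teams typically fix it, along with the bin edges, when they set alert thresholds.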

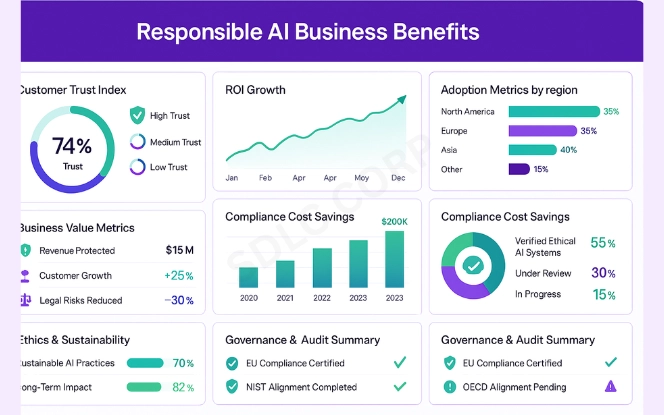

Business Benefits of Responsible AI

Responsible AI is not only ethical; it is also strategic.

Benefits include:

- Customer trust: Fair, transparent systems improve adoption.

- Competitive advantage: Ethical AI helps products stand out in crowded markets.

- Legal compliance: Reduces the risk of penalties.

- Innovation: Provides safe conditions for experimentation.

Responsible AI creates sustainable value for both businesses and society.

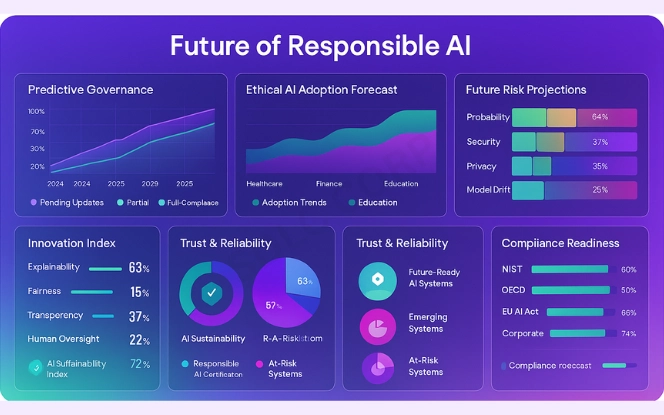

The Future of Responsible AI

AI will shape critical areas such as healthcare, mobility, and national security. As its capabilities grow, so will the importance of developing it responsibly.

Trends include:

- More regulation and oversight.

- Independent review boards.

- Cross-border cooperation.

- Advanced global frameworks.

The future of AI depends on trust, and responsible development helps ensure that technology benefits society.

Risk Management and Policy Alignment

Responsible AI development requires alignment with legal, regulatory, and internal policies. Risk assessments help organizations anticipate unintended consequences. An AI consulting company may assist in defining governance structures, escalation procedures, and compliance strategies.

Conclusion

Responsible AI development ensures fairness, safety, and accountability in technology. Through AI governance, AI ethics, ethical AI development, AI risk management, and trustworthy AI frameworks, organizations can design systems that deliver value while protecting people.

At SDLC Corp, we help businesses build AI that is effective, transparent, and sustainable. Contact SDLC Corp to learn how we can support your responsible AI journey.

Hire SDLC Corp's AI development services today and build AI solutions you can trust.

FAQs

What Is Responsible AI And Why Does It Matter?

Responsible AI refers to developing artificial intelligence in a way that is safe, fair, and transparent. It matters because it helps ensure that AI systems protect human rights and avoid harmful outcomes. Responsible AI builds trust between organizations and users while supporting long-term innovation.

How Does AI Governance Help In Responsible AI Development?

AI governance provides policies, oversight, and compliance frameworks that guide how AI is designed and deployed. It ensures that organizations follow regulations, document decision-making processes, and reduce risks. Effective AI governance creates accountability and transparency across the entire lifecycle of AI systems.

Why Are AI Ethics Important For Businesses Using AI?

AI ethics helps businesses prevent discrimination, protect user privacy, and ensure accountability in decision-making. By focusing on ethical principles such as fairness and transparency, companies can build systems that earn customer trust. Ethical practices also reduce regulatory risks and improve long-term adoption of AI solutions.

What Are The Best Practices For Ethical AI Development?

Ethical AI development includes reducing bias in data, using explainable models, and monitoring AI systems after deployment. Involving diverse stakeholders and aligning with trustworthy AI frameworks also helps businesses create fair and reliable systems. These practices make AI more sustainable and user-focused.

How Can AI Risk Management Improve AI Adoption?

AI risk management identifies and reduces potential threats such as bias, security vulnerabilities, and data leaks. By applying strong controls, organizations can prevent failures and build trust in AI applications. Risk management also supports compliance with global frameworks and helps businesses scale AI responsibly.