Introduction

In a world where content is king, visuals reign supreme. But not everyone has the time, skill, or tools to design images manually. That’s where text-to-image generation tools enter the spotlight AI-powered systems that allow users to describe what they want in text, and receive high-quality, often stunning images in return. These tools are transforming how we approach design, storytelling, marketing, and more. With just a few words, anyone can bring their imagination to life without even touching a brush or design software.

Whether you’re a graphic designer seeking inspiration, a content marketer looking to speed up production, or simply curious about the magic behind this technology, this blog will walk you through everything you need to know about text-to-image generation from how it works and who’s leading the market, to ethical concerns and exciting future trends.

1. Understanding the Technology Behind Text-to-Image AI

Before diving into the tools themselves, it’s essential to understand how this technology works. Most of today’s leading text-to-image systems use diffusion models, which transform random visual noise into coherent images over multiple steps. These models are trained on huge datasets made up of image-text pairs, enabling them to link natural language prompts with accurate visual outcomes.

For instance, if a prompt says “a futuristic city under moonlight, in cyberpunk style,” the model recognizes the objects (“city,” “moonlight”), the style (“cyberpunk”), and builds an image accordingly. Some tools even use transformer-based architectures—like those used in GPT models to better interpret sentence structure and context.

Related Read: Curious about converting visuals into written content instead? Here’s what are 3 easy methods for turning an image into text.

2. Key Players in the Text-to-Image Space

The field is quickly evolving, yet several tools and companies have emerged as leaders in the domain. Each tool has unique capabilities and caters to different audiences from casual users to professional artists.

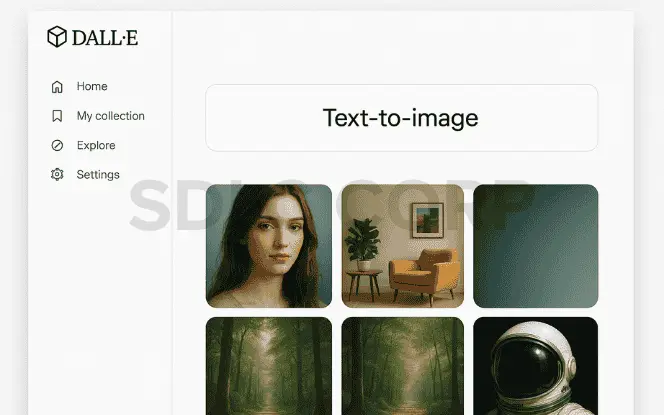

1. OpenAI’s DALL·E 2 and DALL·E 3

Is among the most well-known text-to-image models. With an emphasis on realism and creativity, DALL·E models excel at producing stunning visuals from imaginative prompts. Moreover, OpenAI has integrated DALL·E into platforms like Microsoft Designer and Bing Image Creator, bringing image synthesis to mainstream applications.

Features:

DALL·E 3 delivers more nuanced, faithful renditions of prompts, including better facial details and textual elements. It’s highly accessible via ChatGPT interfaces.

Pricing & Access:

DALL·E 3 access is bundled with ChatGPT Plus or Enterprise plans. Pricing varies based on image size and quality.

Rights & Licensing:

OpenAI typically grants broad usage rights, including for commercial use, but you should review the specific terms in your subscription.

Best Use Cases:

Ideal for content creators, marketers, designers, and storytellers seeking rich, prompt-driven visuals. Great for book illustrations, ads, storyboard visuals, and rapid ideation.

2.Midjourney

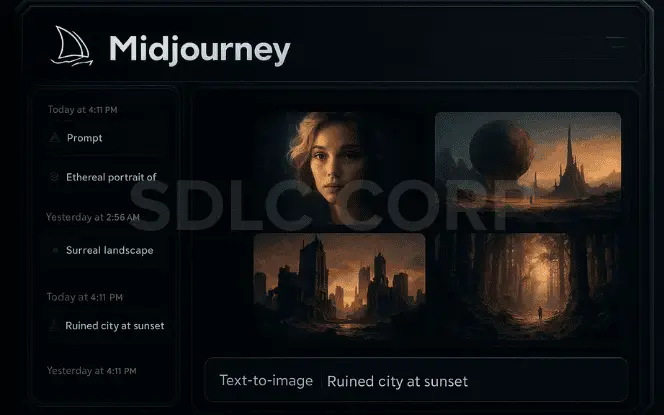

On the other hand, has carved a niche with its stylized, cinematic aesthetic. Operating primarily through Discord, it relies on community engagement and prompt engineering. Designers often prefer Midjourney for creating mood boards, concept art, or stylized illustrations.

Features:

Famous for cinematic, stylized outputs favored by artists, concept designers, and mood-board creators. Operates via Discord with prompt refinements and community engagement.

Pricing Plans:

Offers four subscription tiers—Basic, Standard, Pro, and Mega. Features like unlimited Relax mode generations and commercial usage vary by tier.

Rights & Licensing:

Licensing depends on your subscription. Paid tiers generally include commercial usage rights; free trials have restrictions.

Best Use Cases:

Creative professionals crafting concept art, stylized illustrations, moodboards, or signature aesthetic visuals.

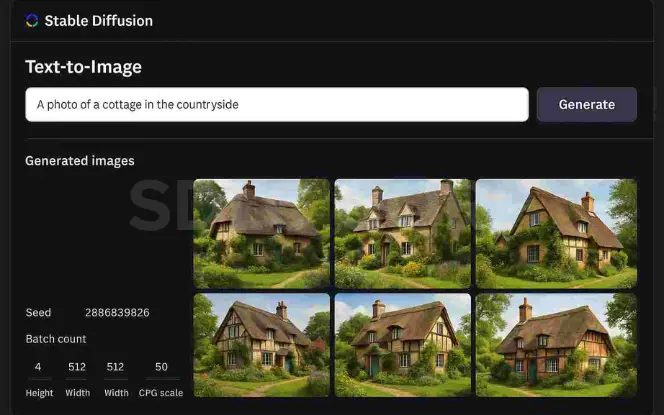

3. Stable Diffusion

An open-source alternative developed by Stability AI, provides greater flexibility. Because it’s open source, developers and artists can fine-tune it, build custom interfaces, or integrate it into other platforms. This has fueled a vibrant ecosystem of derivative tools and plugins. Furthermore, being able to run Stable Diffusion locally is a significant advantage for those concerned about privacy or internet dependence.

Features:

Open-source, highly customizable. Supports prompt weighting, image-to-image generation, negative prompts, outpainting, and running locally/offline.

Pricing:

Offers free access, plus Pro / API subscription tiers. Pricing and features vary; some platforms offer plans starting around $9/month.

Rights & Licensing:

Open-source license grants extensive freedom, though specifics may vary by derivative model or integration.

Best Use Cases:

Developers, researchers, and privacy-minded creators building custom tools, plugins, or workflows—or working offline.

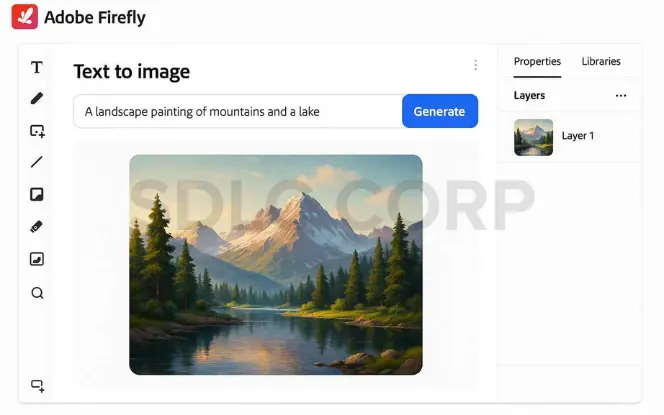

4. Adobe Firefly

Offers AI-powered text-to-image generation integrated within the Adobe Creative Suite. While still under development in some areas, it focuses on commercial usability, licensing clarity, and creative integration.

Features:

Fully integrated into Adobe Creative Cloud. Includes text-to-image, video generation, mood-boarding (Firefly Boards), prompt-based editing, generative fill/expand, and support for third-party models.

Pricing:

Standalone plans range from about $9.99/month to $199.99/month depending on credits. Creative Cloud Pro bundle includes unlimited generative image credits and 4,000 AI credits/month.

Rights & Licensing:

Trained on legally cleared data for commercially safe usage, offering legal protection for commercial projects.

Best Use Cases:

Creative professionals in the Adobe ecosystem needing seamless integration, cross-device synchronicity, and licensing assurance.

3. Use Cases Across Industries

The applications of text-to-image generation are practically limitless. For instance, in advertising and marketing, creative teams can instantly prototype visuals, social media graphics, or ad concepts without involving a traditional design pipeline. Similarly, e-commerce platforms can generate dynamic product imagery for catalogs and promotional banners.

In the gaming industry, developers use text-to-image tools for rapid concept development. Character designs, environment mockups, and asset previews can be generated in minutes, reducing production time significantly. The film industry is also tapping into these tools for storyboarding and concept visualization, allowing directors and art departments to explore various directions early in pre-production.

Meanwhile, educational content creators are using AI-generated visuals to enhance learning materials. Science diagrams, historical scenes, and illustrative metaphors are easier to produce than ever before. In the medical field, researchers are exploring synthetic data generation for rare conditions training diagnostic models without real patient images.

Lastly, individual creators, such as digital artists or social media influencers, are embracing text-to-image AI for art, memes, or even children’s books. Thus, these tools aren’t just for big businesses; they empower anyone with an idea to bring it to life visually.

Bonus: For those who frequently convert visual assets, check out 3 Easy Strategies of Converting Images to Word in 2024. Whether you’re a student or a professional, this guide makes converting formats effortless.

4. How Prompt Engineering Enhances Results

Creating a strong prompt is like giving clear directions to a designer. The better the description, the better the image. For instance, “a cat” might yield a basic photo. But “a fluffy tabby cat sitting on a red velvet armchair, Victorian style” provides richer context.

Prompt engineering has quickly become a creative skill. Communities share prompt templates and even tools like “prompt builders” help guide users on what to include such as lighting, mood, style, and subject. Rewriting a prompt just slightly can lead to drastically different results, which is both a challenge and a creative opportunity.

5. Ethical Concerns and Challenges

While text-to-image technology is advancing rapidly, its growth raises several complex ethical and legal questions that are far from resolved.

1. Copyright and Intellectual Property Risks

A major controversy stems from the way these models are trained—often on vast datasets scraped from the internet. These datasets may contain millions of copyrighted images, illustrations, and artworks, sometimes without explicit consent from the creators. This creates a legal grey area:

Derivative works: AI-generated images can closely resemble or directly replicate elements of original works, raising questions of authorship and infringement.

Artist rights: Many artists argue that their styles are being replicated without attribution or compensation, challenging traditional notions of creative ownership.

Regulatory uncertainty: Current copyright laws in most countries do not fully address AI-generated content, leaving both creators and users vulnerable to disputes.

2. Bias, Stereotyping, and Cultural Representation

AI models inevitably reflect the biases present in their training data. This can manifest in subtle or overt ways:

Demographic bias: Overrepresentation or underrepresentation of certain ethnicities, genders, or body types.

Stereotypical depictions: When prompted with certain professions or roles, models may default to culturally biased or historically skewed imagery.

Cultural insensitivity: Lack of context or nuance can lead to inaccurate or even offensive portrayals of traditions, attire, or symbols.

These issues highlight the need for careful dataset curation, ongoing bias audits, and culturally aware moderation.

3. Misinformation and Synthetic Media Abuse

The ability to generate hyper-realistic visuals introduces risks in the realm of public trust and information integrity:

Deepfakes and false narratives: AI-created images could be used to fabricate events, impersonate individuals, or support false claims in political or social contexts.

Erosion of trust: As synthetic imagery becomes indistinguishable from reality, the public may begin to question the authenticity of genuine photographs and news sources.

Legal and ethical gaps: Many jurisdictions lack comprehensive laws for addressing the malicious use of synthetic media, particularly when it comes to disinformation campaigns.

6. Accessibility and Democratization of Creativity

What once required Photoshop skills, art degrees, or access to stock libraries is now possible for anyone with a browser and an idea. Text-to-image generation tools are democratizing visual creativity. Students, small business owners, indie creators, and even NGOs are leveraging this tech to stand out in competitive digital spaces.

Many tools also support non English prompts, making global content creation more inclusive. The availability of free or freemium tools means users can experiment and learn without investing heavily upfront.

Working with scanned PDFs? Here’s how to easily convert free PDF images to text using simple tools.

7. Future Trends in Text-to-Image Generation

The horizon for this tech is full of exciting possibilities:

- Real-time generation: Imagine creating visual responses in live chats or games.

- Video generation: The next step could involve moving visuals or animations from text.

- Augmented Reality (AR): Text-generated visuals for AR shopping, filters, and experiences.

- Creative Personalization: Tailored visual styles that match your brand or personality.

- Multimodal AI: Tools that combine audio, text, image, and video into a single creative workflow.

With improving GPUs and edge computing, image generation might soon happen on your smartphone, instantly and offline.

Conclusion

Text-to-image tools are redefining creativity, enabling quick, vivid visuals for marketing, art, gaming, and more. Ethical use through crediting, transparency, and fair policies is key. Partnering with an AI development company can help you integrate these tools effectively.

FAQ'S

Are text-to-image tools free to use?

Most platforms offer a free trial or limited credits so you can experiment. After that, you’ll need a paid plan for higher quality, faster queues, or business features. Open-source tools can be run locally at no license cost, but you’ll still pay in hardware/compute time and setup effort.

Can I use AI-generated images for commercial purposes?

Sometimes—check the current terms for your specific plan and region. Many providers allow commercial use on paid tiers. Regardless of the tool, you’re responsible for avoiding trademarked logos, celebrity likenesses, or copyrighted characters, and for securing model/photo releases if your image depicts identifiable people or branded products.

What is prompt engineering?

It’s crafting inputs that steer the model’s output. A simple structure is:

Subject | Style/era | Lens/lighting | Composition | Key details | Aspect ratio | (Negative terms)

Tip: iterate, compare small changes, and (where supported) try negative prompts, seeds for reproducibility, or image references/control for layout.

Which tool is best for artists?

Depends on your goal:

Stylized concept art quickly → tools known for strong artistic looks.

Maximum control & customization (fine-tuning, local add-ons) → open-source ecosystems.

Business compliance & easy handoff to design apps → tools integrated with creative suites.

Beginner-friendly → tools inside familiar chat/design surfaces.

How do these tools understand my text?

They pair a text encoder (transformer) with an image generator (diffusion). The text encoder turns your prompt into embeddings; the diffusion model iteratively denoises random noise into an image that matches those embeddings. Safety and policy filters may block or adjust outputs.

Can I generate images of real people or brands?

You can’t directly generate exact replicas of real people or trademarked brands without permission, as that can raise legal and ethical concerns. However, Generative AI for Real Estate can create realistic, AI-generated lookalike visuals—such as property photos, agent avatars, or branded-style graphics—that are inspired by your requirements without copying actual individuals or brand assets. This ensures compliance while still delivering professional, high-quality images tailored to your marketing needs.

-Can I run these models on my own computer?

Yes — you can run many text-to-image models locally if you have the right hardware. An NVIDIA GPU with 8–12GB+ VRAM is ideal, though smaller setups or Apple Silicon can work with optimizations. Tools like Automatic1111, ComfyUI, or InvokeAI make it easy, while CPU-only runs are slow. For higher resolutions or faster output, cloud services may be better.