Introduction

Artificial Intelligence (AI) is transforming industries, but one of its biggest challenges is bias. Bias happens when algorithms produce unfair results due to skewed data, flawed models, or human influence. Simply put, biased data leads to biased outcomes, making mitigation crucial for building ethical and trustworthy systems. The impact is serious: in healthcare, it can cause misdiagnosis; in finance, it may affect credit scoring; in HR, it can distort hiring; and in law enforcement, it risks unfair profiling. That’s why AI fairness must be treated as a priority. Addressing these challenges often requires specialized expertise. Partnering with experts in AI development solutions can help organizations design transparent, fair, and responsible AI systems.

Enterprise-Scale Bias Controls in AI Platforms

Large organizations deploy AI across multiple teams and use cases, increasing the risk of inconsistent bias controls. Enterprise-grade platforms implement centralized monitoring and reporting mechanisms. Architectural practices used by an enterprise AI development company often inform how bias mitigation is standardized at scale.

1. What is Bias in AI?

Before diving into mitigation strategies, it’s essential to establish a clear understanding of AI bias. AI bias can be defined as the tendency of an artificial intelligence system to produce unfair, partial, or discriminatory outcomes that do not reflect objective reality. Instead of being truly neutral, AI systems can unintentionally replicate human prejudices or systemic inequalities.

Unlike traditional software, AI learns from data. If the data itself carries imbalance, stereotypes, or historical discrimination, the system can magnify these flaws at scale. This makes bias not just a technical issue, but also a societal and ethical challenge.

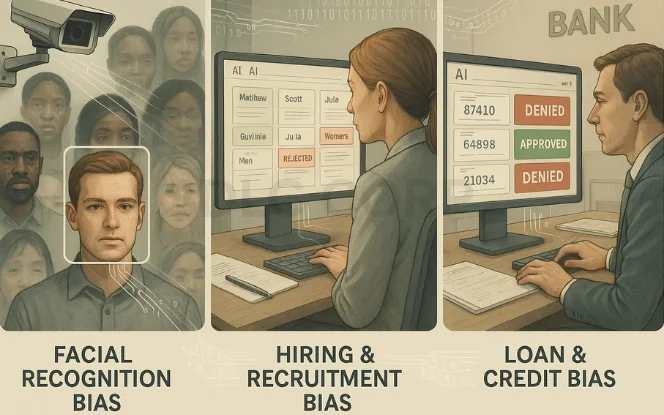

AI Bias Examples in Real-World Systems

To understand the impact of AI bias, let’s look at practical scenarios:

- Facial Recognition Systems

- Research from MIT and other institutions revealed that facial recognition technologies have higher error rates for women and darker-skinned individuals than for lighter-skinned men.

- This raises concerns when such systems are deployed in law enforcement, security, or surveillance.

- Hiring and Recruitment AI

- Automated hiring systems have shown bias by preferring resumes with “male-associated” language or names, leading to unfair hiring practices.

- Example: An AI tool once downgraded resumes containing the word “women’s” (e.g., “women’s chess club captain”).

- Loan and Credit Approvals

- AI-powered financial models have been accused of discriminating against minority groups, either due to biased historical lending data or because features such as ZIP codes act as proxies for sensitive attributes like race.

These AI bias examples demonstrate how technical flaws can translate into real-world harm, often at scale.

Why AI Bias Matters

Ignoring AI bias has multi-dimensional consequences:

- For Businesses

- Loss of brand reputation and customer trust if AI systems are found to discriminate.

- Increased operational risks, including inaccurate predictions and poor decision-making.

- For Customers

- Discriminatory experiences reduce confidence in AI-powered services.

- Biased outcomes may deny individuals access to jobs, loans, or healthcare opportunities.

- For Regulators

- Governments across the globe are implementing AI fairness and transparency regulations.

- Non-compliance can result in fines, lawsuits, and stricter scrutiny.

In short, addressing bias is not optional; it is a technical, ethical, and regulatory necessity. AI systems must be designed with fairness at their core to build a foundation of trustworthy and responsible AI.

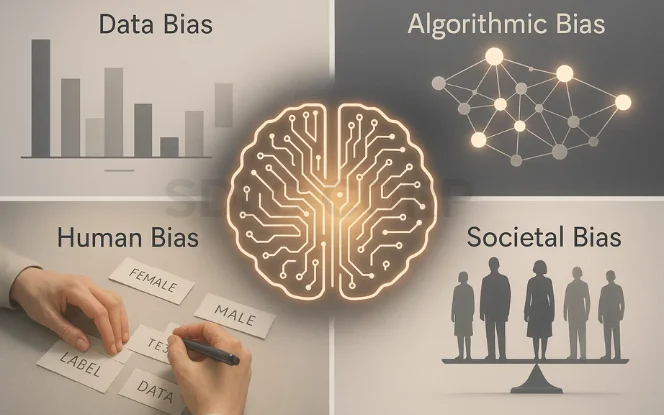

2. Sources of Bias in AI Systems

To design fair and trustworthy models, it is essential to understand the sources of AI bias. Bias in artificial intelligence doesn’t come from a single factor—it emerges from multiple layers of the AI development lifecycle, including data collection, algorithm design, human intervention, and broader societal influences.

1. Data Bias

Data is the backbone of AI, and if the data is biased, the outputs will be too. Data bias occurs when the training dataset is:

- Unbalanced – Certain demographics or scenarios are overrepresented, while others are underrepresented.

- Incomplete – Missing critical features or groups needed to reflect reality.

- Historically Skewed – Past inequalities are embedded in the dataset.

Example: A medical AI model trained mostly on data from Western populations may misdiagnose patients from other ethnic groups due to lack of diversity in training data.
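A simple representation audit can surface unbalanced or incomplete training data before a model is ever trained. The sketch below is a minimal illustration, assuming each record carries a single group label and that reference population shares are known. The function name representation_report, the 0.5 tolerance, and the example numbers are all hypothetical.

```python
from collections import Counter


def representation_report(groups, reference_shares, tolerance=0.5):
    """Compare each group's share of a dataset with an expected reference share.

    A group is flagged as underrepresented when its observed share falls below
    `tolerance` times its reference share (an illustrative cut-off, not a standard).
    Groups missing from the data entirely get an observed share of 0.
    """
    counts = Counter(groups)
    total = len(groups)
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        report[group] = {
            "observed": round(observed, 3),
            "expected": expected,
            "underrepresented": observed < tolerance * expected,
        }
    return report


# Hypothetical training set: 90 records from region "A", 10 from region "B",
# checked against an assumed 60/40 population split.
training_groups = ["A"] * 90 + ["B"] * 10
report = representation_report(training_groups, {"A": 0.6, "B": 0.4})
print(report["B"])  # {'observed': 0.1, 'expected': 0.4, 'underrepresented': True}
```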

2. Algorithmic Bias

Even if the data is relatively balanced, the algorithms themselves can introduce bias. This happens when:

- The model prioritizes accuracy over fairness, unintentionally optimizing for majority groups.

- Certain features are weighted in ways that correlate with sensitive attributes (e.g., ZIP code correlating with race or income).

- Limitations in model design prevent detection of minority patterns.

Example: A fraud detection system may disproportionately flag transactions from low-income neighborhoods if its optimization criteria are not adjusted for fairness.
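One way to check for the ZIP-code problem described above is to measure how well a candidate feature predicts the sensitive attribute on its own. The sketch below assumes categorical features and compares a majority-vote guess per feature value against always guessing the overall majority group. The function name proxy_strength and the toy data are hypothetical, and the in-sample estimate is optimistic for features with many rare values.

```python
from collections import Counter, defaultdict


def proxy_strength(feature_values, sensitive_values):
    """Return (proxy_accuracy, baseline_accuracy) for guessing a sensitive attribute.

    proxy_accuracy: guess the most common group within each feature value.
    baseline_accuracy: always guess the most common group overall.
    A large gap between the two suggests the feature acts as a proxy.
    """
    by_value = defaultdict(Counter)
    for value, group in zip(feature_values, sensitive_values):
        by_value[value][group] += 1
    n = len(sensitive_values)
    proxy_hits = sum(c.most_common(1)[0][1] for c in by_value.values())
    baseline_hits = Counter(sensitive_values).most_common(1)[0][1]
    return proxy_hits / n, baseline_hits / n


# Hypothetical data: two ZIP codes, each dominated by one group.
zip_codes = ["10001"] * 50 + ["20002"] * 50
groups = ["X"] * 45 + ["Y"] * 5 + ["X"] * 5 + ["Y"] * 45
print(proxy_strength(zip_codes, groups))  # (0.9, 0.5)
```

Here the ZIP code recovers the group 90% of the time against a 50% baseline, so a model given this feature could learn group membership even if the sensitive attribute itself is excluded.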

3. Human Bias

AI systems reflect the perspectives of the people who build them. Human bias enters AI pipelines when:

- Developers make assumptions while framing problems or designing models.

- Labeling bias occurs during data annotation—for instance, subjective judgments about what qualifies as “toxic” content in moderation datasets.

- Teams lack diversity, leading to blind spots in identifying fairness gaps.

Example: If annotators label job applications differently based on gendered names, the AI will learn and perpetuate those patterns.

4. Societal Bias

AI is ultimately a mirror of the world we live in. Societal bias happens when:

- Pre-existing inequalities and systemic discrimination are reflected in AI outcomes.

- Data inherits historical unfairness, such as wage gaps, educational inequalities, or biased policing records.

- Algorithms inadvertently reinforce these disparities instead of correcting them.

Example: Predictive policing algorithms trained on crime data often over-target marginalized communities, perpetuating existing cycles of inequality.

Why Identifying Bias Sources Matters

Recognizing the types of AI bias helps organizations:

- Pinpoint root causes before deploying AI models.

- Apply the right bias mitigation techniques at each stage.

- Build fair, explainable, and regulation-compliant AI systems.

By addressing these sources of AI bias, businesses can move closer to creating AI systems that are reliable, ethical, and inclusive.

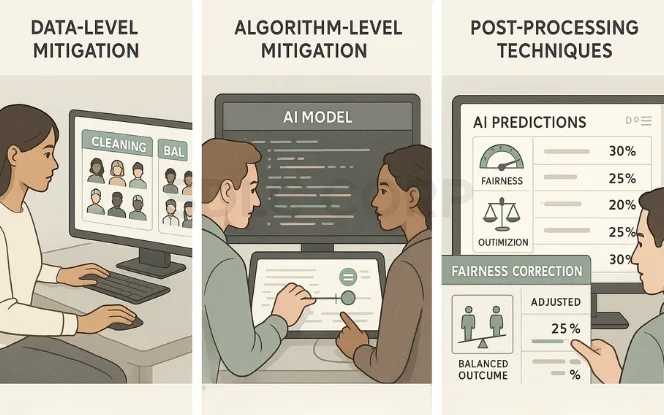

3. Bias Mitigation Techniques in AI Systems

Addressing bias in AI requires a multi-layered strategy. Since bias can originate from data, algorithms, or even model outputs, mitigation techniques must target each stage of the AI pipeline. Below are widely used approaches to bias mitigation in AI, grouped into data-level, algorithm-level, and post-processing techniques.

Data-Level Mitigation

Since biased datasets are one of the primary sources of AI bias, correcting data-related issues is often the first step in building fair AI models.

- Data Balancing (Oversampling & Undersampling)

- Oversampling: Increasing the representation of minority groups to match majority samples.

- Undersampling: Reducing the size of overrepresented classes so they do not dominate training.

- Example: In fraud detection, oversampling rare fraudulent cases ensures the model doesn’t ignore them.

- Synthetic Data Generation (SMOTE, GANs)

- SMOTE (Synthetic Minority Oversampling Technique): Creates synthetic examples of minority classes by interpolating existing data points.

- GANs (Generative Adversarial Networks): Generate realistic synthetic datasets to balance training data.

- Example: GANs can create synthetic medical images of underrepresented conditions for diagnostic AI.

- De-Biasing During Labeling & Preprocessing

- Involves careful annotation guidelines to reduce subjective labeling errors.

- Techniques like text de-biasing can remove gender or racial stereotypes from natural language datasets.

- Example: Adjusting word embeddings to eliminate gendered associations (e.g., “doctor” ≠ “male”).

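The two balancing ideas above can be sketched in plain Python. This is a minimal illustration assuming a binary label and numeric feature tuples. random_oversample and smote_like are hypothetical helper names, and smote_like reproduces only the core interpolation step of SMOTE. In practice, a maintained implementation such as the SMOTE class in imbalanced-learn would normally be preferred.

```python
import random


def random_oversample(rows, labels, minority_label, seed=0):
    """Duplicate random minority rows until both classes match (binary labels assumed)."""
    rng = random.Random(seed)
    minority = [r for r, y in zip(rows, labels) if y == minority_label]
    majority_count = len(labels) - len(minority)
    extra = [rng.choice(minority) for _ in range(majority_count - len(minority))]
    return rows + extra, labels + [minority_label] * len(extra)


def smote_like(minority, n_new, k=2, seed=0):
    """Create synthetic points between a minority point and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Rank the other minority points by squared Euclidean distance to `base`.
        others = sorted(
            (j for j in range(len(minority)) if j != i),
            key=lambda j: sum((a - b) ** 2 for a, b in zip(base, minority[j])),
        )
        neighbour = minority[rng.choice(others[:k])]
        gap = rng.random()  # position along the segment, in [0, 1)
        synthetic.append(tuple(a + gap * (b - a) for a, b in zip(base, neighbour)))
    return synthetic


rows = [(float(i), float(i % 3)) for i in range(10)]
labels = [0] * 8 + [1] * 2
balanced_rows, balanced_labels = random_oversample(rows, labels, minority_label=1)
print(balanced_labels.count(0), balanced_labels.count(1))  # 8 8

minority_points = [(1.0, 1.0), (2.0, 2.0), (3.0, 1.0)]
print(smote_like(minority_points, n_new=3))
```

Random oversampling only repeats existing minority rows, which can encourage overfitting to them. SMOTE-style interpolation instead produces new points that lie between real minority examples.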

Algorithm-Level Mitigation

Even with clean data, algorithms can reinforce or amplify biases. To address this, bias-aware model design is crucial.

- Fairness-Aware Machine Learning Algorithms

- Modify training objectives to optimize for both accuracy and fairness.

- Incorporate fairness constraints (e.g., equal opportunity, demographic parity) into the loss function.

- Adversarial De-Biasing Techniques

- Use adversarial networks to remove sensitive attributes (such as gender or race) from influencing predictions.

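- Example: A predictor model learns the outcome while an adversary model tries to guess the sensitive attribute from the predictor’s output. The predictor is trained to defeat the adversary, which strips sensitive-attribute signals from its predictions.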

- Regularization Methods to Reduce Bias

- Penalize models for disproportionate influence of biased features.

- Example: Adding fairness-based penalties to regression models to reduce dependency on sensitive attributes.

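To make the idea of a fairness term in the loss concrete, here is a minimal sketch of logistic regression trained by gradient descent with an added penalty: lam times the squared gap in mean predicted score between two groups. This is a demographic-parity-style regularizer. The sketch assumes a group label coded 0/1. The function names, learning rate, epoch count, and toy data are illustrative assumptions, and this is only one of many possible formulations, not the method of any particular library.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)


def parity_gap(w, b, X, groups):
    """Mean predicted score of group 1 minus that of group 0."""
    s1 = [predict(w, b, x) for x, g in zip(X, groups) if g == 1]
    s0 = [predict(w, b, x) for x, g in zip(X, groups) if g == 0]
    return sum(s1) / len(s1) - sum(s0) / len(s0)


def train_fair_logreg(X, y, groups, lam=0.0, lr=0.1, epochs=3000):
    """Minimise mean log-loss + lam * parity_gap**2 with plain gradient descent."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    g1 = [i for i in range(n) if groups[i] == 1]
    g0 = [i for i in range(n) if groups[i] == 0]
    for _ in range(epochs):
        p = [predict(w, b, x) for x in X]
        # Gradient of the mean log-loss.
        grad_w = [sum((p[i] - y[i]) * X[i][j] for i in range(n)) / n for j in range(d)]
        grad_b = sum(p[i] - y[i] for i in range(n)) / n
        # Gradient of lam * gap**2, using d p_i / d z_i = p_i * (1 - p_i).
        gap = sum(p[i] for i in g1) / len(g1) - sum(p[i] for i in g0) / len(g0)
        s = [pi * (1 - pi) for pi in p]
        for j in range(d):
            d_gap = (sum(s[i] * X[i][j] for i in g1) / len(g1)
                     - sum(s[i] * X[i][j] for i in g0) / len(g0))
            grad_w[j] += 2 * lam * gap * d_gap
        d_gap_b = sum(s[i] for i in g1) / len(g1) - sum(s[i] for i in g0) / len(g0)
        grad_b += 2 * lam * gap * d_gap_b
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


# Toy data: a single feature that is higher on average for group 1.
X = [[0.0], [0.5], [1.0], [1.5], [2.0], [1.0], [1.5], [2.0], [2.5], [3.0]]
y = [0, 0, 1, 0, 1, 0, 1, 1, 0, 1]
groups = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
for lam in (0.0, 2.0):
    w, b = train_fair_logreg(X, y, groups, lam=lam)
    print(lam, round(parity_gap(w, b, X, groups), 3))
```

Raising lam shrinks the gap in average scores at the cost of some fit to the labels. This is the same accuracy-fairness tension that appears again when thresholds are adjusted.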

Post-Processing Techniques

When neither data nor algorithm-level fixes fully eliminate bias, post-processing methods can help adjust predictions for fairness.

- Re-Weighting Predictions for Fairness

- Assign different weights to model outputs to ensure balanced representation across demographic groups.

- Threshold Adjustments for Equal Opportunity

- Modify classification thresholds per group so that qualified individuals in every group have the same chance of acceptance (equal true positive rates).

- Example: In credit scoring, adjust the threshold so approval rates are not unfairly low for certain communities.

- Calibration Across Demographic Groups

- Ensure probability scores are equally reliable across all demographics.

- Example: A risk prediction score of 0.8 should imply the same likelihood of an event, regardless of the person’s gender or race.

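Threshold adjustment for equal opportunity can be sketched as a search for a per-group cut-off that reaches a target true positive rate. The sketch assumes scores in [0, 1], binary labels, and at least one positive example per group. The function names, the 0.8 target, and the scores are hypothetical. Fairlearn's ThresholdOptimizer is one maintained tool that performs a more principled version of this search.

```python
def true_positive_rate(scores, labels, threshold):
    """Share of actual positives whose score is at or above the threshold."""
    positive_scores = [s for s, y in zip(scores, labels) if y == 1]
    return sum(s >= threshold for s in positive_scores) / len(positive_scores)


def equal_opportunity_thresholds(scores, labels, groups, target_tpr=0.8):
    """Pick, for each group, the highest threshold whose TPR reaches target_tpr."""
    thresholds = {}
    for g in sorted(set(groups)):
        g_scores = [s for s, gg in zip(scores, groups) if gg == g]
        g_labels = [y for y, gg in zip(labels, groups) if gg == g]
        # TPR can only rise as the threshold falls, so scan candidate
        # thresholds (the observed scores) from highest to lowest.
        for t in sorted(set(g_scores), reverse=True):
            if true_positive_rate(g_scores, g_labels, t) >= target_tpr:
                thresholds[g] = t
                break
    return thresholds


# Hypothetical scores: the model ranks group B's positives lower than group A's.
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.2,   # group A
          0.7, 0.6, 0.5, 0.4, 0.3, 0.2, 0.1]   # group B
labels = [1, 1, 1, 1, 1, 0, 0,
          1, 1, 1, 1, 1, 0, 0]
groups = ["A"] * 7 + ["B"] * 7

print(equal_opportunity_thresholds(scores, labels, groups))  # {'A': 0.6, 'B': 0.4}
```

With a single threshold of 0.5, group A's true positive rate would be 1.0 but group B's only 0.6. The per-group thresholds bring both groups to 0.8.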

Why Multi-Layered Mitigation Works

No single technique guarantees fairness. Combining data-level, algorithm-level, and post-processing approaches instead tends to deliver:

- More robust fairness outcomes

- Better compliance with AI regulations

- Stronger trust from customers and stakeholders

By applying these bias mitigation strategies, organizations can create responsible AI systems that balance performance with fairness.

4. Tools and Frameworks for Bias Detection & Mitigation

As AI adoption grows, organizations need practical tools and frameworks to detect, measure, and mitigate bias. Fortunately, the AI community has developed a range of fairness toolkits, metrics, and best practices to support responsible AI development.

Fairness Toolkits

Several open-source frameworks allow developers to audit AI systems for fairness and apply corrective measures:

- IBM AI Fairness 360 (AIF360)

- A comprehensive Python library offering over 70 fairness metrics and 10 bias mitigation algorithms.

- Includes pre-processing, in-processing, and post-processing techniques for machine learning pipelines.

- Best suited for research and enterprise-scale AI audits.

- Google What-If Tool

- An interactive tool for visualizing and probing ML models with minimal coding.

- Helps compare model performance across demographic groups and identify potential biases in predictions.

- Useful for model debugging and fairness evaluation during experimentation.

- Microsoft Fairlearn

- A fairness-focused Python package that provides fairness metrics and bias mitigation algorithms.

- Supports mitigation techniques such as grid search and exponentiated gradient with fairness constraints, plus threshold-based post-processing.

- Widely used in enterprise AI projects for monitoring ethical compliance.

Metrics for Fairness

To quantify and address bias, organizations rely on mathematical fairness metrics. The most common include:

- Demographic Parity – Requires that the rate of positive outcomes (e.g., approval rates) is equal across demographic groups.

- Equalized Odds – Requires that true positive and false positive rates are similar across groups.

- Predictive Parity – Requires that positive predictions are equally precise across groups: among people flagged as positive (e.g., high risk), the share who truly are positive should be the same in every group.

- Example: In loan approvals, demographic parity ensures that approval rates are not disproportionately skewed against certain communities.
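The three metrics above can be computed from per-group confusion counts. The sketch assumes binary labels and predictions. The helper names and toy data are hypothetical. Libraries such as Fairlearn provide maintained equivalents (for example, demographic_parity_difference and equalized_odds_difference in fairlearn.metrics), which also report the gap as the largest minus the smallest group value.

```python
def group_rates(y_true, y_pred, groups):
    """Selection rate, TPR, FPR and precision (PPV) for each group."""
    rates = {}
    for g in sorted(set(groups)):
        idx = [i for i, gg in enumerate(groups) if gg == g]
        tp = sum(1 for i in idx if y_true[i] == 1 and y_pred[i] == 1)
        fp = sum(1 for i in idx if y_true[i] == 0 and y_pred[i] == 1)
        pos = sum(1 for i in idx if y_true[i] == 1)
        neg = len(idx) - pos
        selected = tp + fp
        nan = float("nan")  # marks an undefined rate; filter these before comparing
        rates[g] = {
            "selection_rate": selected / len(idx),
            "tpr": tp / pos if pos else nan,
            "fpr": fp / neg if neg else nan,
            "ppv": tp / selected if selected else nan,
        }
    return rates


def gap(rates, key):
    """Largest minus smallest value of a metric across groups."""
    values = [r[key] for r in rates.values()]
    return max(values) - min(values)


y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4
rates = group_rates(y_true, y_pred, groups)

demographic_parity_gap = gap(rates, "selection_rate")            # 0.75 - 0.25
equalized_odds_gap = max(gap(rates, "tpr"), gap(rates, "fpr"))   # both gaps are 0.5
predictive_parity_gap = gap(rates, "ppv")                        # 1.0 - 2/3
print(demographic_parity_gap, equalized_odds_gap, round(predictive_parity_gap, 3))  # 0.5 0.5 0.333
```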

Industry Practices

Beyond tools and metrics, leading organizations integrate bias detection and mitigation into governance frameworks:

- Model Audits

- Regular internal and third-party audits to evaluate models for fairness, transparency, and compliance.

- Ethical AI Guidelines

- Clear policies outlining acceptable practices for data collection, model design, and deployment.

- Example: Google’s AI Principles and Microsoft’s Responsible AI Standard.

- Explainable AI Integration (XAI)

- Using explainability techniques such as LIME, SHAP, or counterfactual analysis to make AI decisions more transparent and accountable.

Why These Tools Matter

By leveraging fairness toolkits, metrics, and ethical practices, businesses can:

- Detect hidden biases early in development.

- Ensure regulatory compliance with AI fairness laws.

- Build trustworthy, inclusive AI systems that enhance customer confidence.

In short, adopting these tools and frameworks helps ensure that AI is not just intelligent, but also fair, ethical, and socially responsible. However, applying these solutions in real-world settings comes with challenges.

5. Challenges in Bias Mitigation

While bias mitigation in AI has made significant progress, several practical and technical challenges remain. Achieving fairness, transparency, and accountability is not a one-time fix but a continuous process that requires balancing multiple factors.

Trade-Off Between Accuracy and Fairness

- Improving fairness often comes at the expense of model performance.

- For example, adjusting thresholds to ensure equal opportunity may slightly reduce predictive accuracy.

- Businesses face the dilemma of deciding whether to maximize accuracy for efficiency or sacrifice some accuracy for ethical fairness.

- Striking this balance is one of the most persistent challenges in responsible AI adoption.
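A toy calculation illustrates the trade-off. The scores, thresholds, and the evaluate helper below are hypothetical. Lowering group B's threshold so both groups reach the same true positive rate closes the equal opportunity gap. In this data, however, it also costs one extra misclassification.

```python
def evaluate(scores, labels, groups, thresholds):
    """Overall accuracy and per-group TPR for group-specific thresholds."""
    preds = [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
    tpr = {}
    for g in thresholds:
        pos = [p for p, y, gg in zip(preds, labels, groups) if gg == g and y == 1]
        tpr[g] = sum(pos) / len(pos)
    return accuracy, tpr


scores = [0.9, 0.8, 0.3, 0.2,          # group A
          0.7, 0.35, 0.4, 0.38, 0.1]   # group B
labels = [1, 1, 0, 0,
          1, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 5

single = evaluate(scores, labels, groups, {"A": 0.5, "B": 0.5})
adjusted = evaluate(scores, labels, groups, {"A": 0.5, "B": 0.35})
print(single)    # accuracy 8/9, TPR A=1.0, B=0.5
print(adjusted)  # accuracy 7/9, TPR A=1.0, B=1.0
```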

Regulatory and Ethical Complexities

- Global regulations around AI fairness are still evolving and fragmented.

- The EU AI Act, the U.S. Blueprint for an AI Bill of Rights, and India’s AI ethics guidelines take different approaches, ranging from binding obligations to voluntary principles.

- Ethical expectations from customers often go beyond legal mandates, creating additional layers of responsibility.

- Organizations must navigate this complex regulatory landscape while maintaining global scalability of AI systems.

Technical Limitations of Current Methods

- Existing bias detection algorithms may fail to capture intersectional bias (e.g., race + gender combined).

- Fairness metrics can conflict with one another: achieving one (e.g., demographic parity) may violate another (e.g., predictive parity), especially when base rates differ between groups.

- Synthetic data generation and adversarial de-biasing are still imperfect, sometimes introducing new risks.

- Current tools are effective but not foolproof, requiring continuous improvement and validation.
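The intersectional blind spot can be shown with a small hypothetical dataset. Here, selection rates look identical when split by race alone or by gender alone, yet they differ sharply across race-gender combinations. The group labels, counts, and the selection_rates helper are illustrative assumptions.

```python
from collections import defaultdict


def selection_rates(records, keys):
    """Selection rate for every combination of values of the given attribute keys."""
    totals, selected = defaultdict(int), defaultdict(int)
    for r in records:
        group = tuple(r[k] for k in keys)
        totals[group] += 1
        selected[group] += r["selected"]
    return {g: selected[g] / totals[g] for g in totals}


# Hypothetical outcomes: 10 people per subgroup, with this many selected.
selected_counts = {("r1", "m"): 8, ("r1", "f"): 2, ("r2", "m"): 2, ("r2", "f"): 8}
records = [
    {"race": race, "gender": gender, "selected": int(i < k)}
    for (race, gender), k in selected_counts.items()
    for i in range(10)
]

print(selection_rates(records, ["race"]))            # {('r1',): 0.5, ('r2',): 0.5}
print(selection_rates(records, ["gender"]))          # {('m',): 0.5, ('f',): 0.5}
print(selection_rates(records, ["race", "gender"]))  # 0.8 vs 0.2 across subgroups
```

An audit that checks only single attributes would report no disparity here. Checking combinations reveals a 0.6 gap.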

Why These Challenges Matter

Overcoming these challenges is critical to:

- Ensure trust and adoption of AI solutions across industries.

- Build regulation-ready systems that minimize compliance risks.

- Create long-term sustainable AI practices that balance innovation with responsibility.

Bias mitigation is not a one-time project; it is a continuous journey of monitoring, adapting, and improving AI systems.

Advisory and Governance Frameworks for Bias Mitigation

Effective bias mitigation requires clear governance structures, including accountability, review processes, and documentation standards. Advisory input supports policy design and compliance alignment. An AI consulting company may assist organizations in defining ethical AI guidelines and audit frameworks.

6. Best Practices for Organizations

For businesses deploying AI solutions, bias mitigation is not just a technical safeguard—it’s a strategic priority. To build fair, transparent, and regulation-ready systems, organizations must adopt best practices that span the entire AI lifecycle.

Diverse Data Collection Strategies

- Ensure datasets are representative of all demographic groups.

- Proactively identify and fill gaps by sourcing data from varied geographies, age groups, income brackets, and cultural contexts.

- Use data augmentation and synthetic generation carefully to balance datasets without introducing artificial distortions.

- Outcome: More inclusive data leads to robust models that generalize fairly across populations.

Inclusive AI Development Teams

- Build teams with diverse backgrounds—technical, cultural, gender, and disciplinary.

- Diverse perspectives reduce blind spots and uncover bias risks earlier in the design process.

- Encourage ethics-driven culture where fairness and transparency are treated as core design principles, not afterthoughts.

- Outcome: More balanced decision-making and fewer unintentional biases in AI pipelines.

Continuous Monitoring and Auditing of AI Systems

- Bias is not static; it can evolve as models encounter new data.

- Implement real-time monitoring dashboards to track fairness metrics (e.g., demographic parity, equalized odds).

- Conduct regular internal and external audits to validate AI fairness against compliance frameworks.

- Outcome: Bias is detected and corrected early, minimizing business and regulatory risks.
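Continuous fairness monitoring can be as simple as tracking selection rates over a sliding window of recent decisions. The sketch below is hypothetical. The ParityMonitor class, the window of 100 decisions, and the 0.1 alert threshold are assumptions chosen for illustration, not recommended values.

```python
from collections import deque


class ParityMonitor:
    """Tracks per-group selection rates over the most recent `window` decisions."""

    def __init__(self, window=100, max_gap=0.1):
        self.decisions = deque(maxlen=window)  # old decisions drop off automatically
        self.max_gap = max_gap

    def record(self, group, selected):
        self.decisions.append((group, int(selected)))

    def gap(self):
        """Demographic parity gap (max minus min selection rate) in the window."""
        totals, chosen = {}, {}
        for group, selected in self.decisions:
            totals[group] = totals.get(group, 0) + 1
            chosen[group] = chosen.get(group, 0) + selected
        rates = [chosen[g] / totals[g] for g in totals]
        return max(rates) - min(rates) if len(rates) > 1 else 0.0

    def alert(self):
        return self.gap() > self.max_gap


monitor = ParityMonitor()
# Stable period: both groups are selected half of the time.
for i in range(100):
    monitor.record("A" if i % 2 == 0 else "B", (i // 2) % 2 == 0)
print(monitor.gap(), monitor.alert())  # 0.0 False

# Drift: group A is now always selected and group B never is.
for i in range(100):
    group = "A" if i % 2 == 0 else "B"
    monitor.record(group, group == "A")
print(monitor.gap(), monitor.alert())  # 1.0 True
```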

Transparency and Explainability in Decision-Making

- Adopt Explainable AI (XAI) techniques such as LIME, SHAP, or counterfactual explanations to clarify model behavior.

- Provide clear documentation on data sources, model assumptions, and limitations.

- Communicate AI decisions in a way that stakeholders, regulators, and end-users can understand.

- Outcome: Greater trust, accountability, and compliance across the AI ecosystem.
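One lightweight form of the counterfactual analysis mentioned above is a flip test: change only the sensitive attribute of each record and count how often the model's decision changes. The models below are deliberately simple and entirely hypothetical, as are the field names. A real audit would call the production model and would also need to account for proxies that move together with the sensitive attribute.

```python
def counterfactual_flip_rate(model, records, attribute, swap):
    """Fraction of records whose prediction changes when only `attribute` is swapped."""
    changed = 0
    for record in records:
        flipped = dict(record)
        flipped[attribute] = swap[record[attribute]]
        changed += model(record) != model(flipped)
    return changed / len(records)


def toy_model(record):
    # Hypothetical model that (wrongly) adds points for one gender.
    score = record["years_experience"] + (2 if record["gender"] == "m" else 0)
    return score >= 5


def blind_model(record):
    # Same kind of rule without the gender term, for comparison.
    return record["years_experience"] >= 4


records = [{"years_experience": y, "gender": g}
           for y, g in zip(range(1, 7), ["m", "f"] * 3)]
swap = {"m": "f", "f": "m"}
print(counterfactual_flip_rate(toy_model, records, "gender", swap))    # 2 of 6 records change
print(counterfactual_flip_rate(blind_model, records, "gender", swap))  # 0.0
```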

Why Best Practices Matter

By embedding these practices into their workflows, organizations can:

- Strengthen trust with customers and regulators

- Improve model fairness and long-term performance

- Ensure AI systems are ethical, inclusive, and future-proof

Bias mitigation is not a single intervention; it’s a culture of responsibility that must be nurtured across teams, tools, and strategies.

Conclusion

Bias in AI is not just a technical issue—it directly affects fairness, trust, and accountability. From unbalanced datasets to algorithmic flaws and societal inequalities, bias can influence every stage of an AI system. Without proper mitigation, organizations risk discrimination, reputational damage, and regulatory penalties. The future of AI depends on building systems that are not only intelligent but also fair and transparent. Achieving this requires strong policies, ethical design practices, and continuous human oversight. As governments and industries push for responsible AI, organizations that embed fairness and explainability into their workflows will be best positioned to lead in the era of trustworthy, inclusive AI. For tailored strategies that ensure ethical and responsible AI adoption, explore our AI Development Services and prepare to lead in the future of responsible AI.

FAQs

1. What is bias in AI and why is it dangerous?

Bias in AI refers to unfair or skewed outcomes caused by flawed data or algorithms. It is dangerous because it leads to discrimination, loss of trust, and regulatory risks.

2. Can bias in AI be completely eliminated?

Bias cannot be fully eliminated, but it can be minimized with data balancing, fairness-aware algorithms, and post-processing techniques.

3. How do companies test AI systems for fairness?

Companies use fairness metrics, bias detection toolkits, and model audits to measure and correct unfair outcomes.

4. Which industries are most vulnerable to AI bias?

Healthcare, finance, HR, and law enforcement face the highest risks due to their reliance on sensitive decision-making.

5. What role does regulation play in bias mitigation?

Regulations like the EU AI Act enforce fairness, transparency, and accountability, requiring audits and compliance frameworks.

6. What are some widely used tools for bias detection in AI?

Popular tools include IBM AI Fairness 360, Google What-If Tool, and Microsoft Fairlearn for bias detection and mitigation.