Introduction

Artificial Intelligence (AI) is no longer experimental; it is transforming healthcare, finance, HR, and cybersecurity. However, black-box models often create challenges such as biased outcomes, limited transparency, and regulatory risks. Therefore, many organizations are turning to AI development services that embed Ethical AI principles and adopt Explainable AI (XAI) techniques.

This article examines how Ethical AI, Explainable AI, trustworthy AI models, and AI transparency fit together. It covers technical methods such as SHAP, LIME, and counterfactual explanations, along with compliance frameworks including the EU AI Act and the NIST AI Risk Management Framework (AI RMF), both of which shape how transparent and trustworthy AI systems are built.

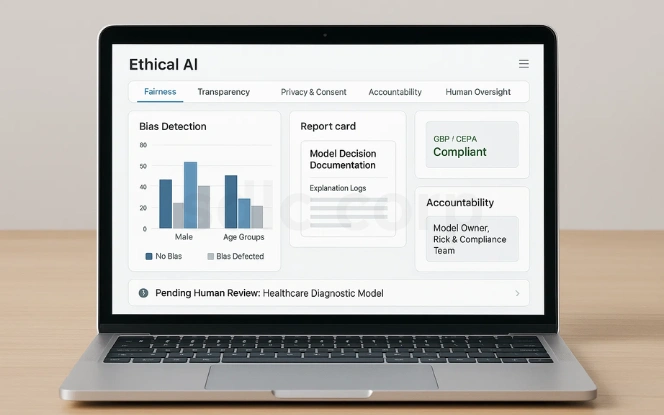

Ethical AI: Principles for Fairness and Accountability

Ethical AI lays the foundation for responsible AI adoption. It ensures models don’t just optimize for performance but also respect fairness, transparency, and accountability. Without these principles, AI can reinforce biases, violate regulations, erode user trust, and increase the likelihood of compliance failures.

Key Principles:

- Fairness: Bias detection and mitigation in training datasets to prevent discrimination.

- Transparency: Clear documentation and interpretable predictions that stakeholders can understand.

- Privacy & Consent: Use of personal data only with consent and in compliance with laws like GDPR.

- Accountability: Defined ownership of model outcomes to avoid blame-shifting.

- Human Oversight: Human review for high-stakes use cases like healthcare diagnostics or credit scoring.

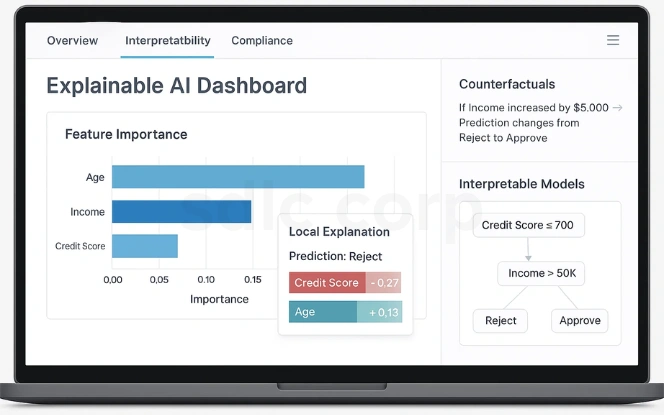

Explainable AI (XAI): Making AI Transparent

Explainable AI focuses on making model behavior interpretable. Instead of treating models as “black boxes,” XAI gives developers, regulators, and end users mechanisms to understand why and how a decision was made.

Popular Techniques:

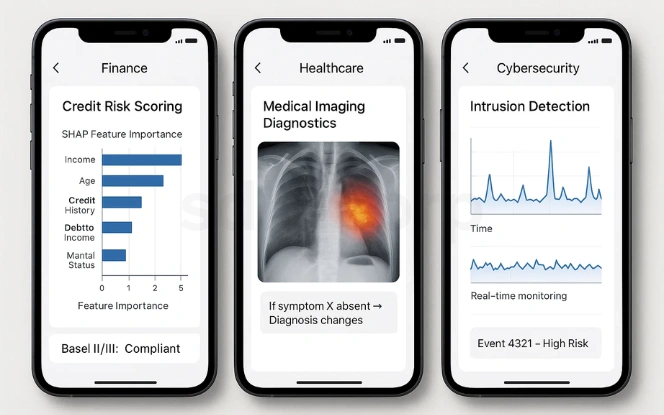

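- SHAP: A game-theoretic approach based on Shapley values that attributes each prediction to individual feature contributions. It works locally (one prediction) and globally (aggregated over many predictions).

- LIME: Perturbs the inputs around a single instance and fits a simple surrogate model to approximate the decision boundary there, which makes it useful for explaining local predictions.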

- Counterfactual Explanations: Provide “what-if” scenarios, helping users see minimal changes needed for different outcomes.

- Interpretable Machine Learning Techniques: Models like decision trees and logistic regression offer inherent transparency.
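To make the game-theoretic idea behind SHAP concrete, here is a minimal sketch that computes exact Shapley values by enumerating every feature coalition. It does not use the `shap` library, which relies on much faster approximations. The scoring function, feature names, and baseline values are hypothetical and chosen only for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values for a single prediction.

    Features outside a coalition are set to their baseline value.
    The cost grows as 2^n, so this only suits a handful of features.
    """
    features = list(instance)
    n = len(features)
    values = {}
    for target in features:
        others = [f for f in features if f != target]
        total = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                without = {
                    f: (instance[f] if f in coalition else baseline[f])
                    for f in features
                }
                with_target = dict(without)
                with_target[target] = instance[target]
                total += weight * (predict(with_target) - predict(without))
        values[target] = total
    return values


def toy_credit_score(x):
    # Hypothetical model with an interaction term, for illustration only.
    return 0.5 * x["income"] - 0.3 * x["debt"] + 0.1 * x["income"] * x["tenure"]


applicant = {"income": 4.0, "debt": 2.0, "tenure": 3.0}
baseline = {"income": 1.0, "debt": 1.0, "tenure": 1.0}

phi = shapley_values(toy_credit_score, applicant, baseline)
for name, contribution in phi.items():
    print(f"{name}: {contribution:+.3f}")
```

The contributions sum to the difference between the applicant's score and the baseline score. That additivity is what lets the same values serve as a local explanation for one prediction and, once averaged over many predictions, as a global feature ranking.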

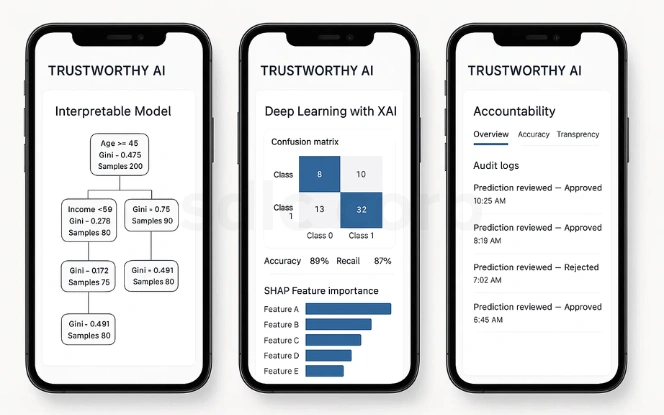

Trustworthy AI Models: Balancing Accuracy and Transparency

Trustworthy AI models strike the right balance between interpretability and performance. Simpler models are easier to understand, but they may not deliver high accuracy on complex tasks. Deep learning models often perform better on those tasks but require post-hoc explanation tools to maintain accountability.

Trustworthy AI also involves:

- Interpretable Models (decision trees, logistic regression): Naturally explainable but limited in handling complex data.

- Deep Learning Models (CNNs, transformers): High performance but opaque in reasoning.

- Hybrid Approach: Combine complex models with SHAP or LIME explanations for both accuracy and transparency.

- AI Accountability: Use of dashboards, logs, and audits to ensure governance.
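The hybrid approach can be sketched as a LIME-style local surrogate: sample points around one instance, weight them by proximity, and fit a linear model whose coefficients act as the explanation. This is a simplified illustration of the idea rather than the `lime` package itself, which adds interpretable feature representations and feature selection. The black-box function, sampling scale, and kernel width are assumptions.

```python
import math
import random


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def local_surrogate(predict, instance, n_samples=500, scale=0.5,
                    kernel_width=1.0, seed=0):
    """Fit a proximity-weighted linear model around `instance`.

    Returns (intercept, coefficients); the coefficients are the explanation.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        dist_sq = sum((s - v) ** 2 for s, v in zip(sample, instance))
        rows.append([1.0] + sample)  # leading 1.0 is the intercept column
        targets.append(predict(sample))
        weights.append(math.exp(-dist_sq / kernel_width ** 2))
    # Weighted normal equations: (X^T W X) beta = X^T W y
    k = len(instance) + 1
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for r, w, y in zip(rows, weights, targets))
            for i in range(k)]
    beta = solve_linear_system(xtwx, xtwy)
    return beta[0], beta[1:]


def black_box(x):
    # Hypothetical nonlinear model standing in for a deep network.
    return 1.0 / (1.0 + math.exp(-(2.0 * x[0] - x[1] ** 2)))


intercept, coefs = local_surrogate(black_box, [0.5, 1.0])
print("local feature effects:", [round(c, 3) for c in coefs])
```

The surrogate is only valid near the chosen instance, which is the trade-off the hybrid approach accepts: the complex model keeps its accuracy, and the explanation is a local approximation, not a description of the whole model.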

Compliance & Governance Frameworks

EU AI Act: Risk-Based Regulation

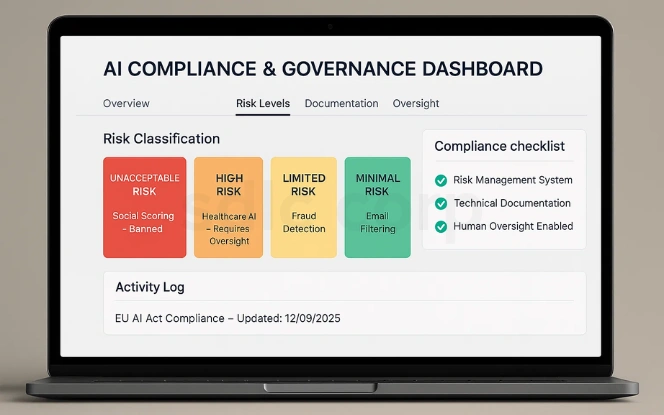

The EU AI Act introduces a comprehensive legal structure for AI. It classifies systems into four risk levels:

- Unacceptable Risk: Social scoring and manipulative AI are banned outright.

- High Risk: Healthcare diagnostics, credit scoring, and biometric ID face strict obligations for transparency, documentation, and human oversight.

- Limited Risk: Chatbots and recommendation engines must disclose AI involvement.

- Minimal Risk: Games and spam filters face minimal requirements.

For high-risk systems, organizations must:

- Maintain risk management systems.

- Provide detailed technical documentation.

- Ensure human oversight for critical decisions.
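One way to put the tiers to work internally is a small triage table that maps each system in an AI inventory to a tier and its obligations. The sketch below mirrors only the examples listed above. The use-case keys and the `triage` helper are hypothetical, and real classification under the Act depends on legal review of the specific system.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations as summarized in this article, not the full legal text.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["do not deploy"],
    RiskTier.HIGH: [
        "risk management system",
        "technical documentation",
        "human oversight",
    ],
    RiskTier.LIMITED: ["disclose AI involvement"],
    RiskTier.MINIMAL: [],
}

# Hypothetical inventory keys, taken from the examples in the list above.
EXAMPLE_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "healthcare_diagnostics": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case):
    """Return (tier, obligations) for a known use case, else raise KeyError."""
    tier = EXAMPLE_TIERS.get(use_case)
    if tier is None:
        raise KeyError(f"{use_case!r} is not in the inventory; classify it manually")
    return tier, OBLIGATIONS[tier]


tier, duties = triage("credit_scoring")
print(tier.value, duties)
```

Raising an error for unknown use cases, instead of defaulting to minimal risk, keeps unclassified systems from slipping through without review.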

Advisory and Governance for Ethical AI

Ethical AI requires governance frameworks that define accountability, review processes, and escalation paths. Advisory input helps align AI usage with legal and ethical expectations. An AI consulting company may support organizations in defining responsible AI policies and oversight mechanisms.

NIST AI Risk Management Framework (AI RMF)

The NIST AI RMF provides practical, voluntary guidance for building trustworthy AI in enterprises. It is structured into four core functions:

- Govern: Define accountability, assign roles (Data Stewards, Model Owners).

- Map: Identify risks, context, and stakeholders.

- Measure: Evaluate system performance, robustness, bias, and explainability.

- Manage: Mitigate risks, implement monitoring, and update governance practices.

This framework helps enterprises integrate explainability as part of risk management, not just a technical add-on.

Industry-Specific Guidelines

- AI Governance in Finance: Financial regulators and standard setters such as the Basel Committee increasingly expect explainability in credit risk models. SHAP is a common way to provide feature-level justifications.

- Ethical AI in Healthcare: WHO guidance on AI for health emphasizes transparency and explainability. Saliency maps and counterfactuals help clinicians check the fairness and accuracy of medical imaging predictions.

- Cybersecurity Standards: Explainability helps analysts working with intrusion detection systems distinguish false positives from real threats.

Enterprise Strategy: Implementing Explainable & Ethical AI

Deploying explainable and ethical AI requires a structured approach across the machine learning lifecycle.

Recommended Strategy:

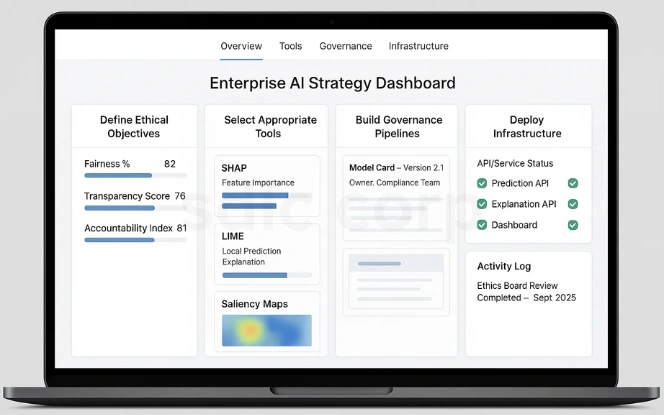

- Define Ethical Objectives: Establish measurable KPIs for fairness, transparency, and accountability.

- Select Appropriate Tools: Use SHAP for gradient boosting models, LIME for model-agnostic explanations, and saliency maps for vision tasks.

- Build Governance Pipelines: Maintain detailed documentation (Model Cards, Data Sheets) and prediction logs, and assign accountability to AI ethics boards.

- Deploy Infrastructure: Integrate explanation APIs with prediction services and create dashboards for business and regulatory teams.
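The governance and infrastructure steps can be sketched as a prediction service that records every decision together with its explanation and the model version that produced it. The field names, the linear toy model, and the in-memory log below are assumptions made for illustration. A production system would write to durable, access-controlled storage.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass
class ModelCard:
    name: str
    version: str
    owner: str
    intended_use: str
    fairness_metrics: dict = field(default_factory=dict)


@dataclass
class PredictionRecord:
    model_name: str
    model_version: str
    inputs: dict
    prediction: float
    attributions: dict
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def explain_and_log(card, inputs, predict, explain, log):
    """Score one request and append an auditable JSON record to `log`."""
    record = PredictionRecord(
        card.name, card.version, inputs, predict(inputs), explain(inputs)
    )
    log.append(json.dumps(asdict(record), sort_keys=True))
    return record


# Hypothetical linear model; for a linear model, weight * value is an
# exact per-feature attribution.
WEIGHTS = {"income_k": 0.02, "debt_k": -0.05}


def predict(inputs):
    return sum(WEIGHTS[k] * v for k, v in inputs.items())


def explain(inputs):
    return {k: WEIGHTS[k] * v for k, v in inputs.items()}


card = ModelCard(
    name="credit-scorer",
    version="1.2.0",
    owner="model-owner@example.com",
    intended_use="Pre-screening of consumer credit applications",
)
audit_log = []
record = explain_and_log(
    card, {"income_k": 50, "debt_k": 20}, predict, explain, audit_log
)
print(audit_log[0])
```

Storing the attribution next to the prediction means a dashboard or an auditor can later reconstruct why a decision was made without re-running a model version that may since have been retired.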

Use Cases Across Industries

Explainability and ethics are not optional. They are essential in high-stakes domains where decisions directly affect human lives.

Industry Applications:

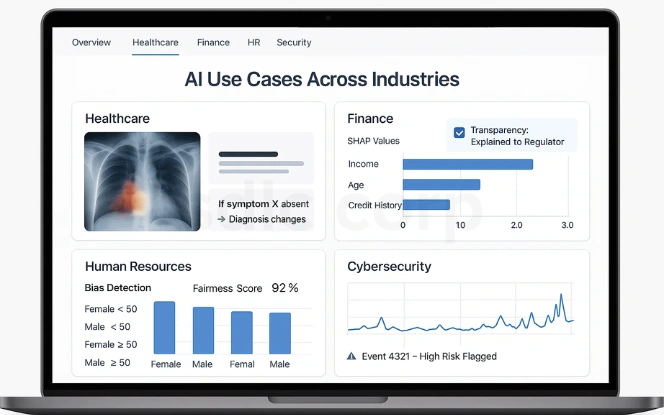

- Healthcare: Saliency maps validate medical imaging predictions, while counterfactuals explain treatment decisions to patients.

- Finance: SHAP values explain credit scoring outcomes, providing transparency to customers and regulators.

- Human Resources: Bias detection ensures AI-based hiring tools treat candidates fairly.

- Cybersecurity: Rule extraction helps detect anomalies in intrusion detection systems, offering both accuracy and explainability.
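As an illustration of counterfactual explanations in a credit setting, the sketch below searches a small grid of allowed changes for the cheapest one that flips a rejection into an approval. The scoring rule, candidate changes, and per-unit effort weights are all hypothetical. Practical counterfactual tools also enforce plausibility and immutability constraints that this brute-force search ignores.

```python
from itertools import product


def approve(applicant):
    # Hypothetical integer scoring rule, not a real credit model.
    score = (2 * applicant["income_k"]
             - 3 * applicant["debt_k"]
             + 10 * applicant["years_employed"])
    return score >= 100


def find_counterfactual(instance, decide, candidate_deltas, effort):
    """Return (changes, cost) for the cheapest change that makes decide() true.

    Returns (None, inf) if no combination of candidate changes succeeds.
    """
    names = list(candidate_deltas)
    best_change, best_cost = None, float("inf")
    for deltas in product(*(candidate_deltas[n] for n in names)):
        changed = dict(instance)
        for name, delta in zip(names, deltas):
            changed[name] += delta
        if not decide(changed):
            continue
        cost = sum(abs(d) * effort[n] for n, d in zip(names, deltas))
        if cost < best_cost:
            best_cost = cost
            best_change = {n: d for n, d in zip(names, deltas) if d != 0}
    return best_change, best_cost


applicant = {"income_k": 50, "debt_k": 20, "years_employed": 3}
candidate_deltas = {
    "income_k": [0, 5, 10, 15],
    "debt_k": [0, -5, -10],
    "years_employed": [0, 1, 2],
}
# Assumed relative difficulty of changing each feature by one unit.
effort = {"income_k": 1.0, "debt_k": 0.5, "years_employed": 4.0}

change, cost = find_counterfactual(applicant, approve, candidate_deltas, effort)
print(change, cost)
```

For this applicant the cheapest option found is reducing debt by 10 (thousand), which is the kind of actionable statement a customer or regulator can verify.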

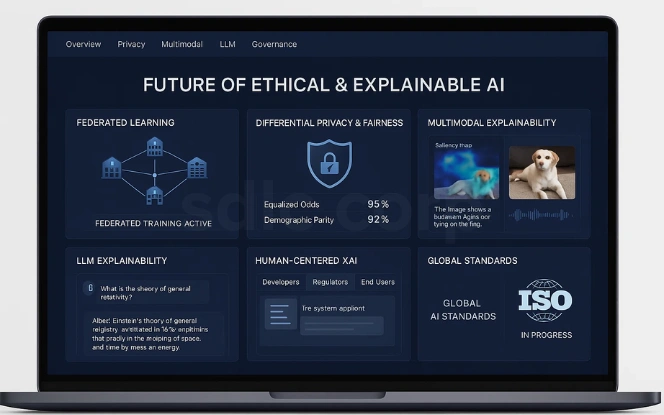

Future of Ethical & Explainable AI

AI technology is rapidly evolving, and so are the expectations for ethics and explainability. Looking ahead, the next wave will make models not only interpretable but also privacy-preserving, context-aware, and user-centric.

1. Federated Learning with Explainability

Instead of centralizing data, federated learning allows models to train on decentralized datasets (e.g., hospitals, banks) without data sharing. Future XAI tools will need to provide transparency across distributed systems.
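The training pattern described here can be sketched with federated averaging: each site trains on its own data, and only model weights, never raw records, are sent to a server that averages them. The two "hospital" datasets, the linear model, and the hyperparameters are toy assumptions. The sketch covers the training loop only, not the explainability layer that future tools would add on top.

```python
def local_train(weights, data, lr=0.1, steps=5):
    """Run a few gradient-descent steps of y = w*x + b on one client's data."""
    w, b = weights
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return [w, b]


def federated_average(updates):
    """Average client weights, weighted by each client's example count."""
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(dim)]


# Hypothetical private datasets; both follow y = 2x + 1 but cover
# different ranges of x. The raw points never leave the client.
clients = {
    "hospital_a": [(x, 2.0 * x + 1.0) for x in (-2.0, -1.0, 0.0, 1.0)],
    "hospital_b": [(x, 2.0 * x + 1.0) for x in (-1.0, 0.0, 1.0, 2.0)],
}

global_weights = [0.0, 0.0]
for _ in range(30):
    updates = [
        (local_train(global_weights, data), len(data))
        for data in clients.values()
    ]
    global_weights = federated_average(updates)

print([round(v, 3) for v in global_weights])
```

Because the server only ever sees weight vectors, any explanation method applied centrally has to work without access to the underlying records, which is the transparency challenge the paragraph above points to.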

2. Differential Privacy & Fairness Audits

Ethical AI will increasingly use differential privacy to protect individual data points, while fairness audits check models against metrics such as equalized odds and demographic parity.
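Both ideas can be expressed in a few lines. The sketch below computes the demographic parity difference (gap in positive-prediction rates between groups) and the equalized odds difference (largest gap in true-positive or false-positive rates), then releases a count with Laplace noise as a basic differential privacy mechanism. The labels, predictions, group assignments, and privacy budget are made-up illustration data.

```python
import random


def demographic_parity_difference(y_pred, groups):
    """Gap between the highest and lowest positive-prediction rate per group."""
    rates = []
    for g in sorted(set(groups)):
        preds = [p for p, gg in zip(y_pred, groups) if gg == g]
        rates.append(sum(preds) / len(preds))
    return max(rates) - min(rates)


def equalized_odds_difference(y_true, y_pred, groups):
    """Largest between-group gap in true-positive or false-positive rate.

    Assumes every group contains both positive and negative true labels.
    """
    def rate(group, label):
        preds = [p for t, p, g in zip(y_true, y_pred, groups)
                 if g == group and t == label]
        return sum(preds) / len(preds)

    names = sorted(set(groups))
    tpr = [rate(g, 1) for g in names]
    fpr = [rate(g, 0) for g in names]
    return max(max(tpr) - min(tpr), max(fpr) - min(fpr))


def laplace_count(true_count, epsilon, rng):
    """Release a count with Laplace noise of scale 1/epsilon (sensitivity 1).

    The difference of two exponential draws with rate epsilon is
    Laplace-distributed with scale 1/epsilon.
    """
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


# Made-up audit data: 1 = positive outcome (e.g. approved).
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 0, 0, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print("demographic parity difference:",
      demographic_parity_difference(y_pred, groups))
print("equalized odds difference:",
      round(equalized_odds_difference(y_true, y_pred, groups), 3))
print("private approval count:",
      round(laplace_count(sum(y_pred), epsilon=0.5, rng=random.Random(0)), 2))
```

A value of 0 on either fairness metric means the groups are treated identically by that measure. A smaller epsilon adds more noise and gives stronger privacy, at the cost of less accurate released statistics.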

3. Multimodal Explainability

As models integrate text, image, and audio inputs, explanations must also become multimodal. For example, a medical AI system may justify predictions using both imaging saliency maps and text rationales.
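A minimal sketch of pairing a saliency map with a text rationale follows, using occlusion: each pixel is blanked in turn and the drop in the model's score is recorded. The 4x4 "image" and the toy classifier are hypothetical stand-ins. Real imaging models would typically occlude larger patches or use gradient-based saliency.

```python
def occlusion_saliency(image, predict, patch=1, fill=0.0):
    """Score drop when each patch is replaced by `fill`; larger means more important."""
    base = predict(image)
    height, width = len(image), len(image[0])
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            occluded = [row[:] for row in image]
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = base - predict(occluded)
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency


def text_rationale(saliency):
    """Turn the most salient location into a one-sentence explanation."""
    score, r, c = max(
        (v, r, c) for r, row in enumerate(saliency) for c, v in enumerate(row)
    )
    return (f"The prediction depended most on the region at row {r}, "
            f"column {c} (score drop {score:.2f}).")


def toy_classifier(image):
    # Hypothetical model: responds to mean brightness of the central 2x2 area.
    return (image[1][1] + image[1][2] + image[2][1] + image[2][2]) / 4.0


image = [
    [0.1, 0.1, 0.1, 0.1],
    [0.1, 0.9, 0.8, 0.1],
    [0.1, 0.7, 1.0, 0.1],
    [0.1, 0.1, 0.1, 0.1],
]

saliency = occlusion_saliency(image, toy_classifier)
print(text_rationale(saliency))
```

The two outputs carry the same information in different modalities: the saliency grid can be overlaid on the image, while the sentence can go into a report.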

4. Explainability for Large Language Models (LLMs)

With the rise of GPT-style LLMs, new XAI research is focusing on attention visualization, prompt tracing, and attribution scores to explain responses.

5. Human-Centered XAI

Future XAI systems will adapt explanations to different stakeholders:

- Developers: Technical details like SHAP values.

- Regulators: Compliance-focused summaries.

- End Users: Natural language explanations for decisions.
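The stakeholder split above can be sketched as a single attribution result rendered three ways. The attribution values, audience labels, and wording templates are assumptions for illustration. The attributions themselves could come from any method, such as SHAP.

```python
def render_explanation(attributions, decision, audience):
    """Format one set of feature attributions for a given audience."""
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    if audience == "developer":
        # Full signed values, most influential first.
        return ", ".join(f"{name}={value:+.3f}" for name, value in ranked)
    if audience == "regulator":
        top = ", ".join(name for name, _ in ranked[:3])
        return (f"Decision: {decision}. Top factors: {top}. "
                f"Features considered: {len(ranked)}.")
    if audience == "end_user":
        name, value = ranked[0]
        direction = "raised" if value > 0 else "lowered"
        return f"Your {name.replace('_', ' ')} {direction} the score the most."
    raise ValueError(f"unknown audience: {audience!r}")


# Hypothetical attributions for one credit decision.
attributions = {"income": 0.42, "debt_ratio": -0.77, "tenure": 0.10}

for audience in ("developer", "regulator", "end_user"):
    print(f"{audience}: {render_explanation(attributions, 'declined', audience)}")
```

Keeping one underlying attribution and varying only the presentation ensures that developers, regulators, and end users are never given conflicting accounts of the same decision.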

6. Global Standardization

Over the next decade, ethical AI and explainability will likely converge into ISO-style global standards, ensuring consistency across industries and regions.

Regional Context for Ethical and Explainable AI

Ethical AI requirements vary by region because regulation and data governance differ. AI development services in the US, for example, adapt explainability and transparency practices to US compliance frameworks.

Conclusion

The path to trustworthy AI models requires combining Ethical AI principles with Explainable AI techniques. SHAP, LIME, and counterfactual explanations improve technical transparency, while compliance with the EU AI Act and NIST frameworks ensures governance.

By aligning accountability, fairness, and transparency, enterprises can deploy AI systems that are not only accurate but also compliant, reliable, and trusted by society. To achieve this, organizations can hire AI developers with expertise in explainability, ethics, and governance to build future-ready AI solutions.

FAQs

What Is Ethical AI And Why Does It Matter?

Ethical AI ensures fairness, accountability, transparency, and privacy in AI systems, preventing bias and helping enterprises meet compliance requirements like GDPR and the EU AI Act.

How Does Explainable AI (XAI) Work?

Explainable AI uses techniques such as SHAP, LIME, counterfactuals, and saliency maps to interpret model decisions, making AI transparent for developers, regulators, and end users.

What Is The Difference Between Ethical AI And Explainable AI?

Ethical AI focuses on principles such as fairness, accountability, and privacy, while Explainable AI provides technical tools and methods to understand and validate model predictions.

Why Should Enterprises Hire AI Developers With Expertise In Explainability?

Specialized AI developers can integrate explainability tools and ethical frameworks into production systems, ensuring AI is accurate, compliant, and trustworthy.

What Industries Benefit The Most From Ethical And Explainable AI?

Industries such as healthcare, finance, HR, and cybersecurity rely on ethical and explainable AI to ensure fairness, regulatory compliance, and transparent decision-making.