Introduction

Artificial Intelligence (AI) has made groundbreaking progress in fields like computer vision, natural language processing, and decision-making. However, one fundamental shortcoming continues to limit its capabilities: the lack of memory. Traditional AI models treat every interaction as isolated, without the ability to recall past events or learn across time. This absence of contextual continuity restricts applications in areas like human-like conversation, dynamic learning, and decision-making.

Enter Memory-Augmented AI Systems (MAIS), a revolutionary leap toward machines that don’t just compute but also remember, reason, and evolve. By incorporating memory into AI architectures, these systems bring us closer to lifelong learning machines that adapt and grow over time.

1. What Are Memory-Augmented AI Systems?

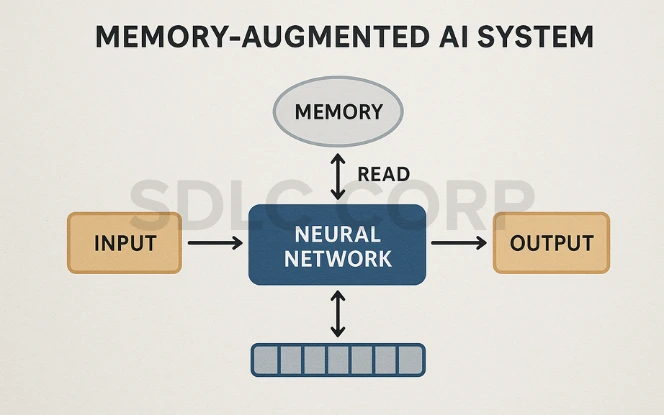

A Memory-Augmented AI System is an intelligent agent that integrates a neural network controller with an external or internal memory store. Unlike standard models, MAIS can store contextual information, retrieve it during computation, and update memories over time, much like how humans use short-term and long-term memory.

Many of these systems make memory access differentiable: reads and writes are soft, weighted operations over memory slots, so the model can learn where to read and what to write through gradient descent during training. This makes them flexible, trainable, and capable of solving complex, sequential, and multi-step tasks, all within the bounds of safe and responsible AI development, as discussed in our AI Alignment Strategies.

2. Core Components of a Memory-Augmented AI System

The architecture of a memory-augmented system typically consists of:

- Controller

A recurrent neural network (RNN) such as an LSTM, or a Transformer, acts as the central processor, deciding when and how to access memory.

- Memory Matrix

A 2D differentiable matrix that stores information such as tokens, embeddings, or state representations.

- Read/Write Heads

Modules that dynamically access memory slots based on input queries or instructions.

- Interface Vectors

Encoded instructions that guide memory interactions, much like how we decide to remember or forget events.

This structure enables non-linear, asynchronous access to information, vastly enhancing the model’s reasoning ability.
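To make the components above concrete, here is a minimal sketch of content-based addressing in the style of a Neural Turing Machine, written in plain Python. The function names (`address`, `read`, `write`), the `sharpness` parameter, and the toy 3x3 memory are illustrative assumptions, not part of any particular library. The key stands in for an interface vector emitted by the controller, and the weights play the role of a read/write head.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def softmax(scores):
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def address(memory, key, sharpness=5.0):
    """Soft attention weights over memory rows, based on similarity to the key."""
    return softmax([sharpness * cosine(row, key) for row in memory])

def read(memory, weights):
    """Read vector: the weighted sum of all memory rows."""
    width = len(memory[0])
    return [sum(w * row[i] for w, row in zip(weights, memory)) for i in range(width)]

def write(memory, weights, erase, add):
    """Erase then add, scaled per row by that row's attention weight."""
    return [
        [m * (1.0 - w * e) + w * a for m, e, a in zip(row, erase, add)]
        for w, row in zip(weights, memory)
    ]

# Toy memory matrix with three slots (hypothetical values).
memory = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
key = [0.9, 0.1, 0.0]  # stands in for a controller-emitted query

weights = address(memory, key)
read_vector = read(memory, weights)
updated = write(memory, weights, erase=[1.0, 1.0, 1.0], add=[0.5, 0.5, 0.0])
```

Because every step is a smooth function of the key and the memory contents, a framework with automatic differentiation could backpropagate through the same operations. This sketch only shows the forward pass.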

3. Evolution of Memory in AI

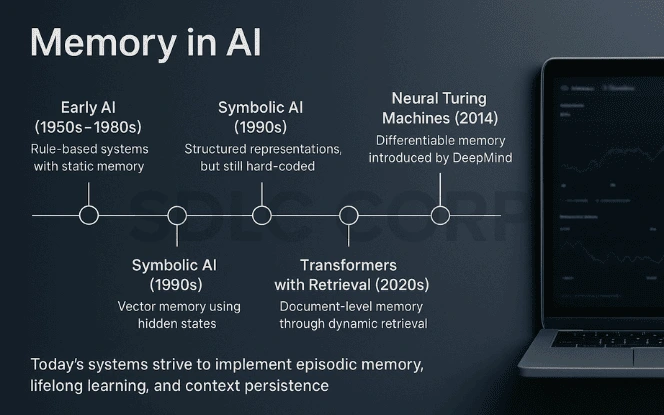

Early AI (1950s–1980s): Rule-Based Systems with Static Memory

Early AI relied on predefined rules stored in fixed memory structures. Knowledge was static, and any update required manual coding, making adaptation to new situations slow and labor-intensive.

Symbolic AI (1990s): Structured Representations

Symbolic AI used formal logic and structured data to represent knowledge. This made reasoning explainable but inflexible, as rules and symbols were hard-coded.

Neural Networks (2000s): Vector Memory in Hidden States

Recurrent neural networks stored information in their hidden states as distributed activation patterns. These states acted as short-term memory but struggled to retain information over long sequences.

Neural Turing Machines (2014): Differentiable Memory by DeepMind

Neural Turing Machines introduced an external memory that the model learns to read from and write to through gradient descent. This allowed networks to learn simple algorithmic sequence tasks, such as copying and sorting, and to handle information dynamically.

Transformers with Retrieval (2020s): Dynamic Document-Level Memory

Transformers combined with retrieval systems enabled access to large external databases in real time, allowing detailed responses beyond the model’s fixed training data.

Modern Memory-Augmented AI (Present): Episodic and Lifelong Memory

Current research aims for systems that remember past interactions, accumulate knowledge over time, and adapt to evolving tasks—mirroring human-like memory functions.

4. Popular Memory-Augmented Models

Neural Turing Machine (NTM)

Couples a neural network controller (feedforward or LSTM) with a differentiable external memory for algorithmic tasks.

Differentiable Neural Computer (DNC)

Extends NTM with temporal linkages and better memory addressing.

Memory Networks

Attention-based systems from Facebook AI Research, effective for QA tasks.

RETRO (Retrieval-Enhanced Transformer)

Efficiently accesses external document databases during inference without expanding model size.

5. Human Memory vs. AI Memory

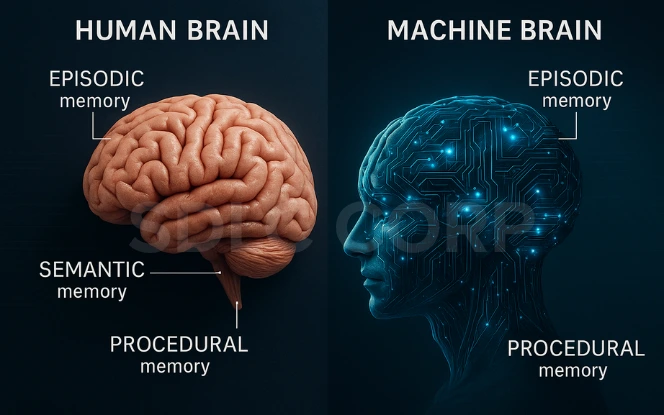

While both systems aim to support learning and retrieval, human and AI memories operate differently.

Human Memory

- Includes episodic, semantic, and procedural components.

- Highly associative and emotion-influenced.

- Can generalize from few examples.

AI Memory

- Task-specific, numeric, and strictly logical.

- Requires explicit encoding and retrieval schemes.

- Struggles with generalization unless explicitly trained.

Despite progress, AI memory remains more brittle and less flexible than its biological counterpart.

6. Industry Applications & Case Studies

OpenAI’s Memory in ChatGPT

Remembers user preferences, tone, and history to personalize interactions.

AlphaCode by DeepMind

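Solves competitive programming problems by sampling many candidate programs from a Transformer trained on prior code and filtering them against example tests. Its "memory" is what the model absorbed from earlier examples rather than a separate memory store.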

ReAct Agents

Combine memory with reasoning and action planning for multi-step tasks.

Customer Service Bots

Store past interactions, tone, and solutions for faster resolution and improved user satisfaction.

Medical Diagnostics

Recall patient history, medications, and trends to support treatment recommendations.

These memory-augmented AI use cases are becoming increasingly relevant across industries—from healthcare to retail to small enterprises. Learn how memory-enabled AI is transforming small businesses in our AI for Small Business guide.

7. Memory-Augmented Systems in Autonomous Agents

Memory plays a critical role in agents that interact with the world over time.

- Navigation systems remember maps and hazard locations.

- Conversational agents maintain thread continuity over days or weeks.

- Game-playing agents leverage historical states to build winning strategies.

- Industrial robots retain object positions and task routines.

Memory enables autonomy by anchoring decisions in experience.

8. Key Benefits of Memory-Augmented Systems

- Contextual Awareness: Keeps track of previous interactions, improving coherence.

- Lifelong Learning: Adapts over time without retraining from scratch.

- Personalization: Tailors content, responses, or services to individual users.

- Multi-Step Reasoning: Enables chain-of-thought processing.

- Efficiency: Recalls past solutions rather than recalculating them.

9. Architectural Variants and Scaling Strategies

- Key-Value Memory Networks – Quick retrieval via embedding comparison.

- Neural Caches – Temporary memory from recent activations.

- Vector Databases – Scalable long-term memory for retrieval-augmented systems.

- Differentiable Memory – Fully trainable but costly and complex.

- Hybrid Models – Combine persistent and transient memory layers.

These allow adaptation to small apps or large enterprise systems.
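As a rough illustration of the key-value and vector-database variants listed above, the sketch below stores (embedding, value) pairs and retrieves the closest matches by cosine similarity. The class name `KeyValueMemory`, the hand-written 3-dimensional embeddings, and the stored strings are all hypothetical. A production system would typically use learned embeddings and an approximate nearest-neighbor index rather than the linear scan shown here.

```python
import math

class KeyValueMemory:
    """Toy long-term memory: stores (key vector, value) pairs, retrieves by similarity."""

    def __init__(self):
        self._keys = []
        self._values = []

    def add(self, key, value):
        """Store a value under its embedding key."""
        self._keys.append(list(key))
        self._values.append(value)

    @staticmethod
    def _cosine(a, b):
        dot = sum(x * y for x, y in zip(a, b))
        norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
        return dot / norm if norm else 0.0

    def search(self, query, top_k=2):
        """Return the top_k (score, value) pairs, most similar first."""
        scored = [(self._cosine(k, query), v) for k, v in zip(self._keys, self._values)]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:top_k]

# Hypothetical embeddings; in practice these would come from an embedding model.
store = KeyValueMemory()
store.add([1.0, 0.0, 0.0], "refund policy")
store.add([0.0, 1.0, 0.0], "shipping times")
store.add([0.9, 0.1, 0.0], "return window")

results = store.search([1.0, 0.0, 0.1], top_k=2)
retrieved = [value for _, value in results]
```

The retrieved values would then be passed to the model as additional context, which is the basic pattern behind retrieval-augmented systems.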

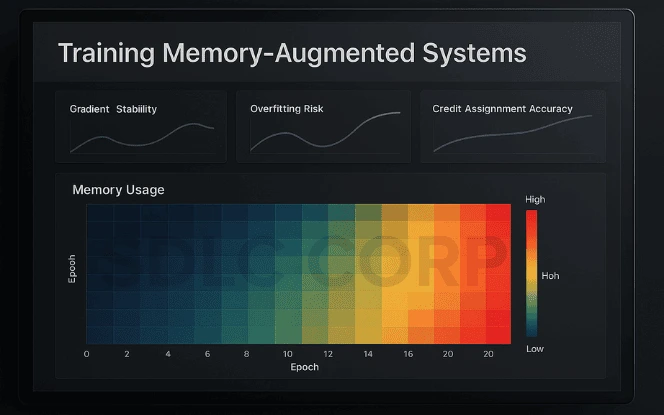

10. Training Memory-Augmented Systems

Memory systems are harder to train due to:

- Credit assignment problems (what to recall when).

- Overfitting risks when the model memorizes training examples in memory instead of learning general access patterns.

- Gradient instability in long sequences.

Solutions include:

- Curriculum learning

- Memory dropout layers

- Auxiliary scoring networks

- Meta-learning techniques
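The term "memory dropout" is not defined precisely above, so the sketch below shows one plausible reading: during training, whole memory slots are randomly masked and the remaining read weights are renormalized, which discourages the model from depending on any single slot. The function name `memory_dropout` and its parameters are assumptions made for illustration.

```python
import random

def memory_dropout(read_weights, drop_prob, rng, training=True):
    """Randomly mask memory slots during training, then renormalize the read weights."""
    if not training or drop_prob <= 0.0:
        # At inference time the weights pass through unchanged.
        return list(read_weights)
    kept = [w if rng.random() >= drop_prob else 0.0 for w in read_weights]
    total = sum(kept)
    if total == 0.0:
        # Every slot was dropped: fall back to the original weights.
        return list(read_weights)
    return [w / total for w in kept]

rng = random.Random(0)  # seeded so the example is repeatable
weights = [0.7, 0.2, 0.1]

train_weights = memory_dropout(weights, drop_prob=0.5, rng=rng, training=True)
eval_weights = memory_dropout(weights, drop_prob=0.5, rng=rng, training=False)
```

The masked weights still sum to one, so a read built from them stays on the same scale as an unmasked read.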

11. Ethical and Societal Implications

- Data Ownership – Who owns the AI’s memory?

- Right to be Forgotten – How can users erase their data?

- Memory Bias – Retained biases lead to repeated model errors.

- Surveillance Risks – Persistent tracking of user behavior.

- Regulatory Compliance – Legal frameworks like GDPR and HIPAA must be respected.

Ethical memory architecture will be essential to trustworthy AI.
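One way to design for the right to be forgotten is to scope every stored memory to a user and make deletion and expiry first-class operations. The sketch below assumes a simple in-process store. The names `MemoryRecord`, `UserScopedMemory`, `forget_user`, and `expire` are hypothetical, and a real deployment would also have to cover backups, logs, and any embeddings derived from the deleted data.

```python
import time
from dataclasses import dataclass, field

@dataclass
class MemoryRecord:
    user_id: str
    content: str
    created_at: float = field(default_factory=time.time)

class UserScopedMemory:
    """Toy memory store where every record belongs to exactly one user."""

    def __init__(self):
        self._records = []

    def remember(self, user_id, content, created_at=None):
        """Store a memory; created_at defaults to the current time."""
        if created_at is None:
            record = MemoryRecord(user_id, content)
        else:
            record = MemoryRecord(user_id, content, created_at)
        self._records.append(record)

    def recall(self, user_id):
        """Return only the memories that belong to this user."""
        return [r.content for r in self._records if r.user_id == user_id]

    def forget_user(self, user_id):
        """Erase all memories for a user and report how many were removed."""
        before = len(self._records)
        self._records = [r for r in self._records if r.user_id != user_id]
        return before - len(self._records)

    def expire(self, max_age_seconds, now=None):
        """Drop memories older than the retention window."""
        now = time.time() if now is None else now
        before = len(self._records)
        self._records = [r for r in self._records if now - r.created_at <= max_age_seconds]
        return before - len(self._records)

memory = UserScopedMemory()
memory.remember("alice", "prefers email contact")
memory.remember("bob", "asked about invoices")
removed = memory.forget_user("alice")
```

Keeping the user identifier on every record is what makes both erasure requests and per-user audits straightforward.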

12. Enterprise Use and Governance Considerations

In enterprise environments, memory-augmented AI systems must align with data governance, security, and compliance requirements. Long-term memory introduces additional considerations around access control and auditability. Enterprises may work with an enterprise AI development company to design systems that support scale while maintaining operational oversight.

13. Advisory and Strategic Oversight

Strategic planning is often required to determine how memory should be used, updated, or retired within AI systems. An AI consulting company may assist organisations in defining governance frameworks, evaluating risk, and aligning memory-augmented systems with business and regulatory expectations.

Conclusion

Memory-Augmented AI Systems mark a pivotal transition in the field of artificial intelligence. Moving beyond stateless computation, these systems embody the essence of learning not just from data, but from experience.

Whether it’s a chatbot remembering your favorite travel destinations or a medical system tracking your health progress, memory gives AI the dimension of time, continuity, and evolution.

As memory becomes central to AI architectures, organizations that invest in capable AI development services will be best positioned to lead the next wave of intelligent, human-like applications.

FAQs

What’s the difference between GPT and memory-augmented systems?

A standard GPT-style model sees only what fits in its fixed-length context window. Memory-augmented systems retain context across sessions or tasks, enabling long-term learning.

Can memory be updated dynamically?

Yes. Most memory systems allow updates during inference without retraining the core model.

Are memory systems always persistent?

No. Some use short-term (session-based) memory, while others store data for long-term personalization.
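The distinction can be sketched in a few lines. Below, a session memory keeps only the most recent turns, while a persistent memory holds facts that can be serialized and reloaded in a later session. The class names, the three-turn limit, and the JSON format are illustrative assumptions.

```python
import json
from collections import deque

class SessionMemory:
    """Short-term memory: keeps only the most recent turns of one session."""

    def __init__(self, max_turns=3):
        self.turns = deque(maxlen=max_turns)

    def add(self, turn):
        # Once the deque is full, the oldest turn is discarded automatically.
        self.turns.append(turn)

    def context(self):
        return list(self.turns)

class PersistentMemory:
    """Long-term memory: facts that survive across sessions via serialization."""

    def __init__(self, facts=None):
        self.facts = dict(facts or {})

    def set(self, key, value):
        self.facts[key] = value

    def dump(self):
        """Serialize the facts to a JSON string for storage."""
        return json.dumps(self.facts, sort_keys=True)

    @classmethod
    def load(cls, payload):
        """Rebuild the memory from a previously dumped JSON string."""
        return cls(json.loads(payload))

session = SessionMemory(max_turns=3)
for turn in ["hi", "book a flight", "to Lisbon", "in May"]:
    session.add(turn)
recent = session.context()

profile = PersistentMemory()
profile.set("favorite_destination", "Lisbon")
saved = profile.dump()                    # would be written to disk or a database
restored = PersistentMemory.load(saved)   # a later session picks it up again
```

The session memory forgets the first turn once the limit is reached, whereas the persistent profile can be restored exactly as it was saved.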

Are there open-source tools for building these systems?

Absolutely. Libraries such as Sonnet, Haystack, LangChain, and Hugging Face Transformers provide building blocks for memory or retrieval components.

What industries benefit from memory-augmented AI?

Healthcare, education, finance, software, customer service, and logistics are among the top beneficiaries.