Introduction

Personal AI agents are no longer abstract concepts. They already manage email, suggest schedules, summarize research, and update records. In many ways, they act like digital helpers that learn from context and improve with use. Because they save time and reduce errors, people across industries now rely on them.

For context, see our overview of how AI is transforming the world and our comparison of how AI and algorithms differ. These pieces set the stage and help readers avoid confusion.

People often ask what AI agents are when they hear about systems that work on their behalf. In simple terms, the idea covers programs that sense, reason, and act for users. This article explains intelligent agents in clear language. You will see how personal AI agents work, where they help, and why LLM-based personal agents now lead. Finally, you will learn how personal AI assistant technology fits into real tools and safe policies. Throughout, we use examples and step-by-step descriptions so the concepts stay practical.

The Core Idea Without Jargon

A digital agent is software that acts for a person. It watches for signals, thinks through options, and completes tasks. Because it can choose actions, it feels more helpful than fixed menus or rigid forms. It is still software, yet it operates with a plan and a goal.

When people ask what AI agents are, they often expect a short definition. However, the scope is broad. Some agents only schedule meetings. Others work across email, calendars, documents, and business tools. In many cases, they check policies and ask for approval before they act. As a result, you keep control while the agent saves time.

The phrase personal AI agents refers to tools built for individuals. These tools adapt to a person’s tasks and preferences. They remember useful details. They suggest next steps. They also respect limits that you set. In practice, they remove busywork while keeping you in charge.

How Decisions Happen In Practice

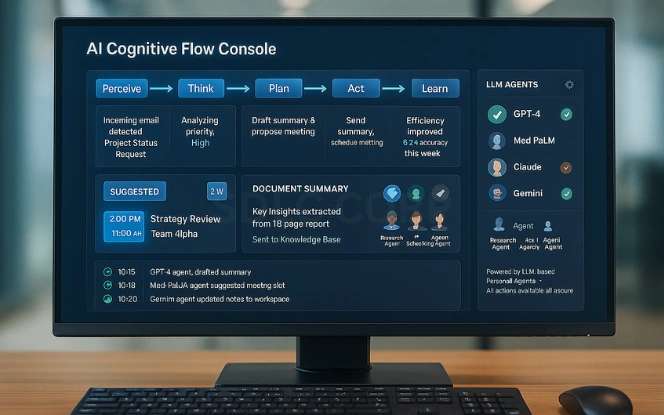

It helps to picture an intelligent agent as a simple loop. First, the agent perceives input. Then it reasons about goals and context. Next, it plans steps and chooses tools. After that, it acts. Finally, it checks results and learns from feedback. Because the loop repeats, the agent improves with use.

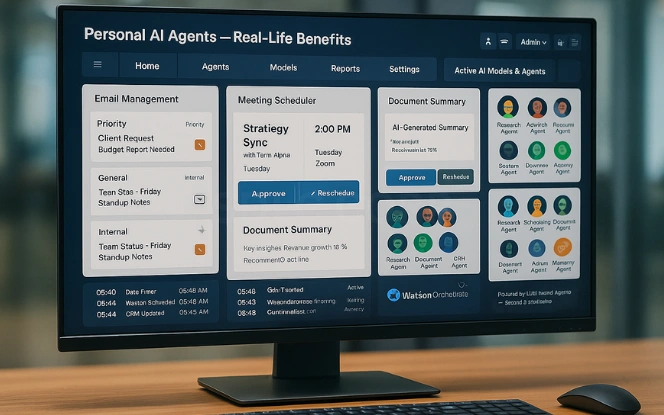

Consider how personal AI agents work during scheduling. You say, “Set a one-hour meeting with James next week.” The agent parses the request. Then it checks both calendars, time zones, and constraints. Next, it proposes two slots and composes an invite. After confirmation, it sends the invite and adds a video link. If conflicts appear, it revises the plan and suggests new times. In short, the agent handles the small steps you used to manage by hand.
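The slot-finding step in that scheduling example can be sketched in a few lines. This is a minimal illustration, not a real calendar integration: the function name `propose_slots`, the sample date, and the busy intervals are all hypothetical, and it assumes both calendars have already been merged into one list and converted to a single time zone.

```python
from datetime import datetime, timedelta

def propose_slots(busy, day_start, day_end, duration, max_slots=2):
    """Return up to max_slots (start, end) windows that avoid every busy interval."""
    slots = []
    cursor = day_start
    for start, end in sorted(busy):
        # Emit back-to-back windows that fit in the gap before this busy block.
        while cursor + duration <= start and len(slots) < max_slots:
            slots.append((cursor, cursor + duration))
            cursor += duration
        # Jump past the busy block (max() handles overlapping intervals).
        cursor = max(cursor, end)
    # Use whatever free time remains after the last busy block.
    while cursor + duration <= day_end and len(slots) < max_slots:
        slots.append((cursor, cursor + duration))
        cursor += duration
    return slots

# Hypothetical data: your calendar and James's calendar, already merged.
day = datetime(2025, 1, 6)
busy = [
    (day.replace(hour=9), day.replace(hour=10)),
    (day.replace(hour=10), day.replace(hour=11, minute=30)),
    (day.replace(hour=13), day.replace(hour=14)),
]
slots = propose_slots(busy, day.replace(hour=9), day.replace(hour=17), timedelta(hours=1))
for start, end in slots:
    print(start.strftime("%H:%M"), "to", end.strftime("%H:%M"))
# prints "11:30 to 12:30" then "14:00 to 15:00"
```

A production agent would still need to confirm the chosen slot with you before sending the invite, as described above.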

This same loop applies to support tickets, research tasks, and finance checks. Inputs arrive. The agent extracts facts. It plans a path. It calls tools. It verifies outputs. Then it stores useful context for next time. Because the loop is consistent, the behavior stays predictable and transparent.

Where These Tools Provide Real Value

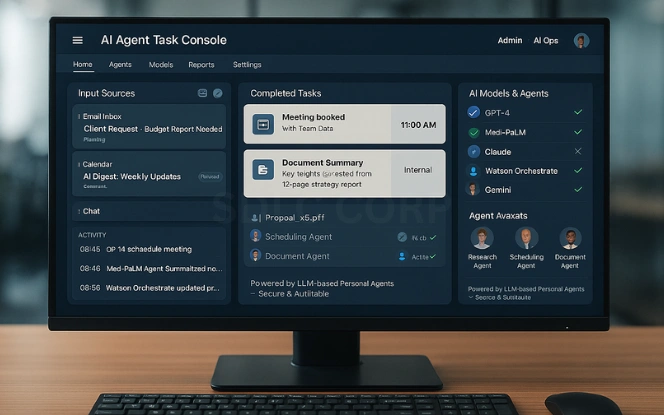

The label personal AI assistant technology covers the setup that connects users, models, and apps. It includes the chat surface, the planner, the memory store, and the connectors. When these parts work together, many tasks get easier.

- Mail and messages: The agent sorts threads, drafts replies, and tags items. In addition, it suggests subjects that match tone and purpose.

- Scheduling and logistics: It finds time slots, books rooms, adds links, and sends reminders. Therefore, you avoid back-and-forth delays.

- Research and reading: It skims long documents and extracts key points. It also links quotes to sources so checks are easy.

- Records and forms: It updates rows, attaches files, and adds notes. As a result, your systems of record stay current.

- Follow-ups and tasks: It captures action items and assigns owners. Meanwhile, it nudges people when due dates approach.

Because these gains show up each day, the impact compounds. A few minutes saved per task turns into hours each week. In addition, error rates tend to drop as the agent applies the same checks every time.

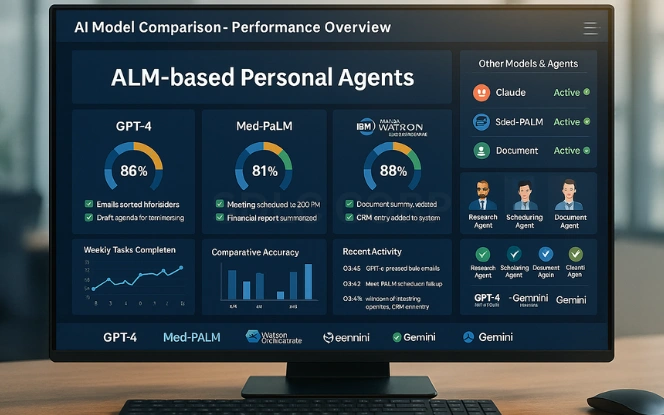

What Powers Today’s Best Systems

The most capable tools today are LLM-based personal agents. A large language model reads and writes text with skill. It follows instructions, asks clarifying questions, and keeps track of context. It can also use tools. For example, it can call an API, query a database, or draft a spreadsheet formula.

Still, a model alone is not an agent. The agent wraps the model with memory, policies, and connectors. It also adds checks and logs. In practice, this wrapper turns a strong model into a dependable helper. Because steps are recorded, you can audit actions or roll back changes if needed. As a result, the system feels trustworthy, not opaque.

The Architecture In Plain English

A reliable agent follows a consistent flow:

Perceive → Think → Plan → Act → Check → Learn.

- Perceive: It reads inputs such as prompts, emails, files, and events.

- Think: It extracts goals, dates, names, and constraints. In addition, it resolves references.

- Plan: It breaks the task into steps and selects tools.

- Act: It executes steps through apps and APIs.

- Check: It verifies outputs, handles errors, and retries when safe.

- Learn: It stores helpful facts for future runs.
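The six stages above can be sketched as one small class. This is a toy under stated assumptions: the class name `LoopAgent`, the `goal: detail` request format, and the `remind` tool are invented for illustration, and a real agent would use a language model in the think and plan stages rather than string splitting.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class LoopAgent:
    tools: dict[str, Callable[[str], str]]          # tool name -> hypothetical connector
    memory: dict[str, str] = field(default_factory=dict)

    def perceive(self, raw: str) -> str:
        return raw.strip()

    def think(self, text: str) -> tuple[str, str]:
        # Toy parsing: "goal: detail". A real agent would extract dates, names, etc.
        goal, _, detail = text.partition(":")
        return goal.strip().lower(), detail.strip()

    def plan(self, goal: str, detail: str) -> list[tuple[str, str]]:
        # One step per request; an unknown goal yields an empty plan.
        return [(goal, detail)] if goal in self.tools else []

    def act(self, steps: list[tuple[str, str]]) -> list[str]:
        return [self.tools[name](arg) for name, arg in steps]

    def check(self, steps: list[tuple[str, str]], results: list[str]) -> bool:
        # Succeeds only if there was a plan and every tool returned something.
        return bool(steps) and all(results)

    def learn(self, goal: str, ok: bool) -> None:
        if ok:
            self.memory["last_successful_goal"] = goal

    def run(self, raw: str) -> dict:
        goal, detail = self.think(self.perceive(raw))
        steps = self.plan(goal, detail)
        results = self.act(steps)
        ok = self.check(steps, results)
        self.learn(goal, ok)
        return {"ok": ok, "results": results}

agent = LoopAgent(tools={"remind": lambda detail: f"reminder set: {detail}"})
print(agent.run("  Remind: call James "))
# prints {'ok': True, 'results': ['reminder set: call James']}
```

Keeping each stage as its own method makes it easier to log, test, or replace one stage without touching the others.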

Memory That Helps, Not Hurts

Agents need memory, but they should keep it scoped. Short-term memory holds the current thread. Long-term memory stores stable facts, like your role, a project code, or a meeting room. Because excess data increases risk, the store must be small, labeled, and easy to clean. In addition, the system should let users erase entries at any time.
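One way to keep a long-term store small, labeled, and easy to clean is sketched below. The class name `ScopedMemory`, the entry cap, and the label names are assumptions made for this example, not features of any particular product.

```python
import time
from dataclasses import dataclass

@dataclass
class MemoryEntry:
    value: str
    label: str        # e.g. "profile" or "project"; used for bulk cleanup
    stored_at: float  # timestamp, useful for retention reviews

class ScopedMemory:
    def __init__(self, max_entries: int = 50):
        self.max_entries = max_entries
        self._entries: dict[str, MemoryEntry] = {}

    def remember(self, key: str, value: str, label: str) -> None:
        # Refuse to grow past the cap instead of silently accumulating data.
        if key not in self._entries and len(self._entries) >= self.max_entries:
            raise ValueError("memory full; erase an entry first")
        self._entries[key] = MemoryEntry(value, label, time.time())

    def recall(self, key: str):
        entry = self._entries.get(key)
        return entry.value if entry else None

    def erase(self, key: str) -> bool:
        # User-facing delete: returns True if something was removed.
        return self._entries.pop(key, None) is not None

    def erase_label(self, label: str) -> int:
        doomed = [k for k, e in self._entries.items() if e.label == label]
        for k in doomed:
            del self._entries[k]
        return len(doomed)

memory = ScopedMemory(max_entries=2)
memory.remember("role", "Account manager", label="profile")
memory.remember("project_code", "PX-17", label="project")
memory.erase("project_code")   # the user can remove any entry at any time
```

The hard cap is a deliberate design choice in this sketch: a store that raises an error when full forces a cleanup decision rather than letting risk grow unnoticed.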

Tools And Permissions

Tools connect the agent to your world. Common tools include calendar clients, email, file storage, CRM, help desk, HR systems, and spreadsheets. However, access should be narrow. The principle of least privilege limits each token to specific actions. Therefore, a scheduling task should not have payroll access. Because permissions drift, regular reviews are important.
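The least-privilege idea can be shown with a small scope check. The scope strings (`calendar:read`, `payroll:read`), the `Token` class, and the two tool functions are hypothetical names chosen for this sketch; real systems usually delegate this to their identity provider.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Token:
    task: str
    scopes: frozenset  # the only actions this token may perform

def require_scope(token: Token, scope: str) -> None:
    if scope not in token.scopes:
        raise PermissionError(f"task '{token.task}' lacks scope '{scope}'")

def read_calendar(token: Token) -> str:
    require_scope(token, "calendar:read")
    return "free at 14:00"          # placeholder result for the example

def read_payroll(token: Token) -> str:
    require_scope(token, "payroll:read")
    return "payroll summary"        # placeholder result for the example

scheduling = Token(task="scheduling", scopes=frozenset({"calendar:read", "calendar:write"}))
print(read_calendar(scheduling))    # allowed
try:
    read_payroll(scheduling)        # blocked: a scheduling task has no payroll scope
except PermissionError as err:
    print(err)
```

Because every tool checks the token itself, a planner that picks the wrong tool fails loudly instead of reaching data it should never see.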

Orchestration With Guardrails

The planner selects the next step. In cautious mode, it asks for review before writes. In faster mode, it acts and posts a log with undo links. As a result, teams can choose the pace that matches risk. In addition, logs make audits simple. People see what happened, when it happened, and why.
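The two modes can be sketched with a single switch and an undo-capable log. Everything here is illustrative: `Step`, `Orchestrator`, and the `approve` callback stand in for whatever review hook and log storage a real system provides.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class Step:
    description: str
    do: Callable[[], None]
    undo: Callable[[], None]

@dataclass
class Orchestrator:
    cautious: bool
    approve: Callable[[Step], bool]          # hypothetical human-review hook
    log: list = field(default_factory=list)  # (status, step) pairs for audits

    def run(self, step: Step) -> bool:
        # Cautious mode: nothing is written without approval.
        if self.cautious and not self.approve(step):
            self.log.append(("skipped", step))
            return False
        step.do()
        self.log.append(("done", step))
        return True

    def undo_last(self) -> bool:
        # Walk the log backwards and reverse the most recent completed step.
        for i in range(len(self.log) - 1, -1, -1):
            status, step = self.log[i]
            if status == "done":
                step.undo()
                self.log[i] = ("undone", step)
                return True
        return False

records = []
add_row = Step("add CRM row", lambda: records.append("row"), lambda: records.remove("row"))

fast = Orchestrator(cautious=False, approve=lambda step: False)
fast.run(add_row)      # acts immediately and logs the step
fast.undo_last()       # the "undo link" from the log
```

Pairing each action with its own undo function at planning time is what makes rollback cheap later.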

Data, Privacy, And Controls

Trust grows when control is clear. Good programs publish a short policy with plain language. They show what the agent can see and do. They also explain how to stop an action and how to report an issue.

- Consent and scope: Ask before accessing data. Show scopes in simple terms.

- Data minimization: Keep only data that supports the task. Remove the rest.

- Least privilege: Grant the smallest set of rights that still allows the job.

- Audit trails: Log each action with a link to evidence.

- Masking: Hide sensitive fields when possible.

- Human review: Require approval for risky steps, such as payments or bulk changes.

- Rate limits: Prevent loops that spam systems or people.

- Emergency stop: Provide a visible control that halts actions at once.

When agents are explained alongside these controls, the setup makes sense to non-engineers. Because safety is a design choice, the habits above should come first. In addition, teams should test the limits before going live.
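Two of the controls listed above, rate limits and the emergency stop, fit naturally into one gate that every action must pass. This is a minimal sketch: the class name `ActionGate` and its parameters are assumptions, and the sliding-window limit shown is only one of several common rate-limiting approaches.

```python
import time

class ActionGate:
    """Allow at most max_actions per window, and nothing at all once stopped."""

    def __init__(self, max_actions: int, per_seconds: float, clock=time.monotonic):
        self.max_actions = max_actions
        self.per_seconds = per_seconds
        self.clock = clock          # injectable so the gate can be tested
        self.stopped = False
        self._recent = []           # timestamps of recently allowed actions

    def emergency_stop(self) -> None:
        self.stopped = True

    def allow(self) -> bool:
        if self.stopped:
            return False
        now = self.clock()
        # Keep only timestamps that still fall inside the window.
        self._recent = [t for t in self._recent if now - t < self.per_seconds]
        if len(self._recent) >= self.max_actions:
            return False
        self._recent.append(now)
        return True

gate = ActionGate(max_actions=2, per_seconds=60)
for message in ["reminder 1", "reminder 2", "reminder 3"]:
    if gate.allow():
        print("sending", message)
    else:
        print("blocked", message)
# the third message is blocked because two were sent within the last minute
```

Testing the limits before going live can be as simple as driving this gate with a fake clock and confirming that it blocks when expected.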

Quality And Safety You Can Measure

Strong programs measure results. Numbers reveal progress and problems early. Because agents run many times a day, even small improvements matter.

- Task success rate: Track the percentage of tasks completed correctly on the first try.

- Correction rate: Track how often people edit drafts or roll back steps.

- Latency: Measure time from request to result.

- Tool errors: Count failures by app or API so fixes target the true cause.

- Escalations: Watch how often tasks hand off to humans and why.
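The metrics above can be computed from a simple run log. The record fields used here (`first_try_ok`, `corrected`, `escalated`, `latency_s`, `failed_tool`) are hypothetical names for this sketch; use whatever your logging already captures.

```python
from statistics import median

def summarize(runs):
    """Compute the headline metrics from a list of run records (dicts)."""
    total = len(runs)
    if total == 0:
        return {}
    tool_errors = {}
    for run in runs:
        tool = run.get("failed_tool")
        if tool:
            tool_errors[tool] = tool_errors.get(tool, 0) + 1
    return {
        "task_success_rate": sum(r["first_try_ok"] for r in runs) / total,
        "correction_rate": sum(r["corrected"] for r in runs) / total,
        "escalation_rate": sum(r["escalated"] for r in runs) / total,
        "median_latency_s": median(r["latency_s"] for r in runs),
        "tool_errors": tool_errors,
    }

# Made-up sample data, for illustration only.
runs = [
    {"first_try_ok": True, "corrected": False, "escalated": False, "latency_s": 2.0, "failed_tool": None},
    {"first_try_ok": True, "corrected": True, "escalated": False, "latency_s": 4.0, "failed_tool": None},
    {"first_try_ok": False, "corrected": False, "escalated": True, "latency_s": 10.0, "failed_tool": "calendar"},
    {"first_try_ok": True, "corrected": False, "escalated": False, "latency_s": 3.0, "failed_tool": None},
]
print(summarize(runs))
# task_success_rate 0.75, correction_rate 0.25, escalation_rate 0.25,
# median_latency_s 3.5, tool_errors {'calendar': 1}
```

The median is used for latency in this sketch because a single slow run would distort an average.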

In addition, teams should maintain a “golden set” of test tasks with known correct outputs. They should run it daily and compare results against the previous run. That way, regressions surface early, and rollbacks are quick. Over time, these tests protect quality while features grow.
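A golden-set runner can be very small. In this sketch the agent is any callable that maps an input string to an output string, and the case format (`name`, `input`, `expected`) is an assumption; exact-match comparison is used for simplicity, although free-text outputs usually need a looser check.

```python
def run_golden_set(agent, golden_set):
    """Return the names of golden tasks whose output does not match."""
    failures = []
    for case in golden_set:
        try:
            actual = agent(case["input"])
        except Exception as exc:
            # A crash counts as a failure, not as a reason to stop the run.
            actual = f"error: {exc}"
        if actual != case["expected"]:
            failures.append(case["name"])
    return failures

def new_regressions(previous_failures, current_failures):
    """Tasks that passed in the previous run but fail now."""
    return sorted(set(current_failures) - set(previous_failures))

# Toy stand-in for an agent, plus two made-up golden tasks.
golden_set = [
    {"name": "greeting", "input": "hi", "expected": "HI"},
    {"name": "ack", "input": "ok", "expected": "OK"},
]
yesterday = run_golden_set(str.upper, golden_set)   # baseline run
today = run_golden_set(str.lower, golden_set)       # a changed "agent"
print(new_regressions(yesterday, today))
# prints ['ack', 'greeting']
```

Comparing against the previous run, rather than only against zero failures, separates new breakage from known open issues.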

A Simple Playbook For Teams

Here is a three-part path that works across many companies:

Practical ways teams build personal AI agents

Teams usually start with a narrow workflow, then expand once reliability improves. Many projects use standard frameworks plus AI development services to integrate model selection, tool use, and testing. As a result, early design choices often decide long-term stability.

Enterprise rollout and governance

When agents touch customer data or internal systems, governance becomes the main job. Teams often work with an enterprise AI development company to define permissions, approval flows, and logging. Moreover, strong guardrails reduce the risk of silent failures.

Strategy, use-case selection, and ROI checks

Some teams struggle less with building and more with choosing the right workflows. That’s where an AI consulting company helps by mapping tasks, estimating ROI, and setting success metrics. Then you can scale the agent only after it proves value.

Because the steps are modest, teams show value fast while keeping risk low. In addition, people learn how to work with the system and how to steer it.

Case Examples With Real Models

Healthcare intake using Google Med-PaLM

A clinic builds an intake helper around Med-PaLM. The agent reads forms, checks missing details, and drafts patient notes. It flags data gaps and suggests questions for staff. As a result, intake time drops by thirty minutes per patient. Errors fall as well. In addition, an audit log links each change to a source line.

Finance reconciliation using GPT-4

A finance team powers a ledger agent with GPT-4. The agent groups failed transactions, explains likely causes, and drafts tickets with steps to fix. It posts a status chart each morning. Therefore, leaders see progress without asking for reports. After two weeks, backlog volume drops by half, and average fix time improves.

IT self-service using IBM Watson Orchestrate

An IT group uses IBM Watson Orchestrate to process laptop requests. The agent checks stock, role, and budget. Then it fills the purchase form and routes for approval. Meanwhile, it posts updates to the requester. Turnaround time falls from five days to two. Because the process is logged, audits take minutes, not hours.

These examples show how personal AI agents work when linked to strong models and safe policies. They act with speed. They record steps. They keep humans in charge while removing drudgery.

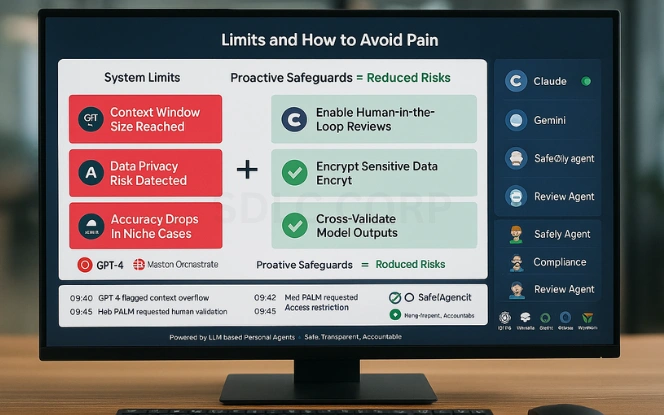

Limits And How To Avoid Pain

Every tool has limits. Agents act fast, which is both a strength and a risk. You can avoid most issues with a few habits.

- Hallucination risk: Models can be wrong with high fluency. Therefore, verify facts against a source before the agent commits any write.

- Tool misuse: Bad prompts can call the wrong API. Use allow-lists and typed arguments.

- Scope creep: Features grow too quickly and trust drops. Add capabilities in small steps.

- Privacy drift: Logs can leak information. Mask fields and rotate stores on a schedule.

- Silent changes: Unannounced updates can surprise users. Keep a changelog and announce releases.

Because these risks are known, plans can address them up front. In addition, a culture of review and rollback makes change safer.
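The "allow-lists and typed arguments" habit from the tool-misuse item can be sketched as a validation step that runs before any tool call. The tool name `send_reminder`, its fields, and the limits are invented for this example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class SendReminder:
    recipient: str
    minutes_before: int

    def __post_init__(self):
        # Reject arguments of the wrong type or outside sensible bounds.
        if not isinstance(self.recipient, str) or "@" not in self.recipient:
            raise ValueError("recipient must be an email address")
        if not isinstance(self.minutes_before, int) or not 0 < self.minutes_before <= 1440:
            raise ValueError("minutes_before must be an int from 1 to 1440")

# Only tools listed here can ever be called, whatever the prompt says.
ALLOWED_TOOLS = {"send_reminder": SendReminder}

def validate_call(tool_name, raw_args):
    schema = ALLOWED_TOOLS.get(tool_name)
    if schema is None:
        raise PermissionError(f"tool '{tool_name}' is not on the allow-list")
    # Unknown or missing argument names raise TypeError here.
    return schema(**raw_args)

call = validate_call("send_reminder", {"recipient": "james@example.com", "minutes_before": 15})
print(call)
# prints SendReminder(recipient='james@example.com', minutes_before=15)
```

A model-proposed call such as `delete_all` fails at the allow-list, and a call with `minutes_before="15"` fails at the type check, before either reaches a real system.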

What To Expect Over The Next Year

The trend line is clear. Models will get faster and smaller. Tools will integrate more deeply with calendars, documents, and line-of-business apps. Meanwhile, planners will improve at multi-step tasks and interruptions.

Expect improvements in four areas:

- Long-term memory: Better recall with clear retention limits and simple purge tools.

- Multi-agent handoffs: One agent delegates to another with shared context.

- Privacy by default: More work will happen on encrypted or local data.

- Built-in tests: Workflows will include tests that run before deployment, like unit tests for code.

As these parts mature, personal AI assistant technology will feel as normal as search or email. People will ask the system to handle steps and will expect a clear log after each task.

Conclusion

You have now seen intelligent agents explained in simple language. You have also seen how personal AI agents work across email, calendars, and records. We discussed safeguards that keep people in control. We covered real models that make results stable. In addition, we showed where personal AI assistant technology fits in the stack and how to roll out a safe pilot. Because clarity matters, we kept claims specific and tied to steps you can follow.

If you plan to build skills, share our guide on how to become an AI developer with your team. It adds background and supports clear decisions.

If your organization is ready to test a small use case, we can help map scope, set rules, and ship a pilot. Hire AI Development Services with SDLC Corp to begin with a plan that saves time and protects trust.

FAQs

How Do These Agents Make Choices?

They interpret your request, check data, and plan steps. Then they use tools, act, and verify results. In this process, personal AI agents learn from feedback and improve over time.

Where Do They Help The Most?

They help with routine steps that slow people down. For example, they sort mail, schedule meetings, update records, and summarize documents. As needs grow, personal AI assistant technology supports larger workflows.

What Sets Today’s Systems Apart?

Modern tools use strong models and safer wrappers. Because of that design, LLM-based personal agents can follow policies, call tools, and explain actions in plain terms.

Can You Explain The Concept Simply?

Yes. You can think of the idea this way: perceive, think, plan, act, check, and learn. When an agent's behavior is described in these steps, it becomes easy to predict.

Are These Tools Only For Technical Teams?

No. Any group that handles repeat tasks can benefit. If you still wonder what AI agents are, imagine a careful helper that respects your rules and reduces your manual work.