Introduction

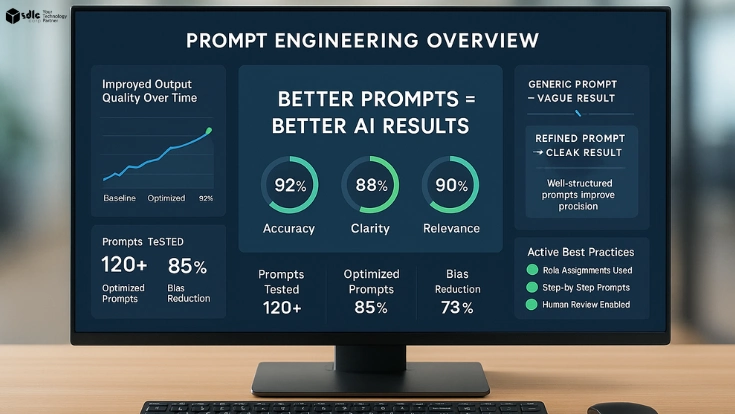

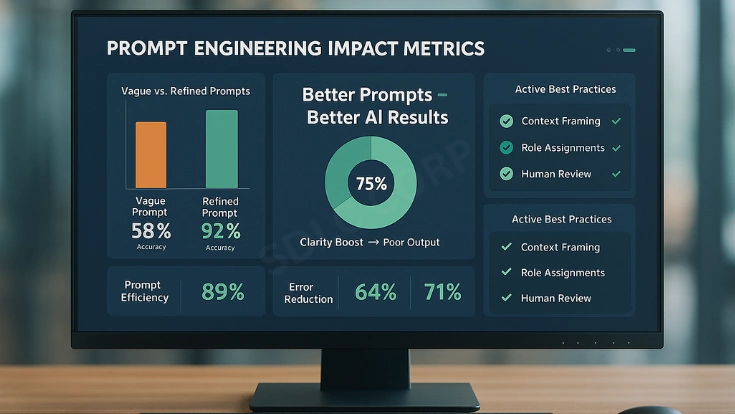

Prompt engineering best practices have become essential for anyone working with AI in 2025. From generating business insights to writing technical documents, the way you design prompts determines the quality of results. Many AI project failures are not caused by model flaws but by poor instructions. Without clarity, even advanced models like GPT-4 or Claude may produce vague, biased, or incomplete outputs.

A good prompt is not necessarily long; it is structured, specific, and optimized for the task. For example, asking “Write a report” may lead to a generic reply. A refined version such as “Act as a financial analyst. Summarize this quarterly report in 200 words with three risks and two recommendations” produces output the reader can act on. This difference highlights why structure matters.

In this blog, we break down practical methods for writing prompts that work. You will see how AI prompt design techniques and advanced prompt engineering methods improve results. We will also explore how large language model prompt tuning ensures accuracy in enterprise settings.

By the end, you will have a framework to build prompts with clarity, context, and consistency, delivering reliable results every time.

For a broader perspective, see our detailed article on Responsible AI Development.

Why Prompt Engineering Is a Core Skill

AI results depend heavily on prompt clarity. In practice, many output errors trace back to poor prompt design rather than technical limitations. This makes structured prompting an essential skill, not a side task.

Here are three foundations of effective prompting:

- Understand Your Model – Models differ in strengths. Some are creative, while others handle analysis better. Tailor prompts to the system you are using.

- Know the Constraints – Token limits, format handling, and budget affect responses. Some models perform best when asked for tables or structured formats.

- Test and Iterate – Prompting requires refinement. Comparing variations and analyzing results improves stability and accuracy.

These practices form the base of prompt engineering best practices. With clear methods, you reduce bias, improve compliance, and achieve results that align with your goals.

You can explore more on this in our guide to AI for Small Business.

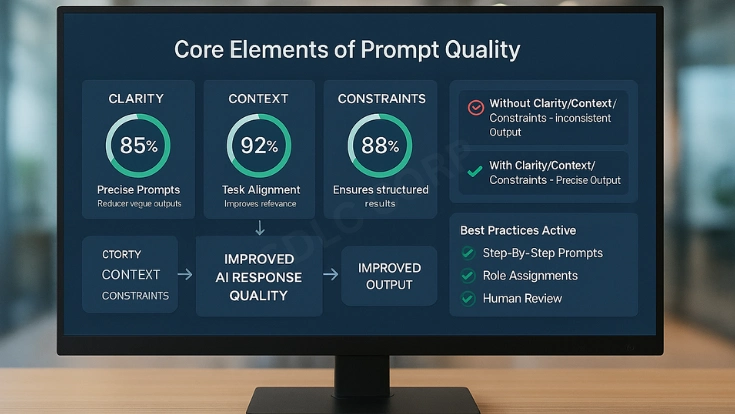

Core Elements of a High-Quality Prompt

High-quality prompts share three traits: specificity, context, and format.

- Specificity: Avoid vague directions. Say “Write exactly 300 words with two recommendations” instead of “briefly discuss.”

- Context: Assign roles such as “Act as a cybersecurity analyst.” This directs tone and focus.

- Format: State how you want outputs, for example bullet points, JSON, tables, or paragraphs.

Add examples when possible. Few-shot learning shows the AI what good results look like. Clarify tone and detail level as well. Stating “Explain in technical detail” versus “Give an executive summary” makes a difference.
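As a rough illustration, the three traits and the optional examples can be assembled by a small helper. This is a minimal sketch, not a standard API: `PromptSpec` and `build_prompt` are hypothetical names, and the layout of the final prompt is only one reasonable choice.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical container for the three traits plus optional examples."""
    role: str            # context: who the model should act as
    task: str            # specificity: exactly what to produce
    output_format: str   # format: how the answer should be laid out
    examples: list[tuple[str, str]] = field(default_factory=list)  # few-shot pairs


def build_prompt(spec: PromptSpec, source_text: str) -> str:
    """Assemble a plain-text prompt from a spec and the input text."""
    parts = [f"Act as {spec.role}.", spec.task, f"Format: {spec.output_format}"]
    # Each few-shot pair shows the model one input and the output we want for it.
    for sample_input, sample_output in spec.examples:
        parts.append(f"Example input:\n{sample_input}\nExample output:\n{sample_output}")
    parts.append(f"Input:\n{source_text}")
    return "\n\n".join(parts)


spec = PromptSpec(
    role="a financial analyst",
    task="Summarize this quarterly report in 200 words with three risks and two recommendations.",
    output_format="two short paragraphs followed by a bullet list of risks",
)
prompt = build_prompt(spec, "Q3 revenue rose 4 percent while costs rose 9 percent.")
print(prompt)
```

Keeping role, task, and format as separate fields makes it easier to change one without disturbing the others.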

Together, these steps create effective natural language prompts. They allow different teams to apply prompts without needing deep expertise. The result is consistency, accuracy, and clarity across workflows.

For further reading, check our post on Personal AI Agents Explained

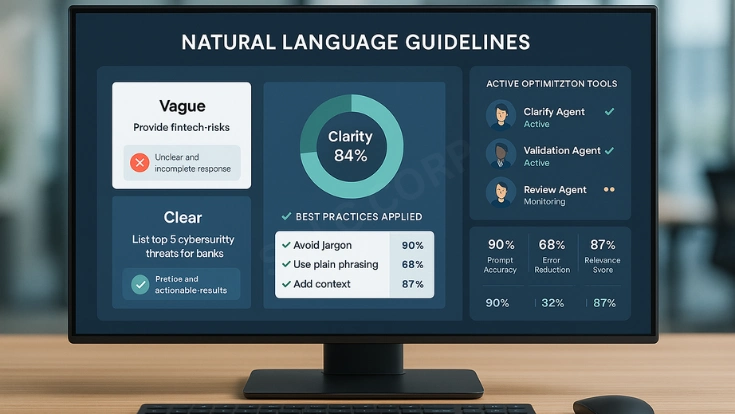

Natural Language Matters

AI models work best when instructions reflect natural human communication. Overly complex or abstract wording reduces clarity.

For example:

- Complex: “Provide cyber risk vectors in the fintech sector.”

- Clear: “List the top five cybersecurity threats for financial firms.”

The second version uses plain English, is easier for humans to check, and guides the model more effectively. These are hallmarks of effective natural language prompts.

This approach makes AI adoption easier across teams. Analysts, engineers, and managers can all apply structured prompts without requiring advanced technical knowledge. Natural language enhances collaboration and reduces misinterpretation.

Using this style within prompt engineering best practices ensures clarity and reliability while keeping outputs accessible for both technical and non-technical users.

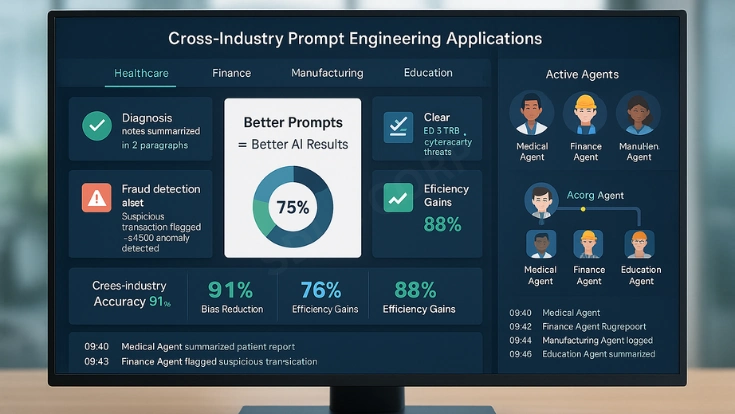

Practical Applications Across Industries

Prompt engineering has real value across industries, and best practices adapt to these contexts.

- Healthcare: Used for medical literature reviews, patient record summaries, and drug interaction checks. Here, precision and compliance are critical.

- Finance: Applied for fraud detection reports, policy interpretation, and risk analysis. Prompts need accuracy and auditability.

- Manufacturing: Used for production summaries, equipment issue detection, and safety guideline documentation. Clarity prevents costly errors.

- Education: Supports curriculum design, test preparation, and grading feedback. Structure ensures relevance for learning outcomes.

These use cases show how effective AI prompt design shapes trust and reliability.

Advanced Prompt Engineering Methods

For enterprise applications, advanced methods take prompting to the next level:

- Chain of Thought → Ask the AI to reason step by step before giving its final answer.

- Prompt Chaining → Connect multiple prompts where each depends on the last.

- Few-Shot Learning → Show examples to shape output quality and tone.

- Structured Outputs → Request JSON, tables, or bullet lists for predictable results.

- Role Assignments → Define expertise with prompts like “Act as a legal advisor.”

These advanced prompt engineering methods produce repeatable, reliable results. They reduce randomness, enforce structure, and allow AI to scale across business operations.
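Prompt chaining in particular is easy to sketch in code. The example below assumes the model is available as a plain function that takes a prompt and returns text. `run_chain`, `fake_model`, and the `{previous}` placeholder are illustrative names, and the stub stands in for whichever model API you actually use.

```python
from typing import Callable

# Any function that takes a prompt string and returns the model's reply.
ModelFn = Callable[[str], str]


def run_chain(model: ModelFn, templates: list[str], initial_input: str) -> list[str]:
    """Run templates in order, feeding each reply into the next {previous} slot."""
    outputs = []
    previous = initial_input
    for template in templates:
        reply = model(template.format(previous=previous))
        outputs.append(reply)
        previous = reply  # the next step depends on this step's output
    return outputs


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return f"[reply to a {len(prompt)}-character prompt]"


steps = [
    "Act as a legal advisor. Summarize this contract clause in three bullet points:\n{previous}",
    "List two risks raised by this summary:\n{previous}",
]
results = run_chain(fake_model, steps, "The supplier may terminate with 30 days notice.")
for step_number, reply in enumerate(results, start=1):
    print(step_number, reply)
```

Because every intermediate reply is returned, a reviewer can inspect each step instead of only the final answer.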

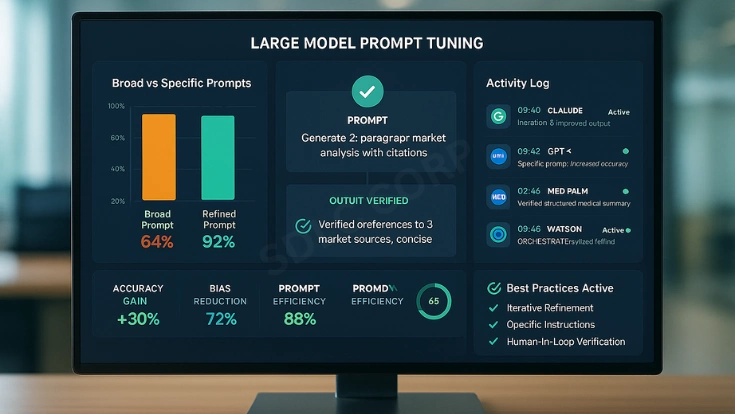

Large Language Model Prompt Tuning

Large language model prompt tuning is the process of refining and testing prompts until results are accurate and repeatable. Unlike smaller systems, large models can handle complex reasoning and generate nuanced outputs. However, they are also highly sensitive to how instructions are phrased. A single word change can shift the tone, structure, or even factual accuracy of the answer.

For example, asking “Write a market analysis of the retail sector” may give a broad summary. But if you say “Write a two-paragraph market analysis of the retail sector, include three risks and cite two sources,” you get actionable, formatted insights. This illustrates why tuning is essential.

Tuning involves four steps:

- Design structured prompts → Begin with role, task, and format.

- Test variations → Compare different wordings to measure impact.

- Refine iteratively → Adjust phrasing, tone, and constraints until consistent.

- Document and reuse → Save effective prompts in a shared library.
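The "test variations" step can be made concrete with a small comparison harness. This is a sketch under stated assumptions: the model is any function from prompt to text, the scoring rule (`meets_format`) is a hypothetical check that you would replace with your own criteria, and `fake_model` is a stub so the example runs without an API.

```python
from typing import Callable

ModelFn = Callable[[str], str]
ScoreFn = Callable[[str], float]


def compare_variants(
    model: ModelFn, variants: dict[str, str], score: ScoreFn, runs: int = 3
) -> dict[str, float]:
    """Return the mean score of each prompt variant over several runs."""
    results = {}
    for name, prompt in variants.items():
        scores = [score(model(prompt)) for _ in range(runs)]
        results[name] = sum(scores) / len(scores)
    return results


def meets_format(reply: str) -> float:
    """Hypothetical check: 1.0 if the reply has exactly three bullet lines."""
    bullets = [line for line in reply.splitlines() if line.startswith("- ")]
    return 1.0 if len(bullets) == 3 else 0.0


def fake_model(prompt: str) -> str:
    """Stub: returns three bullets only when the prompt asks for three risks."""
    if "three risks" in prompt:
        return "- risk a\n- risk b\n- risk c"
    return "Retail is a broad sector."


variants = {
    "broad": "Write a market analysis of the retail sector.",
    "structured": (
        "Write a two-paragraph market analysis of the retail sector, "
        "include three risks and cite two sources."
    ),
}
ranking = compare_variants(fake_model, variants, meets_format)
best = max(ranking, key=ranking.get)
print(ranking, best)
```

Averaging over several runs matters with a real model, since the same prompt can produce different replies each time.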

Over time, organizations build a knowledge base of prompts that consistently deliver quality. This reduces errors, lowers the risk of hallucinations, and supports compliance by making responses more predictable.

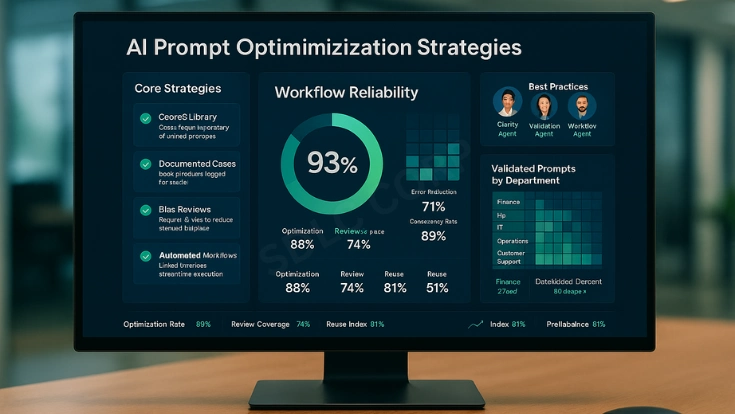

Tuning is also part of AI prompt optimization strategies. It helps prompts transfer across industries and stay effective as models evolve. The result is a scalable framework where small refinements can yield significant performance improvements.

Building AI Workflow Strategies

Prompts alone are not enough to scale AI usage. To move from experimentation to stability, teams must embed prompts within structured workflows. This means creating repeatable processes, libraries, and tools that ensure consistency.

A practical workflow includes four parts:

- Prompt Library → Build a repository with tested, domain-specific prompts. Each should include context, examples, and expected outcomes.

- Validation System → Use review cycles or peer audits to test whether results match business needs. This prevents unreliable outputs from entering critical workflows.

- Automation → Integrate prompts into dashboards or APIs so they can run at scale. For example, a customer support team might use automated prompts to summarize tickets.

- Documentation → Record why a prompt works and when to use it. This allows teams to reuse knowledge across departments.
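The library, validation, and documentation parts can live in one small data structure. The sketch below is illustrative only: `PromptEntry`, `PromptLibrary`, and `UnvalidatedPromptError` are hypothetical names, and a real team would likely back this with a database or a version-controlled file.

```python
from dataclasses import dataclass


class UnvalidatedPromptError(Exception):
    """Raised when someone requests a prompt that has not passed review."""


@dataclass
class PromptEntry:
    name: str
    domain: str
    template: str
    expected_outcome: str
    notes: str = ""          # documentation: why it works and when to use it
    validated: bool = False  # set to True only after a review cycle


class PromptLibrary:
    def __init__(self) -> None:
        self._entries: dict[str, PromptEntry] = {}

    def add(self, entry: PromptEntry) -> None:
        self._entries[entry.name] = entry

    def approve(self, name: str) -> None:
        """Mark a prompt as validated after peer review."""
        self._entries[name].validated = True

    def get(self, name: str) -> PromptEntry:
        """Return a prompt only if it has been validated."""
        entry = self._entries[name]
        if not entry.validated:
            raise UnvalidatedPromptError(f"Prompt '{name}' has not passed review")
        return entry


library = PromptLibrary()
library.add(PromptEntry(
    name="ticket-summary",
    domain="customer support",
    template="Summarize this support ticket in three bullet points:\n{ticket}",
    expected_outcome="Three bullets covering issue, impact, and next step",
    notes="Use for first-line triage; not tested on billing disputes.",
))
library.approve("ticket-summary")
print(library.get("ticket-summary").template)
```

Refusing to hand out unvalidated prompts is one simple way to keep untested wording out of critical workflows.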

Consider a finance team using AI for risk assessments. Without workflows, different analysts may write different prompts, leading to inconsistent reports. A workflow library ensures every analyst uses prompts that are already validated, producing reliable and comparable outcomes.

These strategies transform prompt engineering best practices from individual skills into organizational assets. When workflows are in place, AI becomes less experimental and more of a dependable tool for daily operations.

Human–AI Collaboration

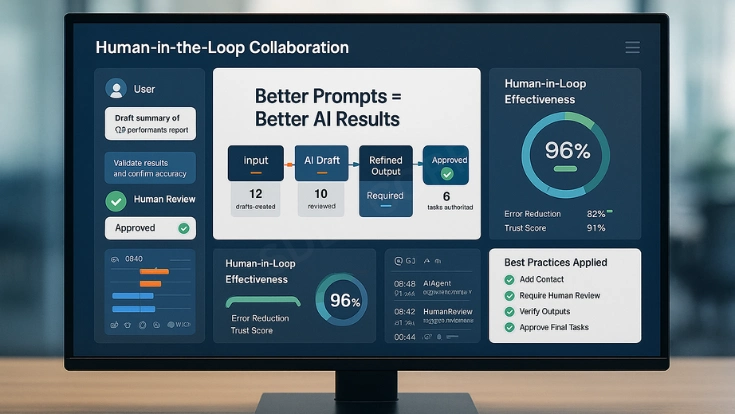

AI is powerful, but it cannot replace human expertise. Models generate insights, draft content, and highlight trends. However, they cannot fully understand business priorities, ethical boundaries, or compliance requirements. This is why human oversight is critical.

In a human-in-the-loop model, AI handles tasks like summarizing research or generating alternatives. Humans then review, validate, and refine these outputs. For example, a legal team might ask AI to summarize a case. The model can prepare a structured draft, but lawyers must confirm accuracy and context before it is used.

This collaboration ensures accountability. Humans bring judgment, context, and ethical oversight that models cannot replicate. AI contributes speed, scale, and efficiency. Together, they create a balanced workflow that maximizes strengths while reducing risks.

To implement effective collaboration, organizations should:

- Define boundaries → Clarify what AI can do and where human review is required.

- Set review protocols → Require humans to validate outputs before final decisions.

- Maintain feedback loops → Use human corrections to refine prompts for future accuracy.
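These three practices can be expressed as a simple review gate. The following is a minimal sketch with hypothetical names (`Draft`, `review`, `release`, `collect_feedback`). It assumes one reviewer decision per draft, which real review protocols may well extend.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PENDING = "pending"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Draft:
    prompt: str
    output: str
    status: Status = Status.PENDING
    reviewer_note: str = ""


def review(draft: Draft, approved: bool, note: str = "") -> Draft:
    """Record a human reviewer's decision on an AI-generated draft."""
    draft.status = Status.APPROVED if approved else Status.REJECTED
    draft.reviewer_note = note
    return draft


def release(draft: Draft) -> str:
    """Return the output only if a human has approved it."""
    if draft.status is not Status.APPROVED:
        raise RuntimeError("Output needs human approval before use")
    return draft.output


def collect_feedback(drafts: list[Draft]) -> list[str]:
    """Gather notes from rejected drafts to guide the next prompt revision."""
    return [d.reviewer_note for d in drafts if d.status is Status.REJECTED and d.reviewer_note]


summary = Draft(prompt="Summarize this case.", output="The court held that...")
review(summary, approved=False, note="Missing the jurisdiction and the date of the ruling")
print(collect_feedback([summary]))
```

The rejection notes double as the feedback loop: they show what the next version of the prompt should ask for explicitly.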

When applied consistently, this approach supports compliance, prevents misuse, and aligns AI adoption with long-term business strategy. It also ensures that AI prompt design techniques are applied responsibly, reinforcing trust in outputs.

Common Pitfalls in Prompt Engineering

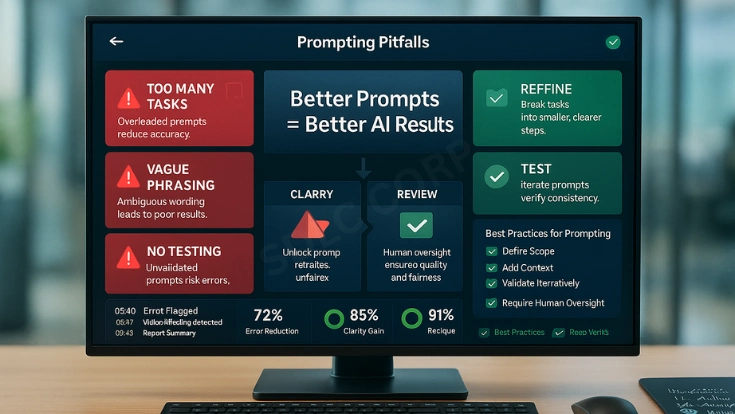

Even with clear methods, teams often face challenges when designing prompts. These pitfalls reduce accuracy and create inefficiency if not addressed.

- Overloading Prompts → Asking a model to “Summarize this report, suggest five improvements, and write a press release” in one instruction usually produces poor results. Breaking it into steps works better.

- Vague Instructions → Phrases like “Write briefly” or “Give insights” leave too much room for interpretation. Always define scope and format.

- Neglecting Bias and Compliance → Without review, outputs may reflect bias from training data. Prompts should include compliance checks when used in regulated industries.

- Skipping Iteration → Treating a prompt as final after one test misses opportunities for refinement. Testing variations helps reduce unpredictability.
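Some of these pitfalls can be flagged automatically before a prompt is ever sent. The check below is a deliberately crude sketch: the phrase list, the verb list, and the `max_tasks` threshold are all assumptions chosen for illustration. Such a check can only hint at a problem, never confirm one.

```python
import re

# Illustrative lists only; extend them with phrases your own team overuses.
VAGUE_PHRASES = ("write briefly", "give insights", "briefly discuss")
TASK_VERBS = ("summarize", "suggest", "write", "list", "explain", "translate")


def lint_prompt(prompt: str, max_tasks: int = 2) -> list[str]:
    """Return warnings for vague wording and for too many tasks in one prompt."""
    warnings = []
    lowered = prompt.lower()
    for phrase in VAGUE_PHRASES:
        if phrase in lowered:
            warnings.append(f"vague phrase: '{phrase}'")
    # Count task verbs as a rough proxy for how many jobs the prompt asks for.
    words = re.findall(r"[a-z]+", lowered)
    tasks = [word for word in words if word in TASK_VERBS]
    if len(tasks) > max_tasks:
        warnings.append(f"possible overload: {len(tasks)} task verbs ({', '.join(tasks)})")
    return warnings


overloaded = "Summarize this report, suggest five improvements, and write a press release"
for warning in lint_prompt(overloaded):
    print(warning)
```

A warning here is a cue to split the prompt into steps or to add scope and format, not a verdict on its quality.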

For example, in healthcare, a vague prompt could lead to unsafe or incomplete medical summaries. In finance, ignoring compliance can result in reports that fail audit requirements. Both cases highlight why structured prompts are non-negotiable.

By recognizing these pitfalls early, teams can design safer, clearer, and more effective workflows. Simple fixes, such as using advanced prompt engineering methods like chaining or structured outputs, can transform performance.

The Role of Continuous Improvement

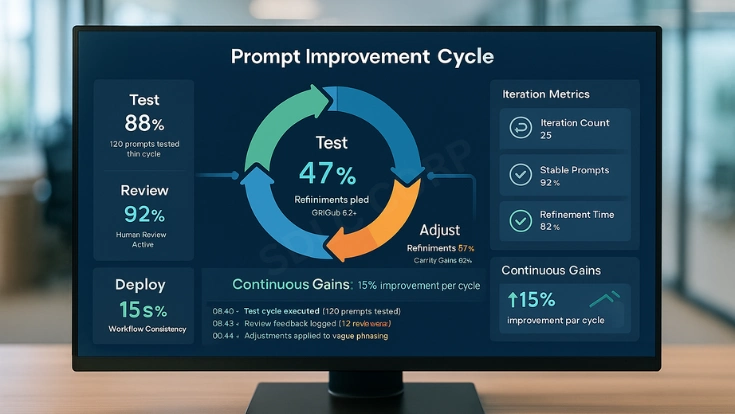

Prompt engineering is not a one-time skill. As models evolve, so do the techniques required to use them effectively. Organizations that adopt continuous improvement remain ahead, while those that treat prompting as static fall behind.

Improvement begins with regular testing. Models are updated often, and prompts that worked yesterday may not perform the same today. Re-running evaluations after each model update catches regressions before they reach users.
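One way to make such testing routine is a small regression check that re-runs saved prompts and verifies the shape of each reply. The sketch below assumes prompts that request JSON output and a model exposed as a plain function. `check_structured_reply` and `run_regression` are hypothetical helpers, not part of any library.

```python
import json
from typing import Callable

ModelFn = Callable[[str], str]


def check_structured_reply(reply: str, required_keys: set[str]) -> list[str]:
    """Return a list of problems; an empty list means the reply passed."""
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value is not a JSON object"]
    missing = sorted(required_keys - set(data))
    return [f"missing key: {key}" for key in missing]


def run_regression(
    model: ModelFn, cases: list[tuple[str, set[str]]]
) -> dict[str, list[str]]:
    """Re-run each saved prompt and collect the ones whose replies fail."""
    failures = {}
    for prompt, required_keys in cases:
        problems = check_structured_reply(model(prompt), required_keys)
        if problems:
            failures[prompt] = problems
    return failures


def fake_model(prompt: str) -> str:
    """Stub that simulates a model update dropping the 'risks' field."""
    return '{"summary": "Stable quarter."}'


cases = [("Return JSON with keys summary and risks.", {"summary", "risks"})]
print(run_regression(fake_model, cases))
```

Running a check like this on a schedule, or whenever the model version changes, turns "prompts that worked yesterday" into something measurable.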

Next is feedback collection. Every time a user corrects or improves an AI output, that insight should be captured. Over time, these corrections become part of the organization’s prompt knowledge base.

Teams should also practice prompt versioning. By storing different iterations and noting their results, it becomes easier to refine prompts systematically. This mirrors software version control and brings structure to prompt management.
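Prompt versioning can start as a very small record of iterations and notes. The sketch below uses hypothetical names (`PromptVersion`, `VersionedPrompt`) and keeps history in memory. In practice many teams would simply store prompts in their existing version control system.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class PromptVersion:
    version: int
    text: str
    note: str      # what changed and what result was observed
    created: date


@dataclass
class VersionedPrompt:
    name: str
    history: list[PromptVersion] = field(default_factory=list)

    def commit(self, text: str, note: str, created: Optional[date] = None) -> PromptVersion:
        """Append a new iteration; earlier versions are never overwritten."""
        entry = PromptVersion(len(self.history) + 1, text, note, created or date.today())
        self.history.append(entry)
        return entry

    def latest(self) -> PromptVersion:
        return self.history[-1]

    def rollback(self, version: int) -> PromptVersion:
        """Re-commit an earlier version's text as the newest entry."""
        if not 1 <= version <= len(self.history):
            raise ValueError(f"No version {version} for prompt '{self.name}'")
        return self.commit(self.history[version - 1].text, f"rollback to v{version}")


ticket_prompt = VersionedPrompt("ticket-summary")
ticket_prompt.commit("Summarize the ticket.", "initial draft; replies too long")
ticket_prompt.commit("Summarize the ticket in three bullet points.", "added format; replies consistent")
print(ticket_prompt.latest().version, ticket_prompt.latest().note)
```

Recording a rollback as a new entry, rather than deleting later versions, preserves the full trail of what was tried and why.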

Finally, knowledge sharing is critical. A prompt that works in one department may also help another. For example, a customer service summary prompt could be adapted for HR case reviews. Sharing reduces duplication and creates standardization across teams.

Continuous refinement ensures that prompt engineering best practices stay relevant as AI capabilities grow. This cycle of testing, learning, and sharing builds resilience and ensures organizations can trust AI outputs in the long term.

Conclusion

Prompts shape the way AI delivers results. Clear, structured, and context-rich instructions turn vague outputs into actionable insights. By applying prompt engineering best practices, organizations gain accuracy, reliability, and efficiency.

Methods such as AI prompt design techniques, advanced prompt engineering methods, and large language model prompt tuning provide the framework for consistent results. These practices go beyond experimentation, embedding AI into workflows that deliver measurable business value.

Equally important is collaboration between humans and AI. With oversight, feedback, and ethical review, AI becomes a reliable partner rather than a risk. Combined with workflows, testing, and continuous improvement, organizations can scale AI responsibly.

The next step is not only to understand these practices but also to apply them across industries. From finance and healthcare to education and manufacturing, prompt engineering is shaping reliable AI adoption.

Hire AI Development Services with SDLC Corp and explore how tailored solutions can transform your organization.

FAQs

What Are Prompt Engineering Best Practices?

Prompt engineering best practices include writing clear, specific instructions, adding context through role assignments, and defining constraints such as word count or format. These methods reduce randomness and help AI deliver consistent, accurate outputs.

How Do AI Prompt Design Techniques Improve Model Accuracy?

AI prompt design techniques improve model accuracy by structuring prompts in a way that reduces ambiguity. Using natural language, role framing, and step-by-step instructions ensures that outputs align with intended goals.

Why Is Large Language Model Prompt Tuning Important?

Large language model prompt tuning is important because small changes in wording can significantly affect output quality. By iterating, testing, and refining prompts, organizations can improve reliability and reduce errors in AI workflows.

What Are Some Effective Natural Language Prompts For AI?

Effective natural language prompts for AI are those written in plain, professional language. For example, instead of saying “Explain fintech risks,” a better prompt would be “List the top five cybersecurity threats for financial firms.” This ensures clarity and measurable results.

Which Advanced Prompt Engineering Methods Are Used In Enterprises?

Enterprises often use advanced prompt engineering methods such as few-shot learning, prompt chaining, and structured outputs. These approaches help AI deliver domain-specific, repeatable results that support large-scale operations.