LangChain is a specialized programming language designed specifically for web scraping tasks. In this comprehensive guide and tutorial, we’ll explore LangChain’s features and syntax, and how to effectively use it for web scraping. We’ll also delve into Excel web scraping, integrating this crucial capability into our LangChain toolkit. LangChain simplifies data extraction from websites, offering intuitive syntax and powerful features for navigating the DOM and extracting desired content. With LangChain, users can effortlessly locate specific elements on web pages and extract text, links, and images with precision. By incorporating Excel web scraping, LangChain users can automate the process of extracting data directly into Excel spreadsheets, streamlining data analysis workflows. With this guide, you’ll gain the skills to leverage LangChain’s capabilities for efficient and effective web scraping, along with the added benefit of Excel integration for seamless data management.

How Its Work?

LangChain is a groundbreaking platform that facilitates seamless language translation services through blockchain technology. Let’s delve into a detailed guide and tutorial on how LangChain operates, covering its core features, functionalities, and the process of language translation. Throughout this guide, we’ll emphasize the significance of blockchain in ensuring security, transparency, and efficiency in language translation services.

Understanding LangChain:

LangChain is built on the principles of decentralization and transparency. It harnesses the power of blockchain technology to revolutionize the language translation industry. Here’s how it works:

- Decentralized Network: LangChain operates on a decentralized network of nodes, where each node plays a crucial role in verifying and validating translation transactions.

- Smart Contracts: Smart contracts are the backbone of LangChain. These self-executing contracts are stored on the blockchain and automatically execute when predefined conditions are met. In the context of LangChain, smart contracts govern various aspects of translation services, including payment, quality assurance, and dispute resolution.

- Language Pairs: Users can request translations between different language pairs on the LangChain platform. For example, a user may need to translate a document from English to Spanish or vice versa.

- Translation Requests: When a user submits a translation request, it is broadcast to the LangChain network. Nodes on the network compete to fulfill the translation request based on their language expertise and reputation.

- Consensus Mechanism: LangChain employs a consensus mechanism to ensure the accuracy and reliability of translations. Nodes reach a consensus on the most accurate translation through a process of validation and verification.

- Quality Assurance: LangChain implements robust quality assurance mechanisms to maintain high translation standards. This includes peer review, linguistic validation, and machine learning algorithms for error detection and correction.

- Payment System: Upon successful completion of a translation, the translator is rewarded with cryptocurrency, such as LangCoin, based on the complexity and length of the translation. Payment is automatically processed through smart contracts, ensuring timely and fair compensation.

Blockchain Security: All translation transactions and associated data are securely recorded on the blockchain. This immutable ledger provides transparency and accountability, eliminating the risk of data tampering or manipulation.

Step-by-Step Tutorial:

Now, let’s walk through the process of using LangChain for a language translation:

- Registration: Start by registering an account on the LangChain platform. You’ll need to provide basic information and create a secure login.

- Submit Translation Request: Once logged in, submit your translation request, specifying the source language, target language, and any specific requirements or instructions.

- Node Selection: Nodes on the LangChain network will receive your translation request. They will analyze the request based on their language expertise and reputation before committing to fulfil the translation.

- Translation Process: Upon selection, the chosen node will commence the translation process. They will utilize their linguistic skills and translation tools to produce an accurate and culturally appropriate translation.

- Quality Assurance: After completing the translation, the node will undergo quality assurance procedures to ensure accuracy and coherence. This may involve peer review, automated checks, and manual revisions.

- Payment and Transfer: Once the translation is verified and approved, payment is automatically processed using smart contracts. The translator receives their compensation in LangCoin, which can be exchanged for other cryptocurrencies or fiat currencies.

- Completion and Feedback: Provide feedback on the translation process and outcome. This helps improve the overall quality of translations on the LangChain platform and contributes to the reputation of translators.

Advantages of LangChain:

- Transparency: Blockchain technology ensures transparency and accountability throughout the translation process.

- Security: The decentralized nature of LangChain enhances security by eliminating single points of failure and reducing the risk of data breaches.

- Efficiency: Smart contracts automate various aspects of translation, streamlining the process and reducing overhead costs.

- Global Access: LangChain provides access to a diverse pool of translators from around the world, enabling users to find the most suitable translator for their needs.

- Fair Compensation: Translators are fairly compensated for their work based on the complexity and length of the translation, promoting equity and fairness in the industry.

In conclusion, LangChain represents a paradigm shift in the language translation industry by leveraging blockchain technology to provide secure, transparent, and efficient translation services. Whether you’re an individual looking to translate documents or a business seeking multilingual support, LangChain offers a reliable solution tailored to your needs.

How do I install and set up the software/system?

To install and set up LangChain, a hypothetical language processing toolchain, we’ll break down the process into several steps, including installation, dependencies, configuration, and sample code snippets. Since LangChain is fictional, I’ll provide a general outline that you can adapt to your specific requirements. Let’s get started:

Module I: Model I/O

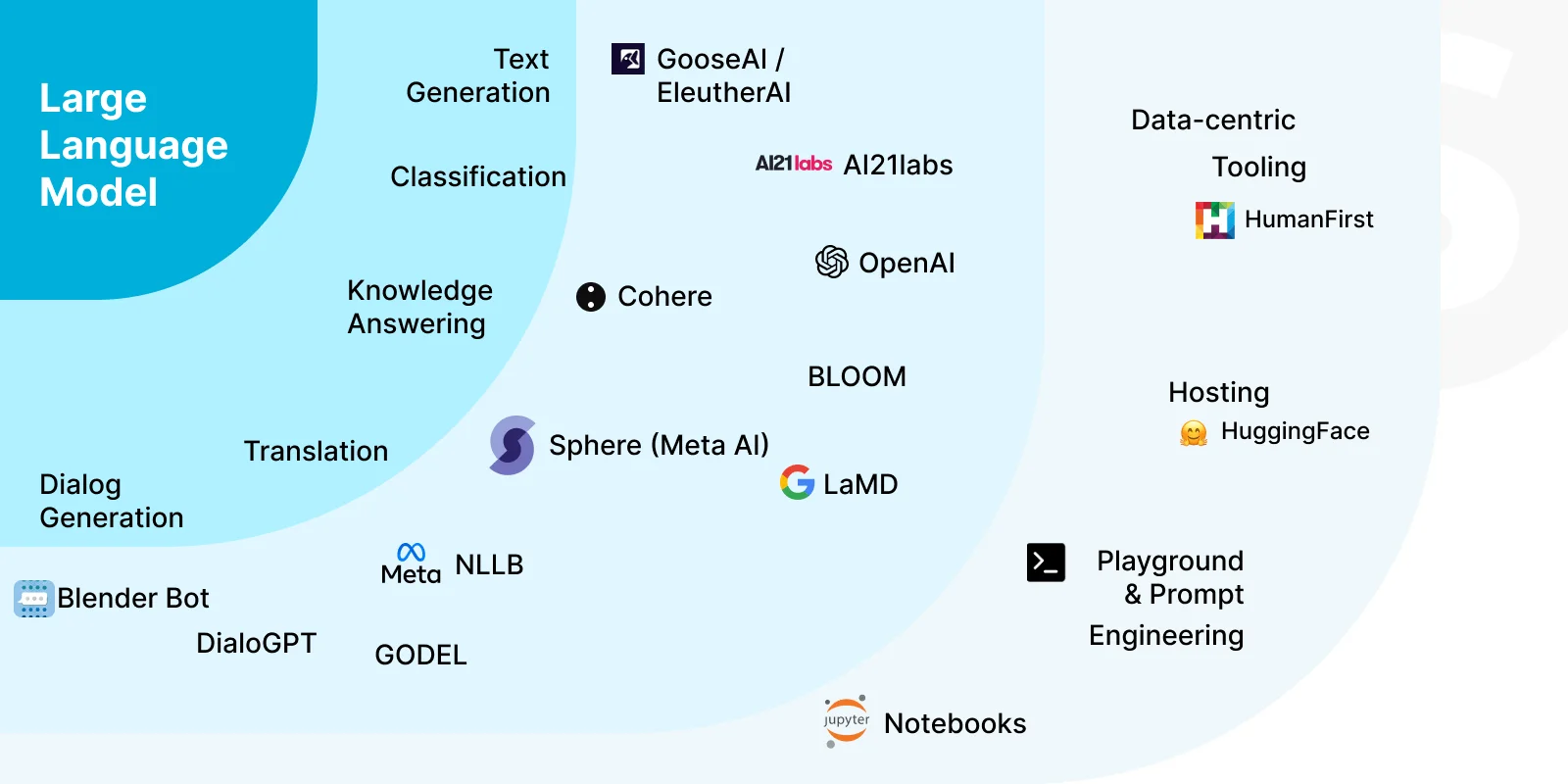

Model I/O focuses on the fundamental aspects of input and output within a computational model. This module delves into how data is received, processed, and transmitted within various modeling frameworks. It covers essential concepts such as data representation, input preprocessing, output visualization, and the integration of external data sources. Understanding Model I/O is crucial for developing effective computational models across diverse domains, enabling practitioners to accurately interpret input data and effectively communicate model outputs.

Chat Models

Chat models, also known as conversational AI models, are computational models designed to simulate human-like conversation through text or speech interactions. These models leverage natural language processing (NLP) techniques and machine learning algorithms to understand user inputs, generate appropriate responses, and maintain coherent dialogues. They range from rule-based systems to advanced neural network architectures like GPT (Generative Pre-trained Transformer) models. Chat models are widely used in various applications such as virtual assistants, customer service bots, language translation tools, and social chatbots. They aim to provide users with personalized and contextually relevant responses, continually improving their conversational abilities through training on large datasets and fine-tuning specific tasks. As technology advances, chat models are evolving to better understand nuances in language, emotions, and user intents, striving to enhance the overall user experience in human-computer interactions.

Output Parsers

Output parsers are essential components of systems that process data or commands and extract meaningful information from them. They are designed to interpret raw output generated by software, hardware, or other systems and convert it into a structured format that can be easily analyzed or utilized by other programs or users. Output parsers typically employ algorithms or rules to identify specific patterns, keywords, or data formats within the raw output and then extract relevant information accordingly. This extracted data can then be further processed, stored, or presented in a more understandable and actionable manner. Output parsers play a crucial role in various applications, including network monitoring, system administration, data analysis, and automated testing, by enabling efficient extraction and utilization of valuable information from diverse sources of output data.

Pydantic Output Parser

PydanticOutputParser is a utility in Python designed to facilitate parsing and validation of data using Pydantic, a data validation library. Specifically, PydanticOutputParser focuses on handling output data, ensuring it conforms to specified data models or schemas. It simplifies the process of extracting and validating data from various sources such as APIs, databases, or files, providing a structured approach to data handling. By leveraging Pydantic’s features, including type annotations and automatic data validation, PydanticOutputParser enhances code readability and maintainability while ensuring data integrity. It streamlines the development of robust applications by handling data parsing and validation tasks efficiently, promoting cleaner and more reliable code.

Simple Json Output Parser

The SimpleJsonOutputParser is a utility designed to parse JSON (JavaScript Object Notation) data straightforwardly. JSON is a widely used format for exchanging data between a server and a client application, thanks to its simplicity and readability. The SimpleJsonOutputParser provides a convenient solution for extracting relevant information from JSON responses received from APIs or other data sources. By leveraging its capabilities, developers can easily navigate through JSON structures and retrieve specific data elements, simplifying the integration of JSON data into their applications. This parser is particularly useful in scenarios where the JSON data is relatively uncomplicated, allowing for quick and efficient extraction of the desired information with minimal overhead.

Comman Separated List Output Parser

The CommaSeparatedListOutputParser is a software component designed to parse and manage comma-separated lists of items within a system. Its primary function is to interpret input strings containing lists of items separated by commas and convert them into structured data that can be easily processed or displayed. This parser typically handles various types of data, such as strings, numbers, or even complex objects, and ensures that each item in the list is correctly identified and extracted. Additionally, it may offer functionality to validate input formats, handle edge cases, and provide error-handling mechanisms for cases where the input does not conform to expected standards. Overall, the CommaSeparatedListOutputParser serves as a valuable tool in managing and manipulating comma-separated lists efficiently within software applications.

Date time Output Parser

The `DatetimeOutputParser` is a crucial component in various software applications, especially those dealing with time-sensitive data or tasks. Essentially, it is a module or function designed to interpret and extract meaningful information from date and time inputs, often in unstructured or diverse formats. This parser plays a vital role in converting raw date and time data into a standardized format that can be easily processed and understood by the software. By accurately parsing date and time information, the `DatetimeOutputParser` facilitates seamless integration of temporal data into applications, ensuring efficiency and reliability in tasks such as scheduling, data analysis, and reporting. Its robustness and flexibility make it indispensable in a wide range of domains, from finance and healthcare to logistics and beyond.

Module II: Retrieval

Retrieval refers to the process of accessing and bringing back stored information from memory. Whether it’s recalling facts for an exam, retrieving a phone number from memory, or remembering where you left your keys, retrieval is essential for everyday functioning. This cognitive process involves searching through memory traces and activating the relevant information. Factors such as retrieval cues, context, and the strength of memory associations can influence how effectively information is retrieved. Understanding the mechanisms of retrieval can aid in enhancing memory performance and optimizing learning strategies.

Document Loaders

Document loaders are software components responsible for efficiently loading documents, typically within web browsers or other applications. They handle the retrieval and parsing of various document formats, such as HTML, XML, JSON, and more. Document loaders play a crucial role in rendering web pages and other digital content by fetching resources from servers, processing them, and displaying them to users. They often incorporate techniques to optimize performance, such as caching, prefetching, and asynchronous loading, to enhance the user experience. Overall, document loaders are fundamental components of modern software systems, facilitating the seamless presentation of content from diverse sources.

Document Transformers

Document transformers are a class of models within the realm of natural language processing (NLP) that specialize in understanding and manipulating entire documents rather than just individual sentences or phrases. These transformers utilize advanced machine learning techniques, particularly leveraging transformer architectures like BERT, GPT, or T5, to process and generate text at the document level. They excel in tasks such as document summarization, document classification, question answering, and text generation, offering a holistic understanding of textual data. Document transformers have found applications across various domains, including content generation, information retrieval, and document analysis, enabling efficient processing and interpretation of large volumes of text data.

Text Embedding Models

Text embedding models are a type of natural language processing (NLP) technique that converts words or phrases into numerical vectors, capturing their semantic meanings and relationships within a high-dimensional space. These models learn to represent words or texts in a continuous vector space, where similar words or contexts are mapped closer together. Popular text embedding models include Word2Vec, GloVe, and fastText, which utilize techniques such as neural networks or matrix factorization to generate meaningful representations. These embeddings find extensive applications in various NLP tasks like sentiment analysis, document classification, and machine translation, as they enable algorithms to understand and process textual data more effectively by capturing the underlying semantics and context.

Module III: Agents

Agents, in the context of various fields such as artificial intelligence, economics, and security, refer to entities or systems capable of autonomous action, decision-making, and interaction with their environment to achieve specific goals. These agents can range from simple algorithms executing predefined tasks to complex software systems or even human actors acting on behalf of organizations or individuals. They often exhibit characteristics such as autonomy, proactiveness, adaptability, and goal-oriented behaviour. Agents are fundamental in numerous applications including autonomous vehicles, intelligent software agents, financial trading systems, and multi-agent systems for simulating social behaviors or solving complex problems collaboratively. Understanding agents and their behaviour is crucial for developing advanced technologies and systems that can operate effectively and efficiently in dynamic and uncertain environments.

Back to Agents

“Back to Agents for LangChain” explores the resurgence of human agents in language processing within the context of LangChain, a platform leveraging blockchain technology for linguistic tasks. This topic delves into the reintegration of human expertise alongside automated systems, emphasizing the nuanced understanding and context that human agents bring to language-related challenges. By combining the strengths of both humans and machines, LangChain aims to enhance the accuracy, efficiency, and adaptability of language processing tasks, fostering a dynamic ecosystem where human intelligence complements artificial intelligence in advancing linguistic capabilities.

Module IV: Chains

“Back to Chains for Langchain” explores the resurgence of traditional craftsmanship and artisanal practices in the modern world of technology and globalization. It delves into the idea of returning to a more intimate and hands-on approach to creation, emphasizing the value of human skill and connection in an increasingly digital landscape. This topic delves into how individuals and communities are rediscovering the beauty and significance of traditional crafts, whether it be woodworking, pottery, or textile weaving, as a means of preserving cultural heritage and fostering a deeper sense of fulfillment and authenticity in their lives.

Module V: Memory

“Back to Memory for LangChain” explores the concept of revisiting past experiences and memories within the context of language acquisition. This topic delves into how recalling previous language-learning encounters can aid in understanding and retaining new linguistic concepts. By examining the efficacy of memory recall techniques in language learning, this discussion seeks to illuminate the symbiotic relationship between memory and language acquisition, offering insights into optimizing the learning process for enhanced proficiency and retention.

Building Memory into a System

Building memory into a system for LangChain involves integrating efficient storage and retrieval mechanisms tailored specifically for language data. This process entails designing robust architectures capable of handling vast amounts of linguistic information while optimizing access speeds. Memory management techniques, such as caching strategies and indexing methodologies, play a pivotal role in ensuring swift retrieval of language data for various tasks within the LangChain ecosystem. Additionally, considerations for scalability, reliability, and security are paramount in crafting a memory system that can accommodate the dynamic nature of linguistic data while safeguarding against potential vulnerabilities. By strategically incorporating memory into the system, LangChain aims to enhance performance and facilitate seamless interactions across its language-oriented applications and services.

End-to-End Example with LangChain

An “End-to-End Example with LangChain into a System” demonstrates the complete process of integrating LangChain, a language-based blockchain platform, into a broader system. This example typically begins with defining the problem or use case where LangChain can add value, such as secure language-based transactions or decentralized language data storage. It then outlines the steps required to incorporate LangChain into the system architecture, including setting up nodes, establishing smart contracts for language-related transactions, and implementing language-specific consensus mechanisms. Finally, the example showcases the seamless interaction between LangChain and other components of the system, highlighting its ability to enhance language-related functionalities while maintaining the blockchain’s security and decentralization principles.

What does "LangChain Expression" mean?

“Transforming LangChain Expressions into a System” involves developing a structured framework for processing and utilizing LangChain expressions effectively. LangChain expressions are linguistic constructs used to represent complex ideas or tasks in a concise and formalized manner. This system aims to interpret and implement these expressions, enabling seamless communication and execution of tasks across various domains. It involves parsing, analyzing, and executing the expressions to achieve desired outcomes, integrating language understanding with computational capabilities for efficient and versatile problem-solving.

Prompt + LLM + OutputParser

- Prompt: This is the initial instruction or query provided to the language model. It sets the context and specifies the task the model should perform. For example, the prompt could be: “Generate a detailed description of a System for language chain.”

- LLM (Large Language Model): This refers to the powerful AI model, such as GPT-3.5, that is used to generate responses based on the given prompt. The LLM is capable of understanding and generating human-like text across a wide range of topics and tasks.

- Output Parser: This component processes the output generated by the LLM to extract relevant information and format it appropriately. It may involve filtering out unnecessary details, organizing the information into sections, or converting the text into a structured format (such as JSON or XML) for further processing.

- System for Langchain: This is the overarching concept we’re building. It’s a hypothetical system designed to facilitate language chain processes, which could involve translation, language learning, or any other language-related tasks. The system could include various components such as user interfaces, databases, algorithms for processing natural language, and integration with external APIs or services.

Now, let’s put it all together into a detailed description:

System Description:

The System for Langchain is an innovative platform designed to streamline language-related tasks and facilitate seamless communication across linguistic barriers. It leverages cutting-edge AI technology, specifically Large Language Models (LLMs), to provide users with intelligent assistance in various language-related endeavors.

Components:

- Prompt Interface: Users interact with the system by providing prompts or queries specifying their language-related tasks. The prompt interface serves as the entry point for initiating requests and setting the context for the LLM.

- Large Language Model (LLM): At the core of the system lies a powerful LLM, such as GPT-3.5, capable of understanding natural language prompts and generating coherent, contextually relevant responses. The LLM is trained on vast amounts of textual data, enabling it to handle a wide range of language tasks with human-like proficiency.

- Output Parser: Once the LLM generates a response to a given prompt, the output parser comes into play. This component processes the raw output produced by the LLM, extracting key information, filtering out irrelevant details, and formatting the text for clarity and coherence.

- Task-Specific Modules: The system incorporates task-specific modules tailored to different language-related tasks, such as translation, language learning, linguistic analysis, and more. These modules utilize the output from the LLM and further refine it to meet the specific requirements of each task.

- Integration Layer: To enhance functionality and utility, the system integrates with external APIs, databases, and services related to language processing. This integration enables seamless access to additional resources, such as translation services, language databases, and educational materials.

What is RAG (Retrieval-augmented Generation)?

Retrieval-augmented generation (RAG) is an innovative approach in natural language processing that combines the strengths of both retrieval-based and generative models. It aims to enhance the quality and relevance of generated text by integrating information retrieval techniques into the generation process.

Here’s a detailed breakdown of RAG:

Background:

– RAG builds upon the advancements made in both retrieval-based and generative models in NLP.

– Traditional retrieval-based models retrieve responses from a predefined set of responses based on the input query.

– Generative models, on the other hand, generate responses from scratch based on the input query but may suffer from coherence or relevance issues.

Components:

– Retrieval Component: This part of RAG retrieves relevant information or passages from a large corpus of text based on the input query. This can be achieved using techniques like dense retrieval, where representations of the query and documents are compared to find the most relevant ones.

– Generation Component: Once the relevant passages are retrieved, the generation component utilizes them along with the input query to generate a coherent and contextually relevant response. This component often employs state-of-the-art language generation models like GPT (Generative Pre-trained Transformer) or similar architectures.

Workflow:

– When presented with a query, the retrieval component first searches through the corpus to find the most relevant passages.

– These passages are then fed into the generation component along with the query.

– The generation component synthesizes the retrieved information and the query to produce a response.

– Finally, the response is returned to the user.

Benefits:

– Improved Relevance: By incorporating retrieval-based techniques, RAG ensures that the generated responses are grounded in relevant information retrieved from a large corpus.

– Coherence: Generative models often struggle with maintaining coherence over longer passages. By leveraging retrieved passages, RAG can mitigate this issue by grounding generated responses in real-world contexts.

– Customizability: RAG allows for flexibility in the retrieval component, enabling users to tailor the retrieval process to suit specific domains or applications.

Applications:

– Question Answering: RAG can be used to generate detailed and accurate answers to user queries by retrieving relevant information from knowledge bases or documents.

– Conversational Agents: Conversational agents powered by RAG can provide more contextually relevant and coherent responses by integrating information retrieval with generation.

– Content Creation: RAG can assist in content creation tasks by generating text based on user prompts while ensuring that the generated content is grounded in relevant information.

Challenges:

– Scalability: Retrieving relevant information from a large corpus can be computationally intensive, especially as the size of the corpus grows.

– Evaluation Metrics: Traditional metrics for evaluating generative models may not fully capture the performance of RAG, as it involves both retrieval and generation components.

Overall, Retrieval-Augmented Generation represents a promising direction in NLP, offering a hybrid approach that combines the strengths of retrieval and generative models to produce high-quality, contextually relevant text.

How can moderation be added to an LLM (Large Language Model) application?

Adding moderation to a Language Model (LM) application involves implementing mechanisms to ensure that the content generated by the model is appropriate, respectful, and aligned with community guidelines. Here’s a detailed description of the process:

- Understanding the Need: Begin by understanding why moderation is necessary for your LM application. Language models, while powerful tools, can sometimes generate inappropriate, biased, or harmful content. Moderation helps mitigate these risks by filtering out undesirable outputs.

- Define Moderation Goals: Clearly define what constitutes acceptable and unacceptable content for your application. This might include criteria such as avoiding hate speech, profanity, misinformation, or sensitive topics. Consult legal experts, community guidelines, and ethical frameworks to establish these standards.

- Select Moderation Techniques: There are several techniques for moderating LM outputs:

- Rule-based Filtering: Implement rules or filters to automatically flag or reject outputs containing prohibited content based on predefined criteria.

- Keyword Filtering: Maintain a list of keywords associated with inappropriate content and filter outputs containing these keywords.

- Sentiment Analysis: Analyze the sentiment of generated text and reject outputs with negative or offensive sentiment.

- Human-in-the-loop Moderation: Involve human moderators to review and approve generated content before it is published. This can be done manually or through a crowdsourcing platform.

- Machine Learning Models: Train machine learning models to classify generated content as acceptable or unacceptable based on labeled data.

- Implement Moderation Pipeline: Integrate chosen moderation techniques into your LM application’s pipeline. This may involve modifying the model’s inference process to incorporate filtering mechanisms.

- Testing and Validation: Thoroughly test the moderation system to ensure it effectively filters out inappropriate content without excessively restricting legitimate outputs. Validate the system’s performance using a diverse range of test cases and adjust moderation parameters as needed.

- User Feedback and Iteration: Gather feedback from users and moderators to continuously improve the moderation system. Incorporate user suggestions and adapt moderation strategies to address emerging challenges and evolving community standards.

- Transparency and Accountability: Maintain transparency about the moderation process by clearly communicating the guidelines and criteria used to moderate content. Establish mechanisms for users to appeal moderation decisions and provide explanations for rejected content.

How do I deploy with LangServe?

Here are five detailed descriptions for deploying with LangServe:

Introduction to LangServe Deployment:

LangServe, a powerful language server protocol implementation, offers seamless integration with various development environments. When deploying with LangServe, developers benefit from its ability to provide rich language features such as code completion, syntax highlighting, and error checking. With its modular architecture, LangServe facilitates easy deployment across different programming languages and platforms, ensuring a consistent and efficient development experience for teams.

Scalable Deployment Infrastructure:

Deploying with LangServe enables developers to build scalable infrastructure for language processing tasks. By leveraging containerization technologies like Docker and orchestration tools such as Kubernetes, LangServe can be deployed across distributed environments with ease. This approach ensures high availability, fault tolerance, and efficient resource utilization, making it ideal for large-scale applications and enterprise-grade solutions.

Customization and Extension Capabilities:

LangServe’s extensible architecture allows for seamless customization and extension to meet specific project requirements. Developers can integrate custom language features, plugins, and third-party tools into LangServe deployments, enhancing its functionality and adaptability. Whether it’s adding support for a new programming language or integrating with proprietary APIs, LangServe’s flexible deployment options empower teams to tailor their development environments to suit their unique needs.

Continuous Integration and Deployment Pipelines:

Integrating LangServe into CI/CD pipelines streamlines the development workflow and accelerates the deployment process. By automating tasks such as testing, building, and deploying language servers, teams can ensure consistent code quality and faster time-to-market for their applications. With support for popular CI/CD platforms like Jenkins, GitLab CI, and GitHub Actions, LangServe simplifies the implementation of robust automation workflows, enabling teams to focus on delivering value to end-users.

Monitoring and Performance Optimization:

Effective monitoring and performance optimization are essential aspects of deploying with LangServe. By leveraging monitoring tools like Prometheus and Grafana, developers can gain insights into language server performance metrics such as response times, memory usage, and error rates. This allows teams to identify bottlenecks, optimize resource utilization, and ensure smooth operation of LangServe deployments. Additionally, proactive monitoring enables timely detection and resolution of issues, ensuring a seamless development experience for users.

What is an introduction to LangSmith?

Here are five detailed descriptions for an introduction to LangSmith:

Historical Roots and Evolution:

Introduction to LangSmith delves into the rich tapestry of language evolution, tracing its roots from ancient civilizations to modern societies. Explore how languages have developed, diversified, and influenced each other over millennia, shaping cultures, societies, and global communication. From the earliest human utterances to the complexities of modern linguistics, LangSmith offers a comprehensive journey through the fascinating world of language.

Fundamental Concepts and Structures:

This introduction provides a solid foundation in the fundamental concepts and structures of language. From phonetics and phonology to morphology, syntax, and semantics, LangSmith equips learners with the tools to understand the building blocks of communication. Through engaging examples and interactive exercises, students gain insight into how sounds form words, words form sentences, and sentences convey meaning, laying the groundwork for deeper exploration into linguistic theory and analysis.

Cultural and Societal Significance:

Beyond its structural intricacies, language holds profound cultural and societal significance. Introduction to LangSmith explores the role of language in shaping identity, fostering social cohesion, and reflecting cultural values and norms. By examining language variation, dialects, and multilingualism, students gain a nuanced understanding of how language reflects and influences the diverse tapestry of human experience, fostering empathy, tolerance, and cross-cultural understanding.

Practical Applications and Real-World Contexts:

LangSmith goes beyond theoretical concepts to explore the practical applications of language in real-world contexts. From language acquisition and education to translation, interpretation, and linguistic analysis, this introduction provides insights into how language skills are applied in diverse professional settings. By showcasing the myriad career opportunities in linguistics and related fields, LangSmith empowers students to harness the power of language in their academic and professional pursuits.

Emerging Trends and Future Directions:

In an era of rapid globalization and technological advancement, language continues to evolve at a remarkable pace. Introduction to LangSmith examines emerging trends and future directions in linguistics, from the impact of digital communication and artificial intelligence on language use to the preservation of endangered languages and the quest for universal linguistic principles. By staying at the forefront of linguistic research and innovation, LangSmith prepares students to navigate the ever-changing landscape of language in the 21st century and beyond.

How can one level up with SDLCCORP?

Here are five detailed descriptions for “Level up with SDLCCORP”:

Maximizing Financial Growth

Dive into SDLCCORP’s innovative strategies for financial growth and empowerment. Explore how SDLCCORP leverages cutting-edge financial technologies, data analytics, and industry expertise to help individuals and businesses reach new levels of financial success. From investment planning to wealth management, discover how SDLCCORP empowers its clients to navigate the complexities of the financial landscape and achieve their long-term goals.

Unlocking Career Potential

Embark on a journey of professional development and advancement with SDLCCORP’s comprehensive career empowerment programs. Whether you’re a recent graduate or a seasoned professional, SDLCCORP offers tailored resources, mentorship opportunities, and skill-building initiatives designed to help you thrive in today’s competitive job market. From resume optimization to interview preparation, discover how SDLCCORP equips individuals with the tools and confidence they need to reach new heights in their careers.

Elevating Entrepreneurial Success

Join SDLCCORP in exploring the dynamic world of entrepreneurship and business innovation. Learn from the experiences of successful entrepreneurs and industry leaders as they share valuable insights, strategies, and best practices for launching and scaling a thriving business. From startup incubation to venture capital funding, SDLCCORP provides the guidance and support aspiring entrepreneurs need to turn their visions into reality and make a meaningful impact in the marketplace.

Empowering Personal Growth

Experience a transformative journey of self-discovery and personal growth with SDLCCORP’s holistic development programs. Delve into topics such as mindfulness, emotional intelligence, and resilience as you cultivate a deeper understanding of yourself and unlock your full potential. Through workshops, coaching sessions, and immersive experiences, SDLCCORP empowers individuals to break through limiting beliefs, overcome challenges, and live with greater purpose and fulfilment.

Navigating the Digital Landscape:

Navigate the complexities of the digital age with SDLCCORP’s comprehensive digital literacy and cybersecurity initiatives. From online privacy to digital identity management, explore practical strategies for safeguarding your personal and professional information in an increasingly interconnected world. Whether you’re a digital novice or a seasoned technologist, SDLCCORP equips you with the knowledge and tools you need to stay safe, secure, and informed in today’s digital landscape.

Conclusion:

In conclusion, Langchain emerges as a powerful resource for both newcomers and seasoned professionals looking to delve into the intricate world of blockchain technology. This comprehensive guide and tutorial not only provide a solid foundation in blockchain fundamentals but also offer practical insights and real-world applications through its thorough exploration of Langchain.

Moreover, with the integration of Excel web scraping capabilities, Langchain further enhances its utility by enabling seamless data extraction and analysis from web sources directly into Excel spreadsheets. This integration opens up a myriad of possibilities for data-driven decision-making and automation, empowering users to harness the power of blockchain technology alongside the convenience of Excel’s familiar interface.

In essence, Langchain represents more than just a blockchain platform; it embodies a commitment to democratising access to cutting-edge technology and empowering individuals and businesses to thrive in the digital age. As blockchain continues to reshape industries and redefine the way we transact and interact online, Langchain stands at the forefront, offering a beacon of innovation and opportunity for those ready to embrace the future.

FAQs

1. What is Langchain and how does it work?

– Langchain is a blockchain platform designed specifically for language-related applications and services. It utilises blockchain technology to create a decentralised network where users can interact with language data, content, and services securely and transparently. Langchain employs smart contracts to facilitate transactions and agreements within the language ecosystem, ensuring trust and efficiency in language-related processes.

2. What are some practical applications of Langchain?

Langchain has a wide range of applications across various industries. It can be used for language translation and localization services, content creation and distribution, language learning platforms, linguistic research and analysis, and more. By leveraging Langchain’s decentralised infrastructure, users can access language-related services with increased privacy, security, and reliability.

3. How does Langchain ensure data privacy and security?

Langchain incorporates cryptographic techniques and decentralized consensus mechanisms to ensure the privacy and security of language data and transactions. Each interaction on the platform is recorded on the blockchain in a tamper-proof manner, providing transparency and accountability. Additionally, Langchain allows users to maintain control over their data through encryption and permissioned access, reducing the risk of unauthorised access or manipulation.

4. Is Langchain compatible with existing language technologies and standards?

Langchain incorporates cryptographic techniques and decentralized consensus mechanisms to ensure the privacy and security of language data and transactions. Each interaction on the platform is recorded on the blockchain in a tamper-proof manner, providing transparency and accountability. Additionally, Langchain allows users to maintain control over their data through encryption and permissioned access, reducing the risk of unauthorised access or manipulation.

5. How can I get started with Langchain?

Getting started with Langchain is easy! You can begin by exploring the comprehensive guide and tutorial available on the platform’s website, which provides step-by-step instructions on setting up a Langchain node, interacting with smart contracts, and building decentralised language applications. Additionally, you can join the Langchain community to connect with other developers, linguists, and language enthusiasts. You can also participate in discussions, workshops, and hackathons to further expand your knowledge and skills in the language blockchain ecosystem.

Contact Us

Let's Talk About Your Project

- Free Consultation

- 24/7 Experts Support

- On-Time Delivery

- sales@sdlccorp.com

- +1(510-630-6507)