Introduction

In recent years, diffusion models have become a leading class of generative models, transforming how machines create content. They mark a major leap in AI generation techniques, producing high-quality outputs in image synthesis, audio generation, and more. Unlike older methods like GANs or VAEs, diffusion models use a probabilistic denoising process to generate data with impressive realism and diversity.

Diffusion models power advanced tools like Stable Diffusion and DALL·E 2, making them central to modern AI development services. Their precision and scalability enable next-gen applications in art, science, and design. Today, leading AI development companies use these models to deliver smart, creative, and automated solutions.


1. What Are Diffusion Models?

Diffusion models are a class of probabilistic generative models that create data by learning to reverse a process of gradually added noise. Generation starts from pure noise (a completely noisy image or signal) and refines it step by step into a meaningful output. Because each step only has to remove a small amount of noise, the model can learn nuanced and realistic representations, which is why diffusion techniques have become central to cutting-edge generative AI.

This iterative structure is what the term "denoising diffusion" refers to: rather than producing an output in a single pass, the model refines it over many steps. The result is images, audio, and other data that are more detailed and controllable than those from older models like GANs, which often suffer from unstable training.

Understanding how diffusion models work is essential to using them effectively in modern AI. They are more reliable to train than GANs and easier to guide with text prompts or visual cues. Because their step-by-step design exposes intermediate results, they are also more transparent and give developers more control over the creative process.

Key Concepts in Detail:

- Gradual Noise Reversal: Diffusion models learn to generate content by reversing a noising transformation, resulting in outputs that are clean, realistic, and controllable.

- Forward and Reverse Diffusion: The forward process introduces noise, while the reverse process uses learned patterns to remove it step by step and recover clean data.

- Stable and High-Quality Generation: These models are praised for producing consistent, high-resolution outputs with fewer errors or distortions than adversarial models.

- Foundation of Tools Like Stable Diffusion: Many popular AI art and image platforms today are built on diffusion-based architectures, allowing users to create content from simple prompts.

- Critical for Realistic AI Output: Whether in image synthesis, audio generation, or 3D modeling, diffusion models are essential when precision and realism are a top priority.

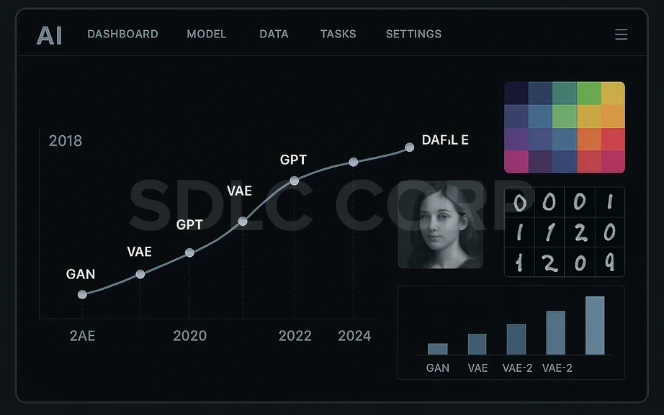

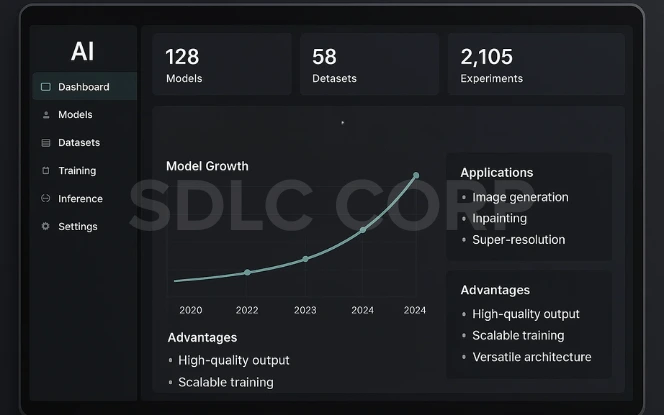

2. Evolution of Generative Models

The field of generative AI has evolved rapidly, from VAEs (Variational Autoencoders) to GANs (Generative Adversarial Networks) and now diffusion models. Generative AI refers to AI systems that create new content such as images, audio, or text, rather than only analyzing existing data. GANs became popular for producing sharp images, but they often suffer from unstable training and mode collapse, where the generator produces only a narrow range of outputs. In contrast, diffusion models generate more reliably and flexibly by learning to reverse a noise process. This shift marks a key moment in the evolution of generative AI: diffusion models offer better output quality and more stable training, and today they are widely considered among the most promising generative models.

Key Points:

- VAEs: Structured latent space, but often blurry results.

- GANs: Sharp images but hard to train.

- Diffusion Models: Stable, high-quality, and versatile.

- Diffusion marks a clear shift in the evolution of generative AI.

- Growing preference in research and production settings.

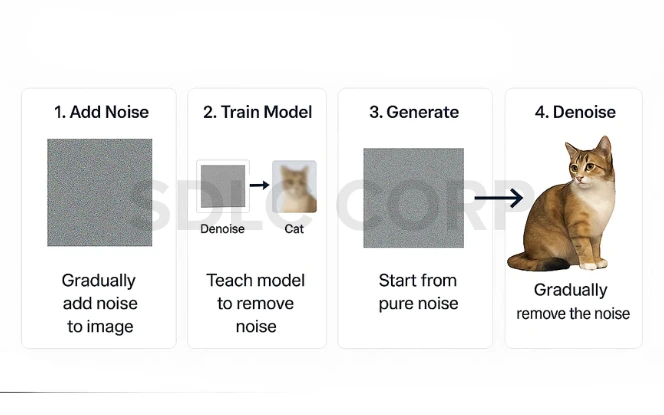

3. How Diffusion Models Work (Step-by-Step)

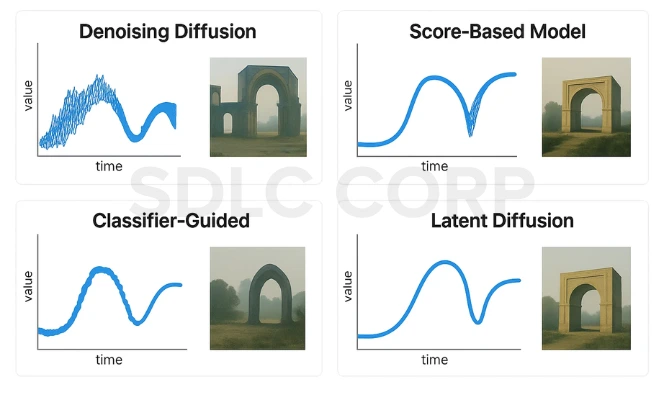

At the core of diffusion model architecture is a two-phase process: forward diffusion and reverse denoising. In the forward phase, noise is gradually added to clean data until its structure is destroyed. In the reverse phase, the model learns to undo this corruption one step at a time, typically by predicting the noise that was added at each step, so that at generation time it can start from pure noise and denoise its way to a new sample. The transitions form a Markov chain: each state depends only on the one before it. Some models use latent diffusion, which first compresses the data into a smaller latent space with an autoencoder and runs the diffusion process there, making training and generation more efficient and scalable.
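For readers who want the math, the two phases can be written in the widely used DDPM formulation (Ho et al., 2020). This is one common formulation rather than the only one, and the symbols are that paper's conventions, not something defined elsewhere in this article: β_t is the noise variance added at step t, α_t = 1 − β_t, ᾱ_t is the running product of the α values, and ε_θ is the network that predicts the added noise.

```latex
% Forward step: add a little Gaussian noise (one link of the Markov chain)
q(x_t \mid x_{t-1}) = \mathcal{N}\big(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t I\big)

% Closed form: jump from clean data x_0 straight to step t
q(x_t \mid x_0) = \mathcal{N}\big(x_t;\ \sqrt{\bar\alpha_t}\,x_0,\ (1-\bar\alpha_t) I\big),
\qquad \alpha_t = 1-\beta_t,\quad \bar\alpha_t = \prod_{s=1}^{t}\alpha_s

% Reverse step: learned denoiser, parameterized by the predicted noise
p_\theta(x_{t-1} \mid x_t) = \mathcal{N}\big(x_{t-1};\ \mu_\theta(x_t,t),\ \sigma_t^2 I\big),
\qquad \mu_\theta(x_t,t) = \frac{1}{\sqrt{\alpha_t}}\Big(x_t - \frac{\beta_t}{\sqrt{1-\bar\alpha_t}}\,\epsilon_\theta(x_t,t)\Big)

% Simplified training objective: predict the noise that was added
L_{\text{simple}} = \mathbb{E}_{t,\,x_0,\,\epsilon \sim \mathcal{N}(0,I)}
\Big[\big\lVert \epsilon - \epsilon_\theta\big(\sqrt{\bar\alpha_t}\,x_0 + \sqrt{1-\bar\alpha_t}\,\epsilon,\ t\big)\big\rVert^2\Big]
```

The closed form is what makes training practical: any step t can be sampled directly from x_0 without simulating the whole chain.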

Key Points:

- Forward process: Adds noise to data over time.

- Reverse process: Learns to denoise and recover the original data.

- Based on Markov chain transitions for step-wise modeling.

- Latent diffusion improves speed by working in compressed space.

- Enables fine-grained and high-quality data generation.
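The two phases can be sketched in a few lines of stdlib-only Python. This is a minimal illustration on a single number instead of an image, assuming the linear noise schedule (1e-4 to 0.02 over 1000 steps) and noise-prediction setup from the original DDPM paper. The trained network is replaced by a hypothetical `oracle_eps` helper that already knows the one training point, so the example shows the mechanics of the Markov chain, not learning. All function names are illustrative.

```python
import math
import random


def linear_beta_schedule(T, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_t, linearly spaced (the DDPM paper's default schedule)."""
    return [beta_start + (beta_end - beta_start) * i / (T - 1) for i in range(T)]


def alpha_bars(betas):
    """Cumulative products alpha_bar_t = prod_{s<=t} (1 - beta_s)."""
    out, prod = [], 1.0
    for beta in betas:
        prod *= 1.0 - beta
        out.append(prod)
    return out


def q_sample(x0, t, alpha_bar, eps):
    """Forward process in closed form: jump from clean x0 straight to noisy x_t."""
    return math.sqrt(alpha_bar[t]) * x0 + math.sqrt(1.0 - alpha_bar[t]) * eps


def oracle_eps(x_t, t, alpha_bar, x0):
    """HYPOTHETICAL stand-in for a trained network eps_theta(x_t, t).

    It "knows" the single training point x0, so it can return the exact noise.
    A real model has to learn this prediction from data.
    """
    return (x_t - math.sqrt(alpha_bar[t]) * x0) / math.sqrt(1.0 - alpha_bar[t])


def p_sample_loop(betas, alpha_bar, predict_eps, rng):
    """Reverse process: start from pure noise and denoise one step at a time."""
    x = rng.gauss(0.0, 1.0)  # x_T ~ N(0, 1)
    for t in reversed(range(len(betas))):  # 0-based step index
        eps_hat = predict_eps(x, t)
        mean = (x - betas[t] / math.sqrt(1.0 - alpha_bar[t]) * eps_hat) / math.sqrt(1.0 - betas[t])
        # Markov chain: the next state depends only on the current x.
        # sigma_t = sqrt(beta_t); no noise is added on the final step.
        z = rng.gauss(0.0, 1.0) if t > 0 else 0.0
        x = mean + math.sqrt(betas[t]) * z
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    betas = linear_beta_schedule(1000)
    ab = alpha_bars(betas)
    x0 = 2.0
    print(f"alpha_bar at the last step: {ab[-1]:.2e}")  # near 0: x_T is almost pure noise
    print(f"x0 noised to t=500: {q_sample(x0, 500, ab, rng.gauss(0.0, 1.0)):.3f}")
    sample = p_sample_loop(betas, ab, lambda x, t: oracle_eps(x, t, ab, x0), rng)
    print(f"sample after reverse process: {sample:.6f}")  # recovers x0 = 2.0
```

With a real model, `predict_eps` would be a neural network (in latent diffusion, operating on the autoencoder's latents) and the sample would be a new data point rather than a copy of the training example.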

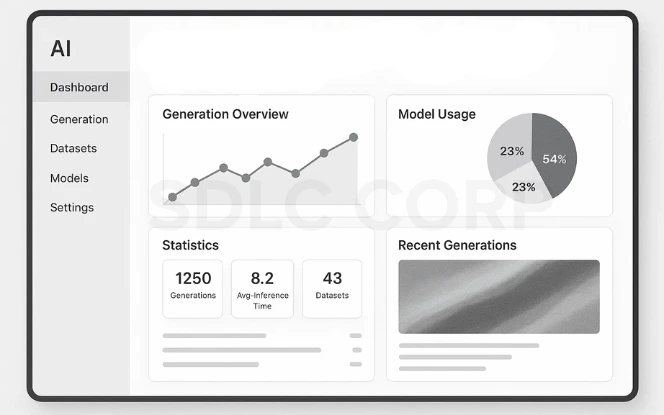

4. Applications of Diffusion Models

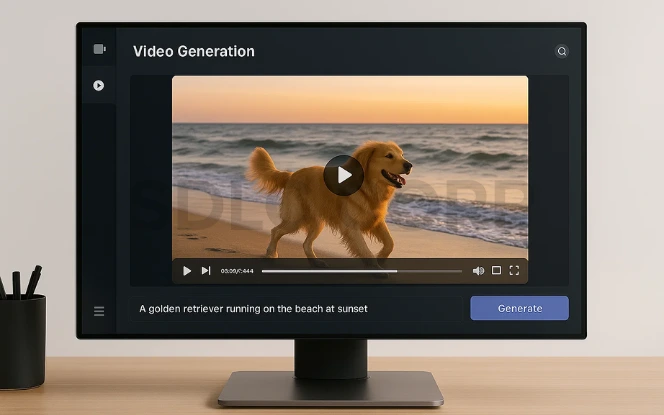

Diffusion models are being widely adopted across creative and scientific fields because of their ability to generate highly realistic content. In image creation, tools like Stable Diffusion, DALL·E 2, and Midjourney use text-to-image diffusion models to turn prompts into detailed visuals. These models also power diffusion-based audio synthesis, enabling high-quality voice, music, and ambient sound generation. In video and animation, they enable frame-by-frame generation and enhancement. Diffusion models are also making strides in medical imaging and molecular modeling, where precision is crucial.

Key Applications:

- Text-to-Image: Create art and visuals from natural language.

- Stable Diffusion use cases: Design, storytelling, concept art.

- Audio Synthesis: Generate realistic sounds and voices.

- Video Generation: Animate scenes and enhance quality.

- Scientific Imaging: Analyze and simulate medical or molecular data.

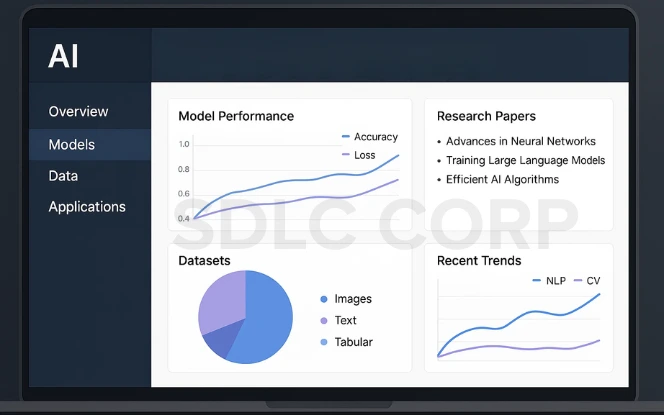

5. Popular Diffusion Models in Practice

Several diffusion models have set benchmarks in generative AI, each excelling in different areas. Stable Diffusion stands out for its open-source accessibility and wide creative use. DALL·E 2 by OpenAI and Imagen by Google are popular text-to-image models known for producing vivid, detailed visuals from natural-language prompts. Another strong contender is GLIDE, also from OpenAI, which combines diffusion with guidance techniques for outputs that follow the prompt more closely. Together, these models show the range of diffusion capabilities across industries, from art to marketing and research.

Popular Diffusion Models:

- Stable Diffusion – Flexible, open-source, used for artwork, UI concepts, and storytelling.

- DALL·E 2 – Creates surreal, artistic, and abstract visuals from simple text.

- Imagen – Focuses on photorealism with precise prompt understanding.

- GLIDE – Integrates guidance to produce more accurate and directed outputs.
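GLIDE's authors compared two guidance strategies, CLIP guidance and classifier-free guidance, and human evaluators preferred the latter; classifier-free guidance has since become standard in text-to-image pipelines such as Stable Diffusion. The sketch below shows only its combination step, assuming the model's two noise predictions arrive as flat lists of numbers. The function name and inputs are illustrative, not any library's API; in a real pipeline both predictions come from running the same network with and without the prompt at every denoising step.

```python
def classifier_free_guidance(eps_uncond, eps_cond, scale):
    """Blend two noise predictions from the same model at one denoising step.

    eps_uncond: prediction with an empty prompt; eps_cond: prediction with the prompt.
    scale = 0 ignores the prompt, scale = 1 is the plain conditional prediction,
    and scale > 1 pushes further in the direction the prompt points.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    uncond = [0.1, -0.2]  # illustrative numbers, not real model outputs
    cond = [0.3, 0.0]
    for s in (0.0, 1.0, 3.0):
        print(s, [round(v, 3) for v in classifier_free_guidance(uncond, cond, s)])
```

Higher scales usually make outputs follow the prompt more literally at some cost in diversity, which is why the scale is typically exposed as a user setting.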

6. Strengths and Limitations

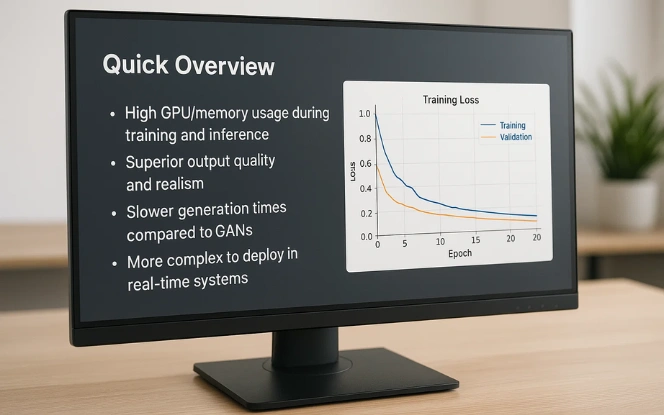

One of the key advantages of diffusion models is their ability to generate high-quality, detailed, and diverse content with impressive realism. They are flexible across tasks such as image, video, and audio generation, making them well suited to creative and scientific domains. However, they have high computational requirements and slower inference than models like GANs, because each sample requires many sequential denoising steps. These challenges can make real-time applications or deployment on edge devices difficult without optimization.

Quick Overview:

- Advantages:

  - Superior output quality and realism

  - Stable training and fewer artifacts

  - Versatile across media types (image, audio, video)

- Limitations:

  - High GPU/memory usage during training and inference

  - Slower generation times compared to GANs

  - More complex to deploy in real-time systems

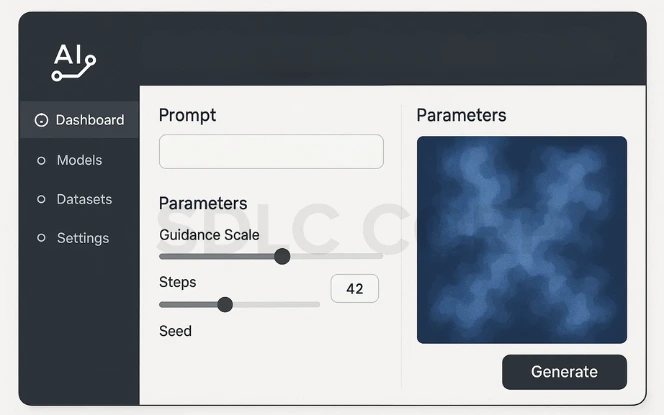
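To see why inference is slower, it helps to count network evaluations: a GAN produces a sample in one forward pass, while a diffusion sampler runs its denoising network once per step, in sequence. The sketch below does that arithmetic under an explicit assumption: every network call costs the same hypothetical 50 ms, which ignores that a GAN generator and a diffusion denoiser usually differ in size and speed. The step counts are illustrative; 1000 steps matches the original DDPM setup, while step-reduction samplers such as DDIM commonly use far fewer.

```python
def network_calls(num_steps, guidance=True):
    """Number of denoiser evaluations for one sample.

    Each denoising step runs the network once, or twice with classifier-free
    guidance (one pass with the prompt, one without).
    """
    return num_steps * (2 if guidance else 1)


def generation_time_ms(num_steps, ms_per_call, guidance=True):
    """Rough latency estimate: steps run sequentially, so their costs add up."""
    return network_calls(num_steps, guidance) * ms_per_call


if __name__ == "__main__":
    MS_PER_CALL = 50.0  # HYPOTHETICAL cost of one forward pass, not a measurement
    scenarios = {
        "single-pass generator (GAN-style)": (1, False),
        "diffusion, 50 steps + guidance": (50, True),
        "diffusion, 1000 steps, no guidance": (1000, False),
    }
    for name, (steps, guided) in scenarios.items():
        print(f"{name}: {generation_time_ms(steps, MS_PER_CALL, guided):,.0f} ms")
```

Because the total grows linearly with the number of steps, most speed-up work targets the step count first, then the cost of each call.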

7. Prompt Engineering for Diffusion Models

Effective prompt engineering plays a vital role in controlling the quality and style of outputs from models like Stable Diffusion. The wording, structure, and specificity of a text-to-image prompt directly influence how accurately the model interprets and visualizes a concept. Carefully crafted prompts can produce highly detailed, stylized, or photorealistic results, while vague ones may lead to generic or distorted outputs. As prompt control becomes more important in creative workflows, many users optimize their Stable Diffusion prompts with curated datasets, prompt guides, and AI-powered prompt tools.

Prompt Engineering Tips:

- Be descriptive: Use adjectives and stylistic terms (e.g., “cinematic lighting”, “ultra-realistic”).

- Include subject, style, and composition for clarity.

- Use prompt generators or online tools for inspiration.

- Experiment with modifiers like artist names or rendering styles.

- Save successful prompts to build reusable prompt libraries.
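The tips above can be turned into a small helper that assembles prompts from named parts and stores the ones that work. This is a hypothetical sketch: the comma-separated layout is a common convention for Stable Diffusion-style prompts rather than a required format, and `build_prompt`, `save_prompt`, and the JSON library file are illustrative names, not part of any tool.

```python
import json
import tempfile
from pathlib import Path


def build_prompt(subject, composition="", style=(), modifiers=()):
    """Assemble a comma-separated prompt: subject, composition, style terms, modifiers."""
    parts = [subject, composition, *style, *modifiers]
    return ", ".join(p.strip() for p in parts if p and p.strip())


def save_prompt(library_file, name, prompt):
    """Store a successful prompt under a name in a JSON prompt library."""
    path = Path(library_file)
    library = json.loads(path.read_text(encoding="utf-8")) if path.exists() else {}
    library[name] = prompt
    path.write_text(json.dumps(library, indent=2), encoding="utf-8")
    return library


if __name__ == "__main__":
    prompt = build_prompt(
        "a lighthouse on a rocky coast",
        composition="wide shot at dusk",
        style=["cinematic lighting", "ultra-realistic"],
        modifiers=["highly detailed"],
    )
    print(prompt)
    # a lighthouse on a rocky coast, wide shot at dusk, cinematic lighting, ultra-realistic, highly detailed
    with tempfile.TemporaryDirectory() as tmp:
        print(save_prompt(Path(tmp) / "prompts.json", "lighthouse-dusk", prompt))
```

Keeping subject, composition, and style as separate arguments makes it easy to vary one part at a time while experimenting, and the saved library doubles as a record of what worked.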

8. Latest Research and Developments

The latest diffusion model research focuses on improving efficiency, scalability, and generation quality. One notable development is ELR-Diffusion, which uses efficient low-rank layers to speed up training while preserving performance. Researchers are also exploring other low-rank training methods to reduce computational cost without sacrificing output fidelity. In addition, Diffusion Transformers replace the U-Net backbone commonly used in diffusion models with a transformer, blending the two architectures to enable better sequence modeling and multi-modal generation.

Key Innovations:

- ELR-Diffusion: Enhances training speed using low-rank parameterization.

- Low-Rank Optimization: Reduces GPU usage, ideal for large-scale models.

- Diffusion Transformers: Combine transformers and diffusion for richer, contextual outputs.

- Ongoing work focuses on real-time generation and memory-efficient diffusion models.
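ELR-Diffusion's exact layer design is beyond the scope of this article, so the sketch below only illustrates the general idea behind low-rank parameterization: replacing a dense weight matrix W with the product of two thin matrices B and A of rank r. It is not ELR-Diffusion's method; the layer width (1024) and rank (16) are illustrative assumptions, and the helper names are hypothetical.

```python
def dense_params(d_out, d_in):
    """Weights in a full d_out x d_in matrix W."""
    return d_out * d_in


def low_rank_params(d_out, d_in, rank):
    """Weights when W is replaced by B @ A, with B: d_out x rank and A: rank x d_in."""
    return rank * (d_out + d_in)


def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m_ij * x_j for m_ij, x_j in zip(row, x)) for row in M]


def low_rank_forward(B, A, x):
    """Compute (B @ A) @ x as B @ (A @ x), never materializing the full matrix."""
    return matvec(B, matvec(A, x))


if __name__ == "__main__":
    d, r = 1024, 16  # illustrative layer width and rank
    full, low = dense_params(d, d), low_rank_params(d, d, r)
    print(f"dense: {full:,} weights, rank-{r}: {low:,} weights ({full // low}x fewer)")
    # dense: 1,048,576 weights, rank-16: 32,768 weights (32x fewer)
```

The saving has a price: a rank-r product can only represent matrices of rank at most r, so the choice of r trades memory and compute against expressiveness.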

9. Future of Diffusion Models

The future of diffusion models looks promising as researchers push toward faster, more scalable, and multi-modal capabilities. Innovations are making real-time generation increasingly feasible, opening doors for live content creation and interactive AI systems. Beyond image and audio, diffusion is expanding into new territories like molecular design, robotics, and 3D generation, where precision and structure are crucial. As a result, diffusion is set to play a major role in building next-generation generative models across science, design, and automation.

What’s Ahead:

- Real-time diffusion for interactive apps and games.

- 3D content generation for VR/AR, architecture, and robotics.

- AI-assisted molecular design and drug discovery.

- Integration of multi-modal generation (text + image + audio).

- Lighter models for mobile and on-device deployment.


10. Expanding the Role of Diffusion Models in Generative AI

Diffusion models have become a key part of generative AI, offering high-quality and controllable outputs through a step-by-step denoising process. They're now used in areas like text-to-image generation, video creation, and even drug discovery, thanks to their precision and flexibility. Models like Stable Diffusion and Imagen show how these systems can surpass older methods like GANs in output quality. With growing support from open-source tools and better hardware, diffusion models are now widely adopted across AI development services, research, and creative industries.

Extended Key Insights:

- Multi-modal generation: Can work across text, image, audio, and even molecular data.

- Controllable outputs: Enables guided generation using text prompts or constraints.

- Ethical generation: Intermediate denoising steps can be inspected, which may offer more transparency and traceability than single-pass, black-box GANs.

- Scalability: Can generate from small to ultra-high-resolution content (e.g., 4K images).

- Integration-ready: Easily incorporated into pipelines for design, content creation, or simulation.

Conclusion

In the rapidly evolving world of generative AI, diffusion models have set a new standard for high-quality, controllable, and multi-modal content generation. From realistic image synthesis to advanced scientific simulations, these models offer unmatched precision and flexibility. Their structured denoising approach not only ensures better output but also opens the door to more transparent and ethical AI applications.

As an innovative AI development company, SDLC Corp leverages the power of diffusion models to build cutting-edge solutions for design, automation, healthcare, and beyond. By integrating the latest in generative technology, the company is empowering businesses to harness AI for smarter, faster, and more creative outcomes.

FAQs

1. What are diffusion models in generative AI?

Diffusion models are a type of generative AI algorithm that create data by gradually reversing a noise process. They start with random noise and use a learned denoising method to produce detailed images, audio, or other data types.

2. How do diffusion models differ from GANs?

While GANs use a generator-discriminator setup and can be unstable, diffusion models generate data step-by-step, making them more stable and often capable of producing higher-quality results. This makes them a preferred choice for many modern AI tasks.

3. What are some real-world applications of diffusion models?

Diffusion models are used in text-to-image generation, video synthesis, audio creation, medical imaging, and even scientific fields like drug discovery and molecular design. Tools like Stable Diffusion and DALL·E 2 are based on this technology.

4. Why are diffusion models considered important in AI development services?

Due to their flexibility and high-quality output, diffusion models are widely used in AI development services to build intelligent applications in design, automation, and creative industries. Their scalability also makes them ideal for enterprise-grade AI solutions.

5. Can diffusion models be used for real-time applications?

Currently, diffusion models are slower than some other approaches because they generate output in many sequential steps. However, research into faster sampling with fewer steps, along with other optimizations (e.g., low-rank training), is ongoing, making real-time use cases increasingly viable.

6. What are some popular diffusion models in practice?

Some of the most recognized diffusion models include Stable Diffusion, DALL·E 2, Imagen, and GLIDE. These models are known for producing high-resolution, prompt-guided outputs and are widely adopted in creative and commercial projects.