Introduction

Generative AI creates new content (text, images, audio, or code) based on patterns it has learned from large datasets. In healthcare, this technology can draft patient notes, assist with diagnostics, and accelerate drug discovery. It is already in use in hospitals, labs, and research centers. The promise is clear: less administrative work for clinicians, faster insights for researchers, and more personalized care for patients.

But while the technology moves fast, healthcare demands accuracy, safety, and trust. This post explores what generative AI in healthcare is, how it works, where it is being used, and the challenges it must overcome before it becomes a trusted tool in clinical practice.

How Generative AI Works in Healthcare

In healthcare, two types of generative AI are most relevant:

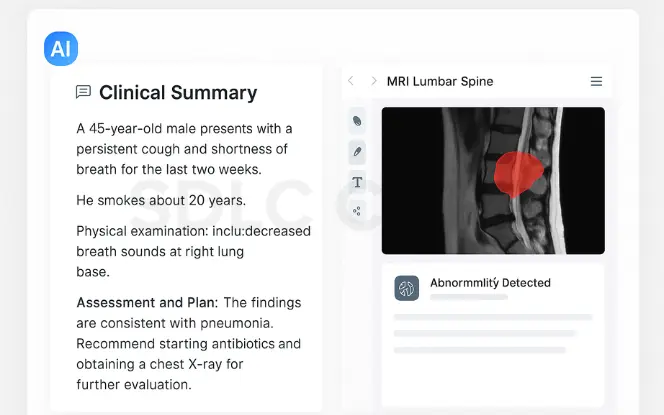

- Large Language Models (LLMs): Trained on text, they can generate clinical summaries, explain diagnoses, and support medical education.

- Image Generation Models: Trained on imaging datasets (X-rays, MRIs, pathology slides), they can simulate or enhance images to support diagnosis.

For example:

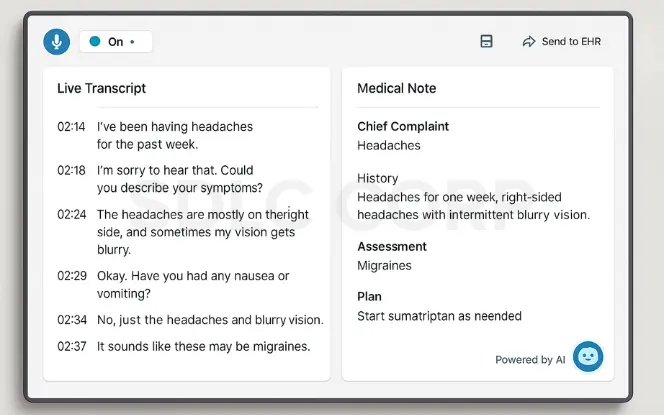

- An LLM can transcribe and summarize a doctor–patient conversation into a structured medical note.

- An image model can enhance low-quality scans, helping radiologists spot subtle signs of disease.

This combination of text and image generation opens new ways to manage workloads and improve decision-making.

Key Applications of Generative AI in Healthcare

1. Clinical Documentation and AI Scribes

Clinical workflows depend on accurate and timely documentation. Generative AI can help draft summaries, consolidate patient records, and support non-diagnostic decision processes. In these scenarios, a generative AI consulting company can help healthcare providers evaluate appropriate use cases, manage risk, and ensure responsible use aligned with clinical governance.

Physicians spend a large share of their time on paperwork. Generative AI can automate much of this work:

- Transcribing consultations in real time.

- Summarizing findings into structured formats.

- Filling forms for insurance and referrals.

Early pilots report meaningful time savings, allowing doctors to focus more on patient care. For example, Nuance DAX, Microsoft's ambient clinical intelligence tool, is being tested in multiple US hospitals.
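The "structured formats" step above usually means asking a model for machine-readable output and refusing anything that does not fit the expected template. The Python sketch below assumes the model returns a JSON object with four hypothetical section names (`chief_complaint`, `history`, `assessment`, `plan`); real note templates vary by institution, and the model call itself is out of scope. It checks only the shape of the draft, not its clinical accuracy, so a clinician still reviews the content.

```python
import json
from dataclasses import dataclass

# Hypothetical section names; real templates differ by institution and specialty.
REQUIRED_SECTIONS = ("chief_complaint", "history", "assessment", "plan")


@dataclass
class StructuredNote:
    chief_complaint: str
    history: str
    assessment: str
    plan: str


def parse_note(raw_model_output: str) -> StructuredNote:
    """Parse a model's JSON draft into a note, rejecting malformed or incomplete output.

    Raises ValueError (json.JSONDecodeError is a subclass) so callers can route
    the draft back for regeneration or manual writing.
    """
    data = json.loads(raw_model_output)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    # A section counts as missing if it is absent, not a string, or blank.
    missing = [
        name for name in REQUIRED_SECTIONS
        if not isinstance(data.get(name), str) or not data[name].strip()
    ]
    if missing:
        raise ValueError(f"draft is missing sections: {missing}")
    return StructuredNote(**{name: data[name].strip() for name in REQUIRED_SECTIONS})


if __name__ == "__main__":
    draft = json.dumps({
        "chief_complaint": "Cough for three days",
        "history": "No fever reported.",
        "assessment": "Possible viral infection.",
        "plan": "Rest, fluids, review in one week.",
    })
    print(parse_note(draft).plan)  # Rest, fluids, review in one week.
```

Rejecting an incomplete draft, rather than silently filling gaps, keeps failures visible to the care team.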

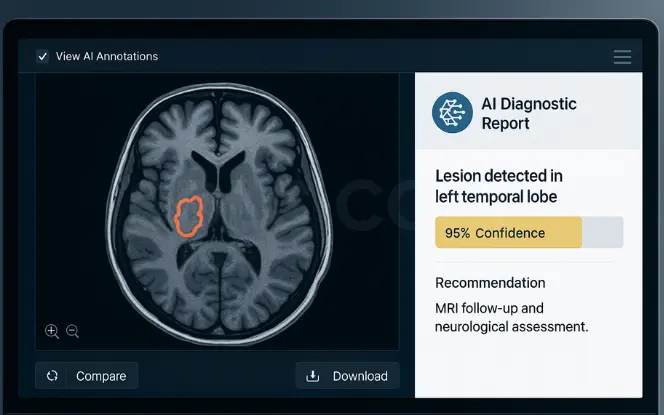

2. Diagnostic Support and Medical Imaging

AI, including generative models, can support medical image analysis:

- Radiology: AI systems help flag fractures, tumors, or lesions faster.

- Pathology: AI-generated “synthetic” tissue images help train models while reducing reliance on sensitive patient data.

- Cardiology: AI-enhanced echocardiograms produce clearer images for early disease detection.

These tools don’t replace clinicians; they act as a second set of eyes, highlighting areas worth reviewing.

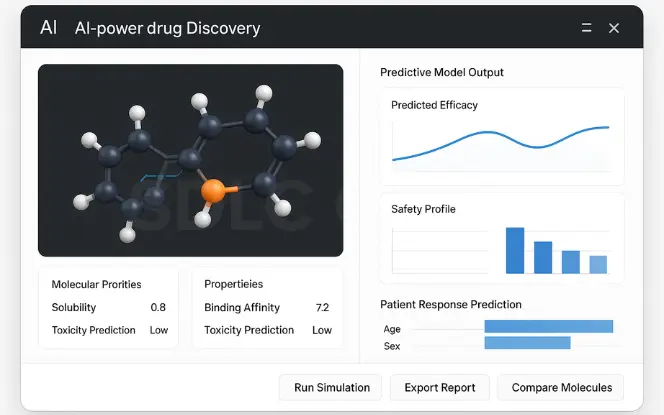

3. Drug Discovery and Clinical Trials

Drug development is slow and expensive. Generative AI can speed up:

- Molecule design: AI proposes new compounds with desired chemical properties.

- Predictive modeling: AI estimates how a drug will interact with the body.

- Trial optimization: AI models can simulate patient responses, improving trial design.

Insilico Medicine, for example, used generative AI to design a novel drug candidate for fibrosis in under 18 months, a process that traditionally takes years.

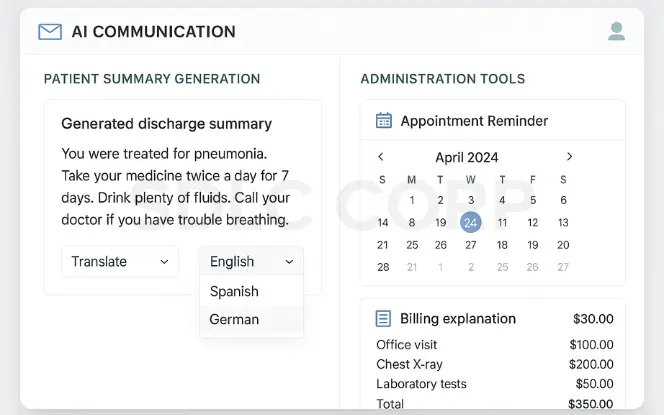

4. Patient Communication and Administration

Hospitals are using AI to:

- Generate discharge summaries with clear, patient-friendly language.

- Translate medical instructions into multiple languages.

- Automate appointment reminders and billing explanations.

This can improve patient understanding and help reduce missed follow-ups.

Risks and Ethical Concerns

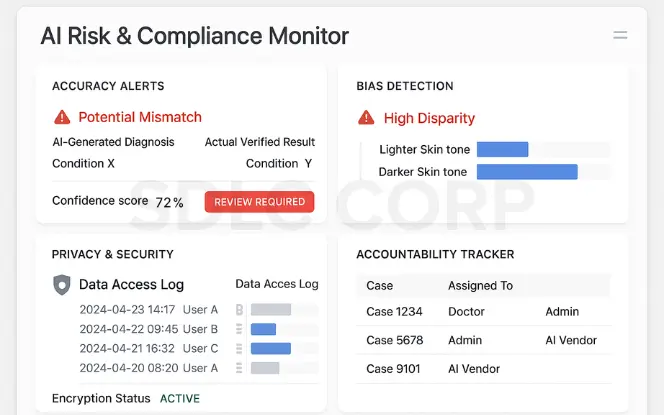

1. Accuracy and Hallucinations

Generative AI can produce outputs that look correct but are factually wrong. In medicine, even a small error can have serious consequences. Without rigorous validation, AI-generated notes or diagnoses could mislead clinicians.
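One simple, partial form of validation is to flag content in an AI-generated note that has no support in the source conversation. The sketch below assumes a small hypothetical medication vocabulary and whole-word matching. It cannot catch wrong doses, negations ("no longer taking warfarin"), or misspellings, so it is a review aid for clinicians rather than a safeguard on its own.

```python
import re

# Hypothetical, deliberately tiny vocabulary; a real system would use a curated
# drug terminology and much more robust matching than whole-word lookup.
KNOWN_MEDICATIONS = {"amoxicillin", "ibuprofen", "lisinopril", "metformin", "warfarin"}


def words(text: str) -> set:
    """Lower-cased alphabetic tokens from free text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def unsupported_medications(transcript: str, ai_note: str) -> set:
    """Medications named in the AI note that never appear in the source transcript."""
    mentioned_in_note = words(ai_note) & KNOWN_MEDICATIONS
    mentioned_in_transcript = words(transcript) & KNOWN_MEDICATIONS
    return mentioned_in_note - mentioned_in_transcript


if __name__ == "__main__":
    transcript = "Patient reports knee pain. Advised ibuprofen with food."
    ai_note = "Plan: ibuprofen 400 mg with food; start warfarin."
    print(unsupported_medications(transcript, ai_note))  # {'warfarin'}
```

Anything returned here is a prompt for a human to check the recording, not proof that the note is wrong.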

2. Bias and Inequality

If training data lacks diversity, AI may underperform for certain populations. For instance, skin cancer detection models trained mostly on images of lighter skin tones may be less accurate for darker skin tones.
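Detecting this kind of gap means measuring performance separately for each group rather than only in aggregate. The Python sketch below computes sensitivity (the share of true disease cases the model catches) per group and flags groups that trail the best group by more than a threshold. The group labels, the toy records, and the 5-percentage-point threshold are illustrative assumptions, not clinical standards.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Sensitivity (true-positive rate) for each group.

    Each record is (group, true_label, predicted_label), with 1 meaning disease
    present and 0 absent. Groups with no positive cases are left out because
    sensitivity is undefined for them.
    """
    positives = defaultdict(int)
    true_positives = defaultdict(int)
    for group, truth, prediction in records:
        if truth == 1:
            positives[group] += 1
            if prediction == 1:
                true_positives[group] += 1
    return {group: true_positives[group] / positives[group] for group in positives}


def flag_gaps(rates, max_gap=0.05):
    """Groups whose sensitivity trails the best-performing group by more than max_gap."""
    if not rates:
        return []
    best = max(rates.values())
    return sorted(group for group, rate in rates.items() if best - rate > max_gap)


if __name__ == "__main__":
    # Toy, made-up records: (skin-tone group, true label, model prediction).
    records = [
        ("lighter", 1, 1), ("lighter", 1, 1), ("lighter", 1, 1), ("lighter", 1, 0), ("lighter", 0, 0),
        ("darker", 1, 1), ("darker", 1, 0), ("darker", 1, 0), ("darker", 1, 1), ("darker", 0, 1),
    ]
    rates = sensitivity_by_group(records)
    print(rates)             # {'lighter': 0.75, 'darker': 0.5}
    print(flag_gaps(rates))  # ['darker']
```

Sensitivity is only one lens; a fuller audit would also compare specificity and calibration across groups, ideally with enough cases per group for the comparison to be meaningful.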

3. Privacy and Security

Healthcare data is highly sensitive. Training models on patient records must comply with strict privacy laws such as HIPAA or GDPR. Synthetic data generation can help, but it’s not foolproof.

4. Accountability

When AI assists in a medical decision, who is responsible if something goes wrong: the doctor, the hospital, or the AI vendor? This legal gray area is still being defined.

Benefits of Generative AI in Healthcare

- Efficiency Gains: Some trials report up to a 40% reduction in administrative workload.

- Better Patient Care: More clinician time for direct interaction.

- Faster Research: Drug candidates can be identified months earlier.

- Personalization: Tailored care plans based on individual health records.

These benefits directly address long-standing issues in healthcare, such as burnout, resource strain, and access delays.

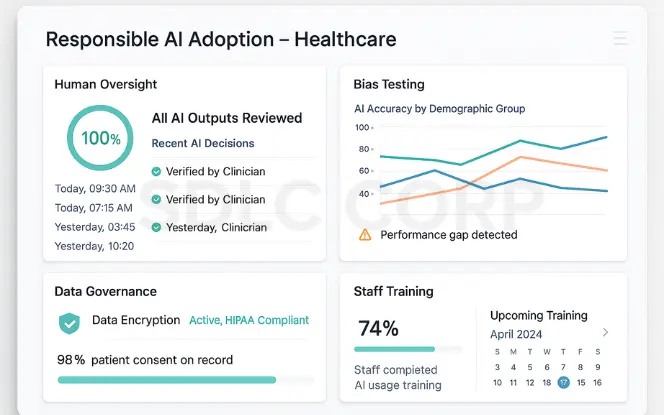

Responsible Adoption of Generative AI in Healthcare

- Human Oversight: Clinicians should verify all AI outputs.

- Bias Testing: Regularly check models for performance across demographics.

- Data Governance: Secure handling of patient data, with consent.

- Staff Training: Ensure healthcare workers understand AI’s capabilities and limitations.
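Human oversight can be enforced in software as well as in policy. The sketch below models one hypothetical workflow: AI output starts as a draft, a clinician may edit it (earlier versions are kept for audit), and nothing is released to the record until a named reviewer approves it. The class, function, and status names are illustrative and not part of any EHR product's API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Optional


class Status(Enum):
    DRAFT = "draft"        # generated by the model, not yet reviewed
    APPROVED = "approved"  # signed off by a named clinician


@dataclass
class AIDraft:
    content: str
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None
    history: List[str] = field(default_factory=list)  # earlier versions, kept for audit

    def revise(self, new_content: str) -> None:
        """Apply a clinician's edit while preserving the previous text."""
        if self.status is not Status.DRAFT:
            raise ValueError("approved content cannot be edited in place")
        self.history.append(self.content)
        self.content = new_content

    def approve(self, reviewer: str) -> None:
        """Record who signed off; an anonymous approval is rejected."""
        if not reviewer.strip():
            raise ValueError("a named reviewer is required")
        self.status = Status.APPROVED
        self.reviewer = reviewer


def release_to_record(draft: AIDraft) -> str:
    """Only clinician-approved content leaves the review queue."""
    if draft.status is not Status.APPROVED:
        raise PermissionError("AI output requires clinician approval")
    return draft.content


if __name__ == "__main__":
    note = AIDraft("Discharge summary drafted by the model.")
    note.revise("Discharge summary corrected by the clinician.")
    note.approve("Dr. Example")
    print(release_to_record(note))  # Discharge summary corrected by the clinician.
```

Keeping the edit history alongside the reviewer's name also helps with the accountability question raised earlier, since it records what the model produced and what a clinician changed.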

The Outlook

Generative AI is not a magic cure for healthcare’s problems. It is a set of powerful tools, but only as reliable as the systems and people managing them. As the technology improves, we can expect:

- More AI integration in electronic health record systems.

- Increased use of synthetic data for research.

- Better transparency on AI decision-making.

The future is likely to be a hybrid model where AI handles repetitive tasks and humans handle complex, high-stakes decisions.

Integration with Healthcare IT Platforms

Generative AI tools often integrate with EHR systems, analytics platforms, and administrative software. Effective integration requires stable infrastructure and secure data flows. Healthcare organizations may rely on AI development services to connect generative models with existing systems while maintaining data integrity and operational continuity.

Conclusion

Generative AI for healthcare holds the potential to make care faster, more efficient, and more personalized. But its use must be grounded in strong governance, clinician oversight, and rigorous testing. If implemented responsibly, it can be a genuine asset—helping doctors spend more time with patients and researchers bring new treatments to market faster.

The path forward isn’t about replacing human expertise. It’s about augmenting it with technology that works reliably in the service of health, supported by skilled teams who understand its capabilities. That makes it essential for organizations to hire generative AI developers with the right expertise to build safe, effective solutions.

Have an idea in mind? Contact us today to start building with SDLC Corp’s expert development team.

FAQs

What is Generative AI in healthcare?

Generative AI in healthcare refers to artificial intelligence systems that can create new medical content, such as clinical notes, diagnostic images, or drug designs, based on patterns learned from large medical datasets. It is used to support documentation, diagnosis, research, and patient communication.

How is Generative AI used in hospitals?

Hospitals use Generative AI for:

- Clinical documentation: drafting patient notes from consultations.

- Medical imaging analysis: detecting anomalies in scans.

- Drug discovery: designing new compounds.

- Administrative tasks: sending reminders and simplifying billing explanations.

Is Generative AI replacing doctors?

No. Generative AI is designed to assist, not replace, healthcare professionals. It handles repetitive or data-heavy tasks so clinicians can focus on patient care. Final decisions remain with trained medical experts.

What are the main risks of Generative AI in healthcare?

Key risks include:

- Accuracy issues: AI can generate incorrect information.

- Bias: models may perform differently across demographic groups.

- Privacy concerns: sensitive patient data must be securely managed.

- Accountability gaps: unclear responsibility in case of AI-related errors.

How can hospitals adopt Generative AI responsibly?

Best practices include:

- Human oversight of AI outputs.

- Regular bias testing.

- Strong data governance aligned with HIPAA or GDPR.

- Comprehensive staff training on AI tools.

What’s the future of Generative AI in healthcare?

Generative AI is likely to become more integrated into electronic health record systems, improve synthetic data generation for research, and help deliver more personalized care plans. Its adoption will depend on regulation, trust, and evidence of clinical value.